Multivariate Testing vs. A/B Testing: Key Differences

Choosing between A/B and multivariate testing for email campaigns comes down to your goals, audience size, and timeline. A/B testing is simple: you test one variable (like subject lines or button colors) to find what works best. It’s ideal for smaller audiences and quick insights. Multivariate testing, on the other hand, examines how multiple elements (like headlines, images, and CTAs) work together. It’s better for large audiences and optimizing complex designs but takes more time and traffic.

Here’s a quick breakdown:

- A/B Testing: Tests one change at a time. Great for small audiences and fast results.

- Multivariate Testing: Tests multiple changes simultaneously. Best for large audiences and detailed improvements.

Quick Comparison:

| Feature | A/B Testing | Multivariate Testing |
|---|---|---|
| Variables Tested | One | Multiple (2+) |
| Traffic Needed | Low (~2,000 visitors) | High (12,000+ visitors) |
| Speed to Results | Fast (days) | Slow (weeks/months) |
| Complexity | Simple | Advanced |
| Goal | Identify single winner | Find best combination |

Start with A/B testing for big changes, then use multivariate testing to refine details once you have a strong foundation. Both methods can improve your campaigns when used strategically.


What is A/B Testing?

A/B testing helps you figure out what works best in your email campaigns. The process involves creating two versions of an email that differ by just one feature - like a subject line or a call-to-action (CTA) button - and sending them to separate audience segments. By keeping everything else the same, you can pinpoint the exact factor influencing performance.

This approach is straightforward: testing a single variable allows you to clearly identify cause and effect. If Version A outperforms Version B, you’ll know exactly what made the difference. The results are easy to understand, even if you’re not an expert in data analysis.

"Email marketing is much harder without A/B testing. Your campaigns will not improve without learning what works and what doesn't." - MailerLite

The effectiveness of A/B testing is well-documented. For example, Barack Obama’s 2007 campaign used it to increase sign-up rates by a whopping 40.6%, resulting in an estimated $60 million in additional donations. Similarly, a Bing test in 2012 boosted ad revenue by 12% simply by tweaking advertising headlines.

These examples show how impactful A/B testing can be. But how does it actually work?

How A/B Testing Works

The process involves dividing your email list into two groups, sending each group a different version of the email, and analyzing the results. You’ll focus on one variable to test - like the subject line, the color of a CTA button, or even the sender name - while keeping all other elements constant. Both versions are sent at the same time, and metrics like open rates, click-through rates, and conversions determine the winner.

For larger email lists (over 1,000 subscribers), many platforms use a 10/10/80 split (often called the 80/20 rule): 10% of your audience receives Version A, another 10% gets Version B, and the remaining 80% receive the winning version. To ensure reliable results, aim for at least 1,000 recipients per variation and a 95% confidence level before declaring a winner. Keep in mind that with 10% test cells, reaching 1,000 recipients per variation requires a list of roughly 10,000 subscribers.
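To make the split and the 95% confidence check concrete, here is a minimal sketch. It assumes a standard two-proportion z-test, which is one common way to test significance; your email platform may use a different method. The function names `split_list` and `two_proportion_z_test` are hypothetical, and the open counts in the example are made up for illustration.

```python
import math


def split_list(list_size: int, test_percent: int = 10) -> tuple[int, int, int]:
    """Return (version_a, version_b, remainder) sizes for a 10/10/80-style split.

    Each test cell receives `test_percent`% of the list; the remainder is held
    back and later receives the winning version.
    """
    cell = list_size * test_percent // 100
    return cell, cell, list_size - 2 * cell


def two_proportion_z_test(successes_a: int, n_a: int,
                          successes_b: int, n_b: int) -> float:
    """Two-sided p-value for the null hypothesis that A and B share one true rate."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled rate, assuming the null hypothesis (no real difference) is true
    pooled = (successes_a + successes_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        # Both versions at 0% or 100%: no detectable difference
        return 1.0
    z = (rate_a - rate_b) / std_err
    # Two-sided tail probability of the standard normal distribution
    return math.erfc(abs(z) / math.sqrt(2))


# Hypothetical result: 220 vs. 180 opens from 1,000 recipients per version
p_value = two_proportion_z_test(220, 1_000, 180, 1_000)
print(f"p-value: {p_value:.3f}; significant at 95%: {p_value < 0.05}")
```

A p-value below 0.05 corresponds to the 95% confidence level mentioned above. With these made-up numbers the difference clears that bar, while a narrower gap such as 210 vs. 200 opens would not.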

Now that you know how it works, let’s look at when A/B testing is most useful.

When to Use A/B Testing

A/B testing shines when you want to test specific elements like subject lines, CTA wording, button colors, or even the best time to send your emails. For instance, you might compare using a person’s name in the "From" field versus a company name or test a straightforward subject line like "50% Off Today" against something more creative.

This method also works well for bigger decisions, like selecting between two completely different email designs or deciding whether to include video content instead of static images.

Tech giants have embraced A/B testing on a massive scale. In 2011, Google conducted more than 7,000 tests, and today, both Microsoft and Google run over 10,000 tests annually. Even Google’s famous experiment with 41 shades of blue for hyperlink text was an A/B test aimed at finding the color that generated the most clicks - and, ultimately, the most ad revenue.

| Metric | What It Measures | Best Used For Testing |
|---|---|---|
| Open Rate | Percentage of recipients who opened emails | Subject lines, sender name, preview text |
| Click-Through Rate (CTR) | Percentage of recipients clicking links | Button color, CTA text, images, layout |
| Unsubscribe Rate | Percentage of recipients who opted out | Content relevance, email frequency |
| Conversion Rate | Percentage completing a goal (e.g., purchase) | Offers, discount codes, landing page alignment |
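Each metric in the table is a simple ratio. The sketch below assumes every rate is measured against delivered emails; some platforms divide by emails sent instead, and click-to-open rate (clicks divided by opens) is a separate metric. The `EmailResults` class and `metric_rates` function are hypothetical names, and the counts are illustrative.

```python
from dataclasses import dataclass


@dataclass
class EmailResults:
    delivered: int      # emails that reached an inbox (denominator for every rate)
    opens: int          # unique opens
    clicks: int         # unique recipients who clicked a link
    unsubscribes: int
    conversions: int    # recipients who completed the goal, e.g. a purchase


def metric_rates(r: EmailResults) -> dict[str, float]:
    """Express each metric from the table as a percentage of delivered emails."""
    def pct(count: int) -> float:
        return 100.0 * count / r.delivered if r.delivered else 0.0

    return {
        "open_rate": pct(r.opens),
        "click_through_rate": pct(r.clicks),
        "unsubscribe_rate": pct(r.unsubscribes),
        "conversion_rate": pct(r.conversions),
    }


version_a = EmailResults(delivered=2_000, opens=500, clicks=100,
                         unsubscribes=4, conversions=20)
print(metric_rates(version_a))
```

Computing the same dictionary for each version makes it easy to compare them on whichever metric matches the element being tested.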

What is Multivariate Testing?

Multivariate testing (MVT) takes a step beyond A/B testing by analyzing multiple variables and their combinations at the same time to determine which setup delivers the best results. Instead of comparing just two versions, MVT evaluates how different elements work together.

"Multivariate testing (MVT) compares multiple variations of several page elements at once to find the combination that drives the best results." - ActiveCampaign

The real strength of MVT lies in uncovering how different elements interact. For instance, a red call-to-action (CTA) button might boost clicks when paired with one headline but could decrease them with another.
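The headline and button example can be expressed as a small calculation. This sketch uses hypothetical click-through rates for a 2 × 2 test (two headlines, two button colors) and measures the interaction as the difference between the red button's effect under each headline, a standard way to read interactions in a factorial design. The function names are illustrative only.

```python
# Hypothetical click-through rates (%) for each headline / CTA-color pairing
click_rates = {
    ("Headline 1", "blue"): 4.0,
    ("Headline 1", "red"): 5.0,
    ("Headline 2", "blue"): 4.5,
    ("Headline 2", "red"): 3.5,
}


def red_button_effect(headline: str) -> float:
    """Change in click-through rate (percentage points) from switching blue to red."""
    return click_rates[(headline, "red")] - click_rates[(headline, "blue")]


def interaction_effect(headline_a: str, headline_b: str) -> float:
    """How much the red-button effect differs between two headlines.

    Zero means the button color behaves the same regardless of headline;
    anything else means the two elements interact.
    """
    return red_button_effect(headline_a) - red_button_effect(headline_b)


print(red_button_effect("Headline 1"))                # → 1.0 (red helps)
print(red_button_effect("Headline 2"))                # → -1.0 (red hurts)
print(interaction_effect("Headline 1", "Headline 2")) # → 2.0
```

Two separate A/B tests that each averaged over headlines would see the red button as roughly neutral here, which is exactly the kind of insight MVT is designed to surface.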

Compared to A/B testing, MVT is more intricate. It requires thorough preparation and advanced analysis. While A/B testing is perfect for testing major changes or new ideas, MVT is better suited for fine-tuning content that’s already performing well. Together, these methods provide a comprehensive approach: A/B testing sets the stage, and MVT refines the details.

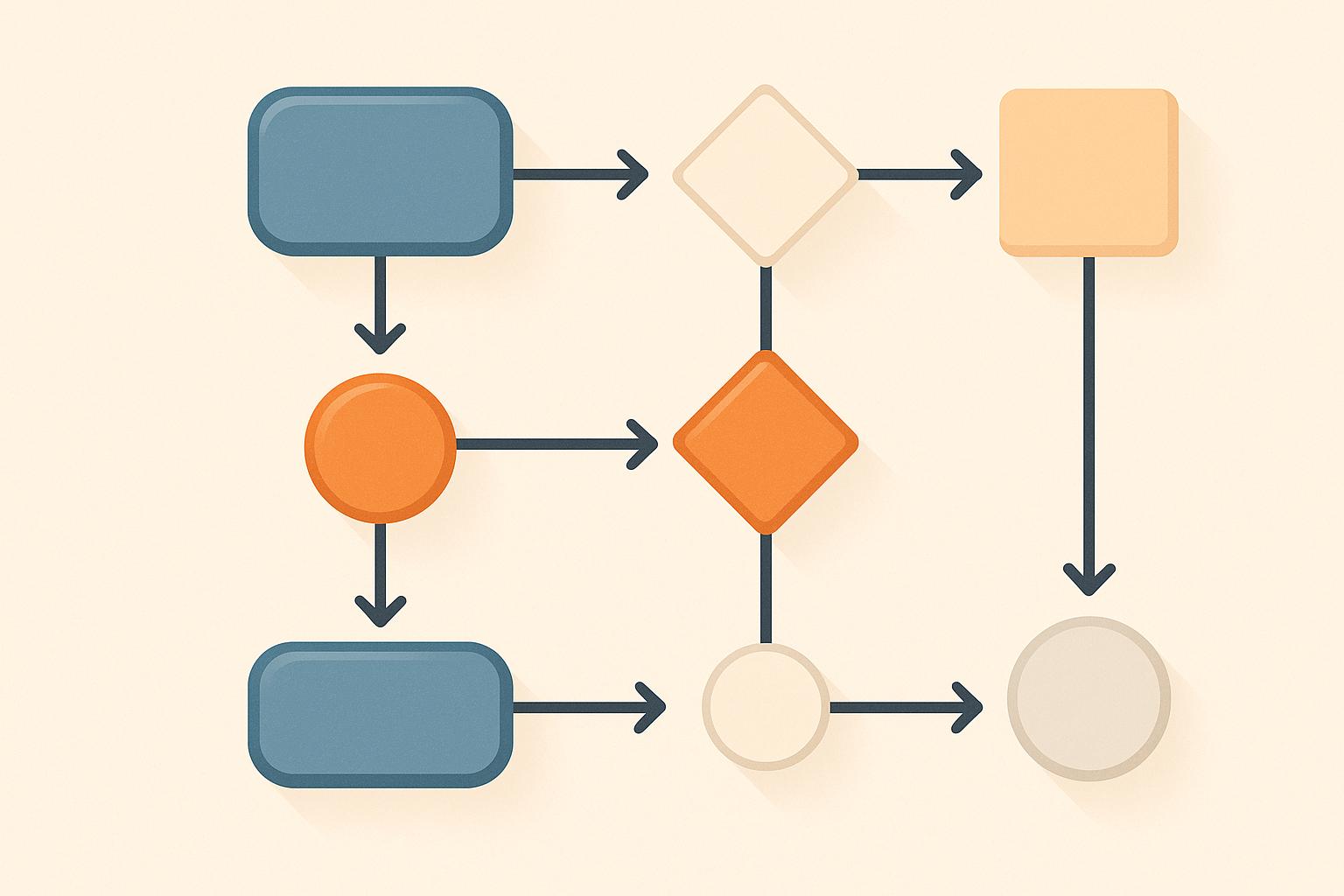

How Multivariate Testing Works

Unlike A/B testing, which focuses on one variable at a time, MVT evaluates several elements together. The process begins by selecting the elements you want to test - such as headlines, images, or CTA buttons. The number of combinations is calculated by multiplying the variations of each element. For example, testing 2 headlines, 3 images, and 2 button colors results in 12 combinations (2 × 3 × 2 = 12).
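Generating every combination is a Cartesian product of each element's variations. A minimal sketch using Python's standard library; the element names and variation labels are placeholders.

```python
from itertools import product
from math import prod

# Hypothetical test plan: 2 headlines x 3 images x 2 button colors
elements = {
    "headline": ["Headline A", "Headline B"],
    "image": ["Image 1", "Image 2", "Image 3"],
    "button_color": ["red", "green"],
}

# Cartesian product: every way to pick one variation of each element
combinations = [dict(zip(elements, values)) for values in product(*elements.values())]

expected = prod(len(options) for options in elements.values())  # 2 * 3 * 2
print(len(combinations), expected)  # → 12 12
print(combinations[0])
```

Listing the combinations explicitly also makes it easier to review each one for mismatched elements before the test goes out.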

Your audience is then divided among these combinations. However, since traffic is spread across smaller groups, MVT requires a larger sample size to achieve reliable results. A general rule is at least 1,000 visitors per combination. For a 12-combination test, this means you’d need at least 12,000 visitors.

The complexity of MVT increases quickly. For instance, testing five elements with three variations each results in 243 combinations, which could take months to gather enough data. To manage this, focus on testing fewer but more impactful elements - like subject lines, hero images, or primary CTA buttons.
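The traffic arithmetic above can be checked quickly. This sketch applies the 1,000-visitors-per-combination rule of thumb from the text and assumes a hypothetical audience of 10,000 visitors per week; `mvt_plan` is an illustrative name, and you would substitute your own traffic figure.

```python
import math


def mvt_plan(variations_per_element: list[int],
             per_combination: int = 1_000,
             weekly_traffic: int = 10_000) -> tuple[int, int, int]:
    """Return (combinations, total visitors needed, weeks to collect them).

    per_combination is the 1,000-per-combination rule of thumb from the text;
    weekly_traffic is a hypothetical figure to replace with your own.
    """
    combinations = math.prod(variations_per_element)
    total_needed = combinations * per_combination
    weeks = math.ceil(total_needed / weekly_traffic)
    return combinations, total_needed, weeks


print(mvt_plan([2, 3, 2]))        # → (12, 12000, 2)
print(mvt_plan([3, 3, 3, 3, 3]))  # → (243, 243000, 25)
```

At the assumed 10,000 visitors a week, the 243-combination test would need about 25 weeks, or roughly six months, which is why trimming the number of elements pays off so quickly.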

When to Use Multivariate Testing

MVT is most effective when you’re working with a large, high-traffic audience - typically 10,000 or more subscribers or visitors - and want to optimize intricate layouts, such as email designs. It’s particularly useful for testing how combinations of subject lines, images, and CTAs perform together rather than separately.

This method is ideal for gaining insights into how different elements interact. For instance, a specific hero image might deliver outstanding results with one subject line but fall flat with another. These insights allow you to make precise, incremental improvements to content that’s already performing well.

If you’re in the early stages of testing or have limited traffic, start with A/B testing. Use it to explore big changes or validate a general direction. Once you have a solid foundation, turn to MVT to refine the details. Just make sure every combination you test makes sense - avoid mismatched elements, like a headline referencing details that only appear in one test image.

Key Differences Between A/B Testing and Multivariate Testing

Both A/B testing and multivariate testing aim to improve performance, but they differ in how they approach the task. A/B testing focuses on isolating one variable at a time, making it easier to pinpoint cause-and-effect relationships. On the other hand, multivariate testing examines multiple variables at once - like testing various headlines, images, and buttons simultaneously - to find the best combination. These differences shape how each method handles traffic, speed, and complexity.

"The critical difference is that A/B testing focuses on two variables, while multivariate is 2+ variables." - Corey Wainwright, HubSpot

For traffic, a typical A/B test requires around 2,000 visitors per week, while a multivariate test with 12 combinations needs at least 12,000 visitors. This is because multivariate testing splits traffic across all the combinations being tested.

Speed is another major factor. A/B tests can deliver results in a matter of days, sometimes even hours. Multivariate testing, however, often takes weeks or months to gather enough data due to the larger number of combinations. Additionally, the setup and analysis for A/B testing are relatively simple, while multivariate testing demands more advanced planning and statistical expertise. These factors highlight the importance of choosing the right method for your specific needs.

Comparison Table: A/B Testing vs. Multivariate Testing

| Feature | A/B Testing | Multivariate Testing |
|---|---|---|
| Number of Variables | One variable at a time | Multiple variables simultaneously (2+) |
| Traffic Requirement | Low to Moderate (~2,000 visitors/week) | High (at least 12,000 visitors/week) |
| Speed to Results | Fast; reaches significance in days or weeks | Slow; can take weeks to months |
| Complexity | Simple setup and analysis | High complexity; requires statistical expertise |
| Primary Goal | Identify which single version is better | Identify the best combination of elements |
| Insights Provided | Clear cause-and-effect for one change | Deep insights into element interactions |

How to Choose Between the Two Methods

The choice between A/B testing and multivariate testing depends on your audience size and goals. A/B testing is ideal for smaller audiences (under 100,000 monthly visitors), quick results, or when testing major layout changes. On the flip side, multivariate testing is better suited for high-traffic pages (at least 12,000 weekly visitors) and refining elements on a page that’s already performing well.

A smart strategy is to start with A/B testing for big changes. Once you’ve identified what works, you can switch to multivariate testing to fine-tune the details. Just make sure to avoid testing during periods of unusual traffic, as this can skew your results.


Pros and Cons of Each Testing Method

Benefits of A/B Testing

A/B testing excels when you're looking for quick, straightforward insights and don't have a ton of traffic to work with. Its simplicity allows you to set up tests without much hassle, and results often come in fast, sometimes within just a few hours. In fact, a winner picked after only a few hours can match the final result more than 80% of the time, whether you're measuring opens or clicks.

This method works particularly well for smaller email lists, making it accessible to most marketers. By focusing on just one variable at a time, you get a crystal-clear understanding of what caused the change in performance. Plus, it’s budget-friendly - you won’t need fancy tools or a specialized team to get started.

"A/B testing is great for speed and clarity. You get answers quickly and the results are easy to explain to people." - Peter Lowe, Crazy Egg

On the other hand, if you're looking to understand how multiple factors interact, multivariate testing might be the better choice.

Benefits of Multivariate Testing

Multivariate testing goes beyond what A/B testing can offer by showing how different elements work together. For instance, a headline that performs well with one image might flop with another, and MVT captures these nuances. Instead of running several A/B tests back-to-back, you can test multiple variables all at once, saving time in the bigger picture.

This approach is perfect for fine-tuning campaigns that are already performing well. If you have a solid email or landing page and want to squeeze out every bit of improvement, MVT provides the insights you need. It digs deep into the combinations of elements, uncovering winning formulas that A/B testing might overlook. This makes it especially useful for campaigns with larger audiences where even small gains can make a big impact.

Comparison Table: Pros and Cons

Here’s a side-by-side look at the strengths and limitations of each method:

| Testing Method | Advantages | Disadvantages |
|---|---|---|
| A/B Testing | Quick results; easy to set up; works with low traffic; clear cause-effect; cost-efficient | Limited to one variable at a time; doesn’t capture interactions between elements |
| Multivariate Testing | Identifies element interactions; tests multiple variables at once; ideal for detailed optimizations; efficient for large campaigns | Needs high traffic (12,000+ visitors/week); slower to reach conclusions; complex analysis; may require a skilled team |

Each method has its place, depending on your goals, audience size, and resources. A/B testing is your go-to for speed and simplicity, while multivariate testing shines when you're ready to dive into the details.

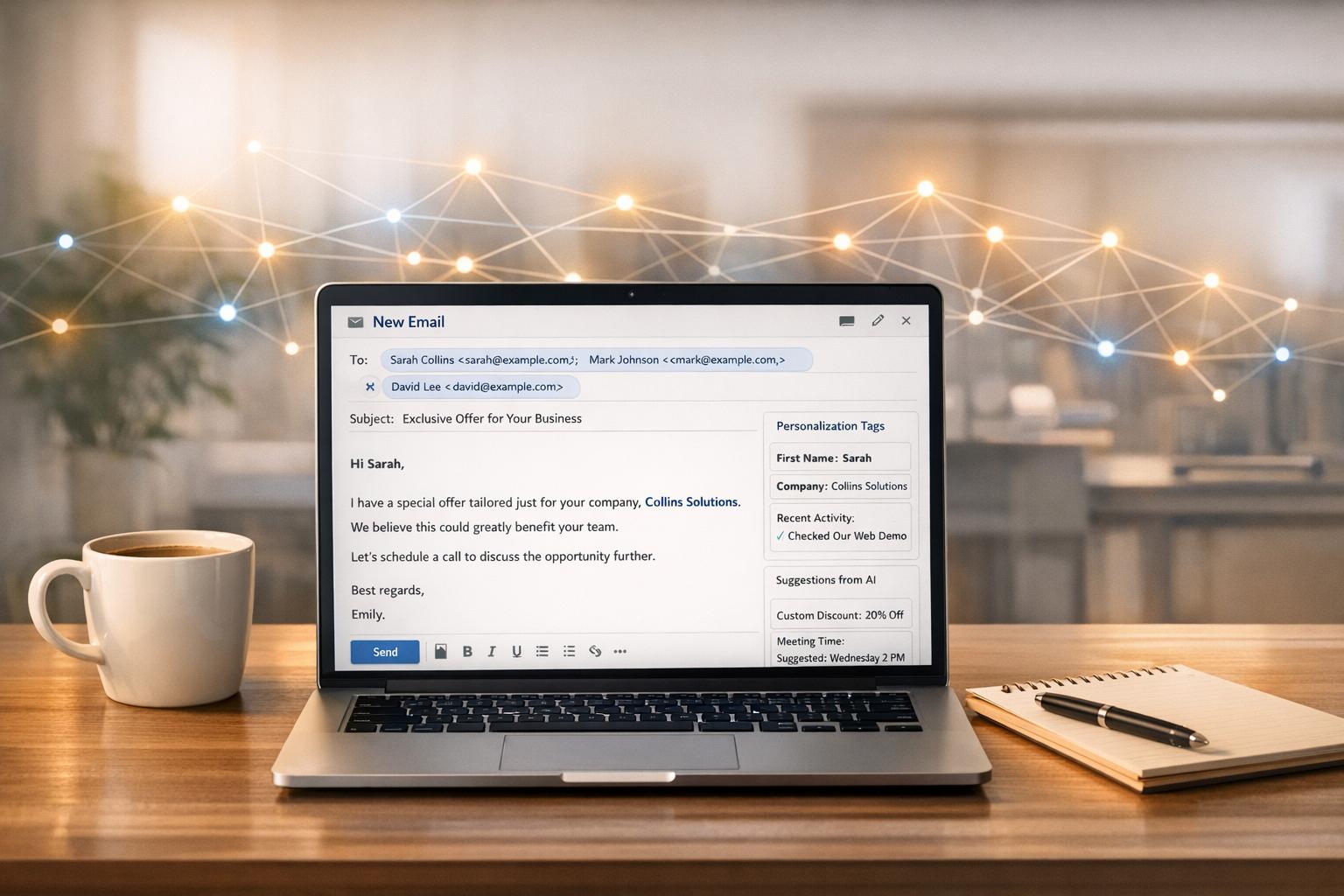

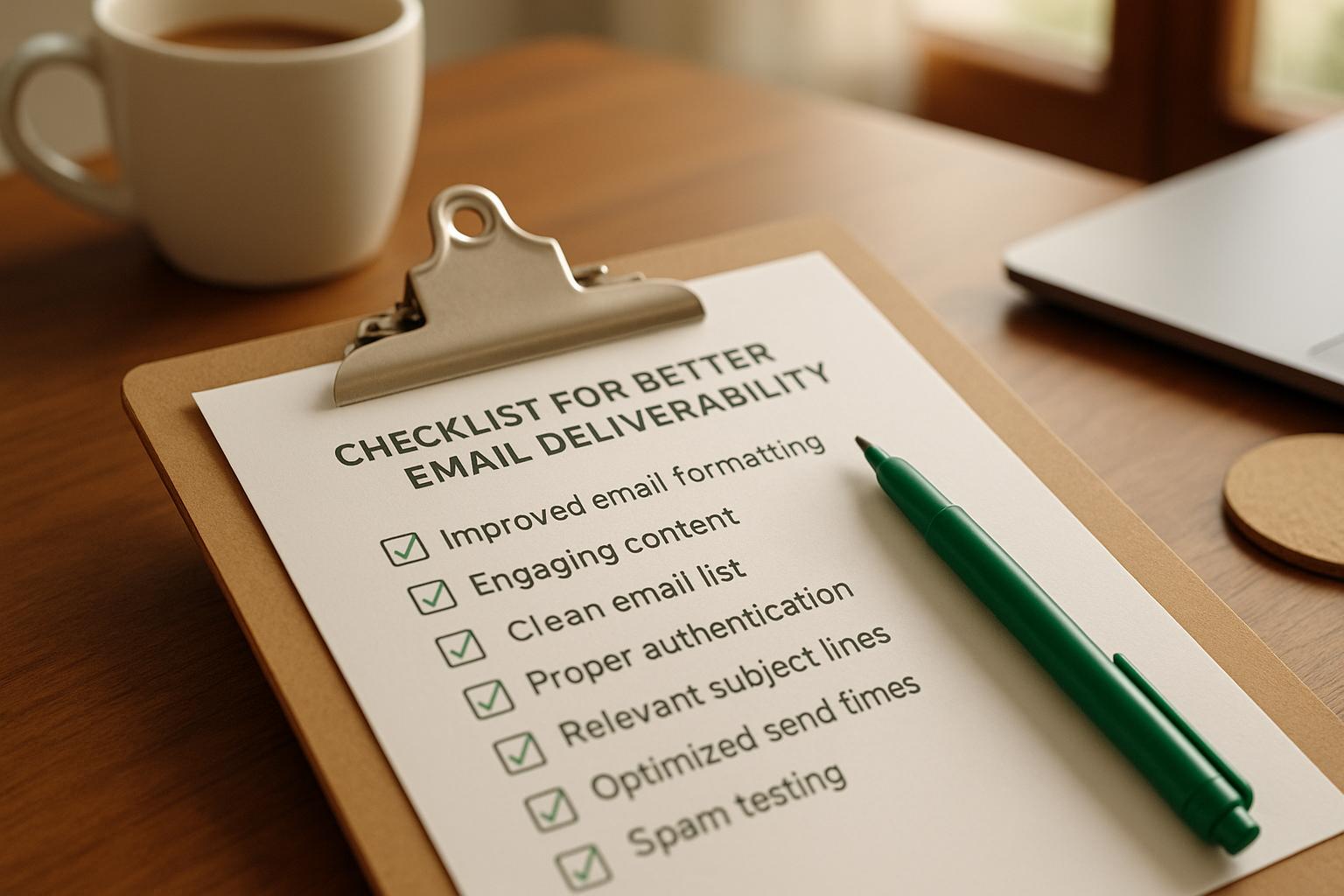

Using Breaker for Newsletter Testing

Breaker makes newsletter testing faster and easier. Whether you're running A/B or multivariate tests, the platform simplifies the process for campaigns of any size. Its real-time analytics dashboard tracks key metrics - like open rates, click-through rates, and conversion rates - while your campaign is live. This allows you to identify trends and address issues as they happen, keeping your testing process smooth and efficient.

For multivariate testing, Breaker takes care of the heavy lifting by automatically creating all possible email variations. For instance, if you're testing three different subject lines and two email designs, the system generates all six combinations for you, eliminating the need for manual setup.

The platform's Intelligent Selection feature ensures real-time optimization by identifying and deploying the best-performing version as the test runs. This means you can get the most out of your campaigns without waiting for the entire test cycle to finish.

Breaker also supports phased rollouts, allowing you to test with a small segment of your audience - say 5% or 25% - before rolling it out to your full list. This approach helps safeguard your sender reputation by catching potential issues early.

Additional features like automated lead generation and built-in deliverability management ensure your emails land in inboxes where they belong. Plus, with unlimited email validations and seamless CRM integrations, Breaker ensures your testing data integrates directly into your broader marketing systems. These tools make it easy to execute the testing strategies outlined earlier.

Conclusion

Deciding between A/B testing and multivariate testing hinges on your campaign goals, audience size, and available resources. A/B testing is a solid choice for evaluating single elements, especially if your audience size is smaller. On the other hand, multivariate testing works best for campaigns with high traffic, as it reveals how various elements interact, making it perfect for refining already successful campaigns.

After pinpointing your top-performing individual elements, you can focus on optimizing how they work together. Both testing methods can provide meaningful insights when aligned with your campaign's needs.

To make this process easier, consider using a platform that simplifies both approaches. For B2B marketers, Breaker offers a user-friendly solution that eliminates the technical hurdles. It automatically creates all email variations for multivariate tests, tracks performance in real-time, and uses Intelligent Selection to deploy winning versions while your campaign is live. Whether you're testing subject lines with a smaller audience of 2,000 subscribers or running intricate multivariate tests with 50,000 contacts, Breaker’s analytics dashboard provides the data you need to make informed decisions. This streamlined approach lets you confidently choose the right testing method for your campaign.

If you lack the traffic needed for multivariate testing, stick with A/B testing. But if your audience size supports it, don’t miss the chance to uncover how multiple elements interact. Align your testing strategy with your campaign's scale and goals to achieve meaningful results.

FAQs

How can I choose between A/B testing and multivariate testing for my email campaign?

Choosing between A/B testing and multivariate testing comes down to your campaign goals and how much complexity you’re ready to tackle.

A/B testing works great when you need to compare two versions of a single element - like a subject line or a call-to-action button - to figure out which one performs better. It’s straightforward, quick, and focuses on just one variable at a time, making it perfect for simpler experiments.

On the other hand, multivariate testing is better suited for examining multiple elements at once - like testing combinations of subject lines, images, and layouts. While it demands a larger audience and involves more complexity, it gives you a deeper understanding of how different components work together to impact results.

In summary, go with A/B testing for simpler, single-variable comparisons, and choose multivariate testing when you want to optimize multiple elements for maximum impact.

How large should your audience be for effective multivariate testing?

For multivariate testing to work well, you need a big enough audience to get results that are statistically reliable. Ideally, each variation in your test should reach at least 5,000 subscribers. This ensures you can gather enough data to understand how different variables interact and make informed decisions to improve your campaign.
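How many recipients each variation really needs depends on your baseline rate and the smallest lift you want to detect. The sketch below uses the common normal-approximation formula for comparing two proportions, assuming a two-sided test at 95% confidence and 80% statistical power (typical defaults, not values from this article). The function name and example rates are hypothetical.

```python
import math
from statistics import NormalDist


def sample_size_per_variation(baseline_rate: float, expected_rate: float,
                              confidence: float = 0.95, power: float = 0.80) -> int:
    """Recipients needed in EACH variation to detect baseline -> expected.

    Uses the common normal-approximation formula for comparing two proportions
    (two-sided test). Rates are fractions, e.g. 0.20 for a 20% open rate.
    """
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 at 95%
    z_beta = NormalDist().inv_cdf(power)                       # about 0.84 at 80%
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Detecting a lift from a 20% to a 22% open rate
print(sample_size_per_variation(0.20, 0.22))
```

In this example each variation needs roughly 6,500 recipients. Because the required size grows with the inverse square of the lift, halving the difference you want to detect roughly quadruples the audience needed per variation.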

If your audience is smaller, you might still get some useful insights, but the results may not be as reliable - especially when testing multiple combinations at once. To get the most accurate data and refine your strategies effectively, focus on reaching a larger audience.

Can you combine A/B testing and multivariate testing in a campaign strategy?

Yes, A/B testing and multivariate testing can absolutely complement each other in a campaign strategy. Here’s how they differ and work together: A/B testing compares two versions of a single element to see which performs better, while multivariate testing evaluates multiple elements simultaneously to identify the best combination.

By starting with A/B testing, you can pinpoint which individual elements - like headlines, call-to-action buttons, or images - have the greatest impact. Once you’ve identified these high-performing elements, you can move on to multivariate testing to determine how they work together as a whole.

This combination allows you to fine-tune your campaigns with precision, improving key aspects like messaging and design. Together, these methods can provide more comprehensive insights, helping you boost both engagement and conversions.