Email A/B Testing Tips for Better ROI

Email A/B testing is a powerful way to improve your email marketing results. By testing small changes - like subject lines, CTAs, or send times - you can determine what works best for your audience and boost your return on investment (ROI). Here are the key takeaways:

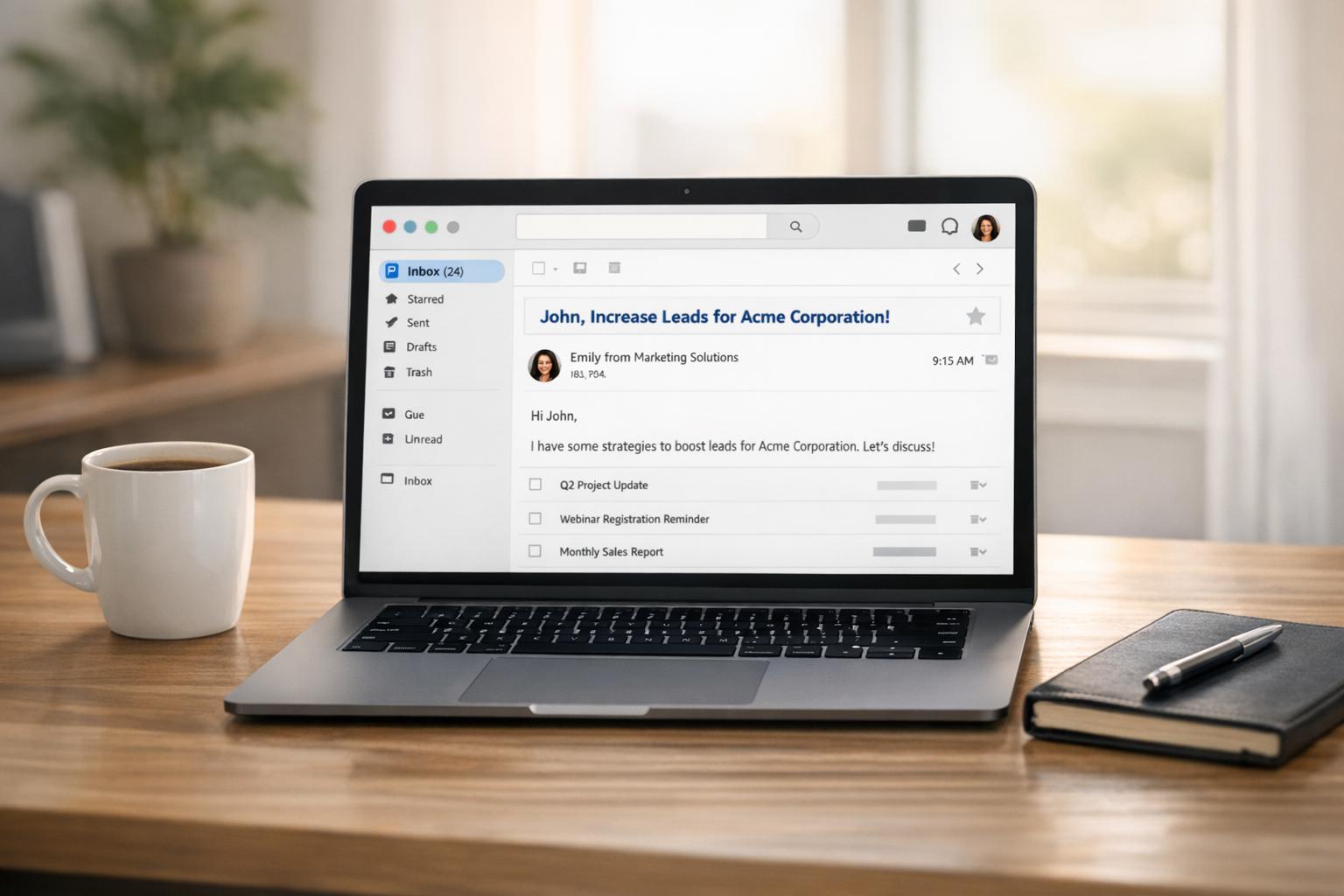

- Subject Lines: Short, personalized subject lines can increase open rates by 10–25%. For example, adding a recipient's name boosts opens by 14%.

- Call-to-Action (CTA) Buttons: Testing CTA text or design can improve click-through rates by up to 27%.

- Send Times: Optimizing when emails are sent can improve engagement by up to 23%.

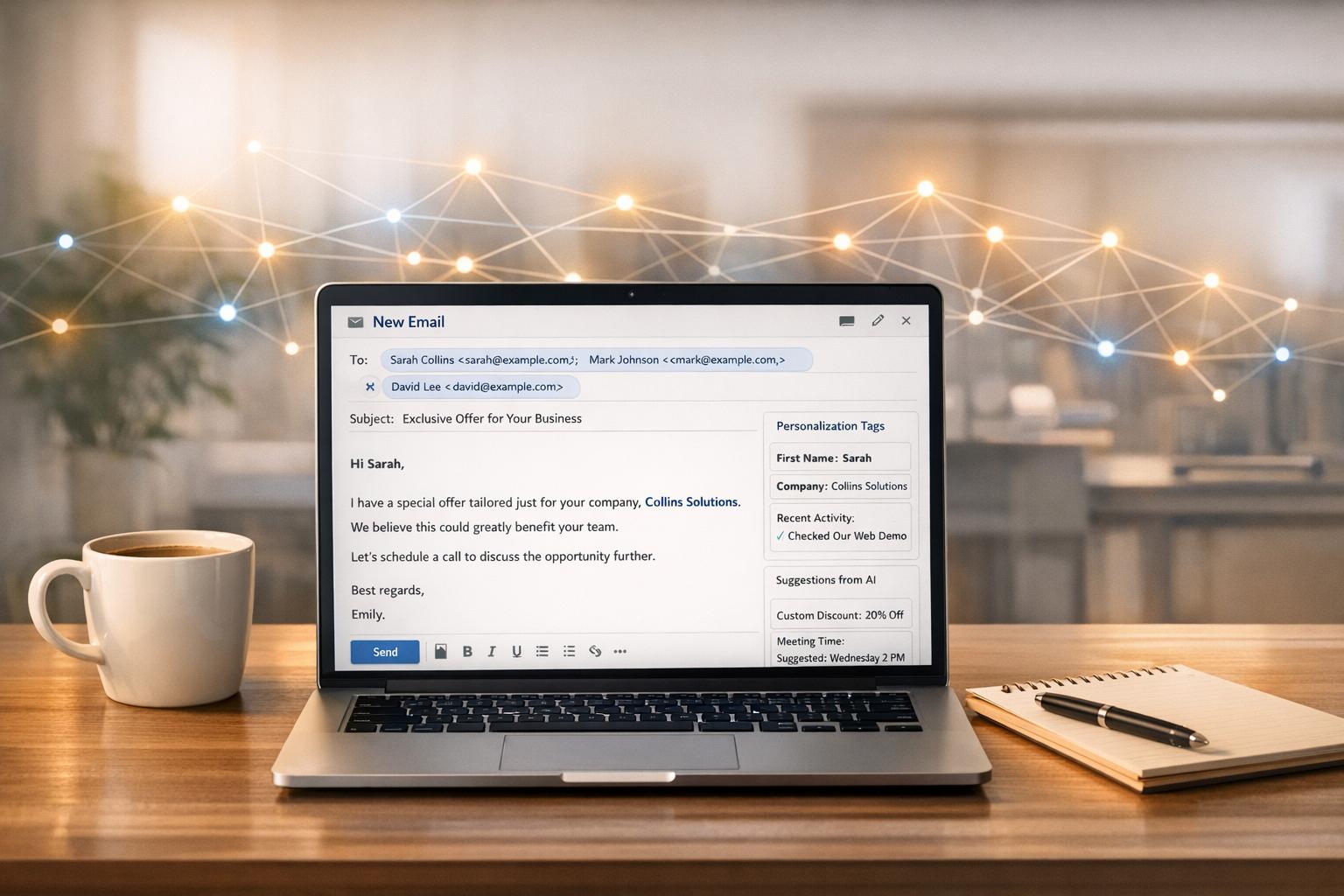

- Personalization: Tailoring content to the recipient, like using their name or location, can result in 6x higher transaction rates.

- Visuals: Testing images or plain-text designs helps identify what drives clicks and conversions.

- Copy Length and Tone: Short, action-driven copy often performs better, especially for B2C audiences.

- Segmentation: Testing within specific audience groups ensures results are relevant and actionable.

- Sample Sizes: Use at least 1,000 recipients per variation for reliable results, and considerably more when testing low-rate metrics such as conversions.

- Track Revenue Metrics: Focus on revenue rather than just opens or clicks to measure true ROI.

- Real-Time Analytics: Use tools that analyze results quickly to optimize campaigns on the fly.

A/B testing isn’t just about improving metrics - it’s about making data-driven decisions to maximize engagement and revenue. By focusing on one variable at a time and using statistically significant sample sizes, you can refine your strategy and achieve consistent growth.

Email A/B Testing Impact: Key Statistics and Performance Metrics


1. Test Subject Lines to Increase Open Rates

Think of your subject line as the gatekeeper to your email. If it doesn’t grab attention in a crowded inbox, the rest of your email might never get read. That’s where A/B testing comes in - it takes the guesswork out and shows you exactly what clicks with your audience.

Impact on ROI

Here’s the deal: better open rates mean more clicks and conversions. And in email marketing, those numbers add up fast. With an average ROI of $42 for every $1 spent, even a slight improvement in your subject line can lead to noticeable revenue growth.
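To see how a subject-line win flows down the funnel, here is a minimal sketch that projects revenue as sends × open rate × click-to-open rate × conversion rate × average order value. Every number in it is hypothetical, and the model assumes the later funnel stages hold steady when opens rise, which is something a real test should confirm rather than assume.

```python
def projected_revenue(sends, open_rate, click_to_open, conversion_rate, avg_order_value):
    """Project campaign revenue by multiplying through each funnel stage."""
    opens = sends * open_rate
    clicks = opens * click_to_open
    orders = clicks * conversion_rate
    return orders * avg_order_value


# Hypothetical campaign: 50,000 sends, 10% click-to-open, 3% conversion, $60 AOV.
baseline = projected_revenue(50_000, 0.20, 0.10, 0.03, 60.0)
# A subject line that lifts opens from 20% to 22% (a 10% relative lift),
# assuming every later stage stays the same.
improved = projected_revenue(50_000, 0.22, 0.10, 0.03, 60.0)

print(f"Baseline revenue: ${baseline:,.2f}")
print(f"Improved revenue: ${improved:,.2f}")
print(f"Difference:       ${improved - baseline:,.2f}")
```

Because each stage multiplies the one before it, a 10% relative lift in opens becomes a 10% lift in projected revenue under this model; that only holds if the extra openers click and buy at the same rate as everyone else.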

Ease of Implementation

Testing your subject lines is surprisingly easy. Most email platforms let you tweak a few words in seconds without changing anything else. This makes it a quick and practical way to start optimizing your email strategy.

Relevance to Email Marketing Goals

Subject lines are all about standing out in a sea of emails. Research on 5 million subject lines found that shorter, three-word subjects had an open rate of 21.2%, compared to 15.8% for longer, seven-word ones. Testing helps you figure out if your audience prefers concise and punchy or more descriptive styles.

"A/B testing subject lines is not just a marketing tactic - it's a data-driven approach to maximizing engagement and conversions." - Alex Killingsworth, Email & Content Marketing Strategist, Online Optimism

Potential for Measurable Improvement

Subject line testing can lead to open rate improvements of 10–25% on average. A real-world example? In 2015, Twilio SendGrid tested their "From" address by comparing "Twilio SendGrid" to "Matt from Twilio SendGrid." The personalized version boosted open rates by 10%.

| Variable | Test Example A | Test Example B | Primary Metric |
|---|---|---|---|
| Length | Short (3–5 words) | Long (10+ words) | Open Rate |
| Personalization | Includes Name | No Name | Open Rate |
| Urgency | "Last Chance!" | "New Styles Available" | Open Rate |
| Tone | Formal/Professional | Casual/Witty | Open Rate |

- Length: Subject lines under 50 characters tend to perform better.

- Personalization: Including a subscriber’s name can increase open rates by over 14%.

- Urgency: Phrases like "Ends tonight" often outperform neutral alternatives.

- Tone: Try experimenting with formal versus casual language to see what resonates.

When testing, change only one variable at a time and use a sample of 1,000–5,000 subscribers per variation. Send both versions simultaneously. For open rates, results after two hours identify the eventual winner about 80% of the time, and waiting 12 hours or more raises that accuracy to roughly 90%.
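Most platforms label a winner for you, but it helps to know what check sits behind that label. A minimal sketch, assuming you can export opens and recipients for each variation: a standard two-proportion z-test on open rates using only Python's standard library. The counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Two-sided z-test for a difference between two rates (e.g. open rates).

    Returns (z statistic, p-value). The standard error uses the pooled rate,
    the usual choice when the null hypothesis is "no difference".
    """
    p_a = successes_a / n_a
    p_b = successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical subject-line test with 2,500 recipients per variation.
z, p = two_proportion_z_test(successes_a=500, n_a=2500, successes_b=575, n_b=2500)
print(f"A: {500 / 2500:.1%}  B: {575 / 2500:.1%}  z={z:.2f}  p={p:.4f}")
print("Significant at 95% confidence" if p < 0.05 else "Not significant yet; keep collecting data")
```

A p-value below 0.05 corresponds to the 95% confidence standard used throughout this article. Note that this answers "is the difference real?", which is a separate question from the platform's "how early can we predict the winner?" accuracy figures.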

2. Test Call-to-Action Buttons to Boost Click-Through Rates

Your call-to-action (CTA) button is where curiosity turns into action. A/B testing can help you figure out which CTA elements truly resonate with your audience and drive clicks. After refining your subject lines, focusing on your CTA is the next logical step to improve engagement.

Impact on ROI

Improving your click-through rate (CTR) can have a direct and measurable impact on your revenue. For instance, switching from a plain text link to an HTML button can increase CTR by 27%. Personalized CTAs perform even better, converting 42% more effectively than generic ones. Take Whisker, a pet care manufacturer, as an example: in 2025, they tested their email CTAs to ensure the messaging on the button aligned with their landing page. This simple adjustment led to a 107% increase in conversion rates and a 112% boost in revenue per user.

Ease of Implementation

Testing CTA buttons is surprisingly simple. You don’t need to overhaul your entire email design - just tweak one element at a time. Most email platforms allow you to experiment with button colors, text, or placement with minimal effort. To prioritize your tests, try using the ICE score - which evaluates impact, confidence, and ease. For example, changing button text from "Read more" to something more specific like "Get the formulas" can deliver significant results without requiring a full redesign.
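A minimal sketch of ICE prioritization, assuming each factor is scored from 1 to 10 and the three scores are averaged (averaging is one common convention; some teams multiply instead). The test ideas and their scores are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class TestIdea:
    name: str
    impact: int      # 1-10: how much could this move the target metric?
    confidence: int  # 1-10: how sure are we that it will work?
    ease: int        # 1-10: how quick and cheap is it to build and run?

    @property
    def ice(self) -> float:
        # Simple average of the three scores.
        return (self.impact + self.confidence + self.ease) / 3


ideas = [
    TestIdea("CTA text: 'Read more' vs. 'Get the formulas'", impact=7, confidence=6, ease=9),
    TestIdea("CTA color: brand blue vs. orange", impact=4, confidence=4, ease=9),
    TestIdea("One primary button vs. three category links", impact=8, confidence=5, ease=5),
]

ranked = sorted(ideas, key=lambda idea: idea.ice, reverse=True)
for idea in ranked:
    print(f"{idea.ice:4.1f}  {idea.name}")
```

The scores are judgment calls, so the value lies less in the exact numbers than in forcing a team to rank ideas consistently before spending send volume on them.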

Relevance to Email Marketing Goals

A CTA has a singular purpose: to get a click. Testing helps you uncover what motivates your audience to take that action. For example, in 2023, SE Ranking's Marketing Team Lead Tetiana Melnychenko ran an A/B test for a re-engagement campaign. She compared a plain-text email with an HTML version featuring an embedded Typeform. The HTML version increased conversion rates from 1.4% to 8.3%. On the flip side, Michael Alexis, CEO of Team Building, found that a simple plain-text email outperformed a polished HTML version in a B2B seasonal campaign, doubling the click-through rate.

"A/B testing is great because it informs and affirms decisions with data and helps you adjust your approach so you're getting the right results." - Michael Alexis, CEO, Team Building

Potential for Measurable Improvement

Small changes to your CTA can lead to noticeable gains. Action-oriented or benefit-focused button copy can boost CTR by around 10%. Systematic testing of email elements often results in 10–25% improvements in key metrics. For example, Campaign Monitor saw a 127% increase in click-throughs after testing various email components, including CTA button text. To make your tests effective, focus on one variable at a time - whether it’s the button’s color, text, placement, or design style - so you can clearly measure the impact of each change.

| CTA Element | Testing Variable Examples | Primary Metric to Track |
|---|---|---|
| Copy | "Shop Now" vs. "Get 20% Off" | Click-Through Rate (CTR) |
| Design | HTML Button vs. Hypertext Link | Click-to-Open Rate (CTOR) |
| Placement | Above the fold vs. Bottom | CTR / Conversion Rate |
| Color | Brand Blue vs. High-Contrast Orange | CTR |
| Quantity | One primary button vs. Three category links | Conversion Rate / Revenue |

For optimal performance, ensure your CTA buttons are built in HTML so they display correctly even when images are disabled. Don’t forget about mobile users - since 55–65% of emails are opened on mobile devices, your buttons should be large enough to tap easily on a smartphone.

3. Test Send Times to Reach Subscribers When They're Most Active

When it comes to email marketing, timing can make or break your campaign. Even the most engaging content can go unnoticed if it lands in your subscriber's inbox at the wrong time. Testing and optimizing your send times can improve performance by up to 23% - a difference that directly impacts your bottom line. Just like fine-tuning subject lines or calls-to-action (CTAs), finding the perfect send time is a critical step in maximizing your ROI.

Impact on ROI

Fine-tuning send times can significantly boost your email marketing results, especially when paired with optimized subject lines and CTAs. In fact, send-time testing has been shown to improve email ROI by anywhere from 35% to 60%. Why? Because sending emails when your audience is most likely to engage leads to higher open rates, more clicks, and ultimately, more conversions.

Take OneRoof, a property platform in New Zealand, as an example. By using AI to determine the best send times for individual users, they achieved a 23% increase in click-to-open rates, a 57% jump in unique clicks, and an impressive 218% rise in total clicks to property listings. Similarly, foodora, a food delivery service, tested send-time optimization during its onboarding campaigns in Austria. The results? A 9% increase in click-through rates, a 41% boost in conversion rates, and a 26% drop in unsubscribe rates.

Ease of Implementation

The good news? Testing send times is one of the simplest adjustments you can make. Most email platforms now offer built-in A/B testing tools, so you don’t need to create new graphics or overhaul your email design. All it takes is scheduling the same email at different times and analyzing the results.

Here’s a straightforward way to get started:

- First, test different days of the week (e.g., Tuesday vs. Thursday) to identify the best day for engagement.

- Next, test various times of day (e.g., 8:00 AM, 11:00 AM, 3:00 PM, 7:00 PM) to pinpoint the optimal hour.

For an extra edge, avoid sending emails at round times (like exactly 9:00 AM) to sidestep delays caused by high ISP traffic.

Relevance to Email Marketing Goals

The best send time depends heavily on your audience and industry. For instance:

- B2B audiences are typically most active during work hours, especially Tuesday through Thursday between 8:00 AM and 10:00 AM, when professionals are planning their day.

- B2C audiences often engage more in the evenings (5:00 PM to 7:00 PM) and on weekends. Recent data even puts open rates at 59% around 8:00 PM and 40% around 11:00 PM.

For global campaigns, scheduling emails to arrive around 9:00 AM local time ensures your message appears at the top of the inbox when recipients start their day.
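A minimal sketch of time-zone-aware scheduling that combines the 9:00 AM local target with the earlier tip about avoiding round send times. It assumes fixed UTC offsets for three hypothetical regions; these offsets are correct for the January example date but ignore daylight saving time, so a production scheduler should use IANA time zones (for example via Python's `zoneinfo`) or your platform's own send-time features.

```python
from datetime import date, datetime, time, timedelta, timezone

# Hypothetical recipient regions with FIXED UTC offsets (valid in January;
# daylight saving time is deliberately not handled here).
REGION_OFFSETS = {
    "New York": timedelta(hours=-5),
    "London": timedelta(hours=0),
    "Tokyo": timedelta(hours=9),
}


def local_send_time_utc(send_date, local_time, utc_offset):
    """Convert a wall-clock send time in a recipient's region to UTC."""
    local_tz = timezone(utc_offset)
    local_dt = datetime.combine(send_date, local_time, tzinfo=local_tz)
    return local_dt.astimezone(timezone.utc)


# Target about 9 AM local time, nudged off the round hour (9:07)
# to avoid the crowd of sends scheduled for exactly 9:00.
target = time(9, 7)
send_date = date(2025, 1, 14)  # a Tuesday

for region, offset in REGION_OFFSETS.items():
    utc_dt = local_send_time_utc(send_date, target, offset)
    print(f"{region:9s} -> {utc_dt:%Y-%m-%d %H:%M} UTC")
```

Scheduling one batch per region, each at its own UTC time, is what makes every recipient see the email near the top of the inbox at the start of their day.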

"A morning campaign might see a 30% open rate, while the same message in the afternoon barely gets noticed. But those differences aren't about the clock - they're about the customer." – Team Braze

Potential for Measurable Improvement

Even small tweaks to send times can yield big results. For example, AI-powered tools can increase click-through rates by 13% and boost revenue by 41% compared to traditional methods. However, success isn’t just about open rates. A send time that generates fewer opens but leads to more conversions is the real winner when it comes to ROI.

Here’s how long you should wait to measure results accurately:

- For open rates, a 2-hour window is often enough to predict the top performer with over 80% accuracy.

- For revenue-focused tests, wait at least 12 hours for 80% accuracy or 24 hours for 90% accuracy.

- Running tests over a full business cycle (7–14 days) helps account for day-of-the-week patterns and other external factors.

| Metric to Optimize | Recommended Wait Time for 80% Accuracy | Recommended Wait Time for 90% Accuracy |
|---|---|---|
| Open Rates | 2 Hours | 12+ Hours |
| Click Rates | 1 Hour | 3+ Hours |
| Revenue | 12 Hours | 24 Hours |

(Source: Mailchimp analysis of 500,000 A/B tests)

Next, we’ll dive into how personalization can take your email marketing ROI to the next level.

4. Test Different Levels of Personalization

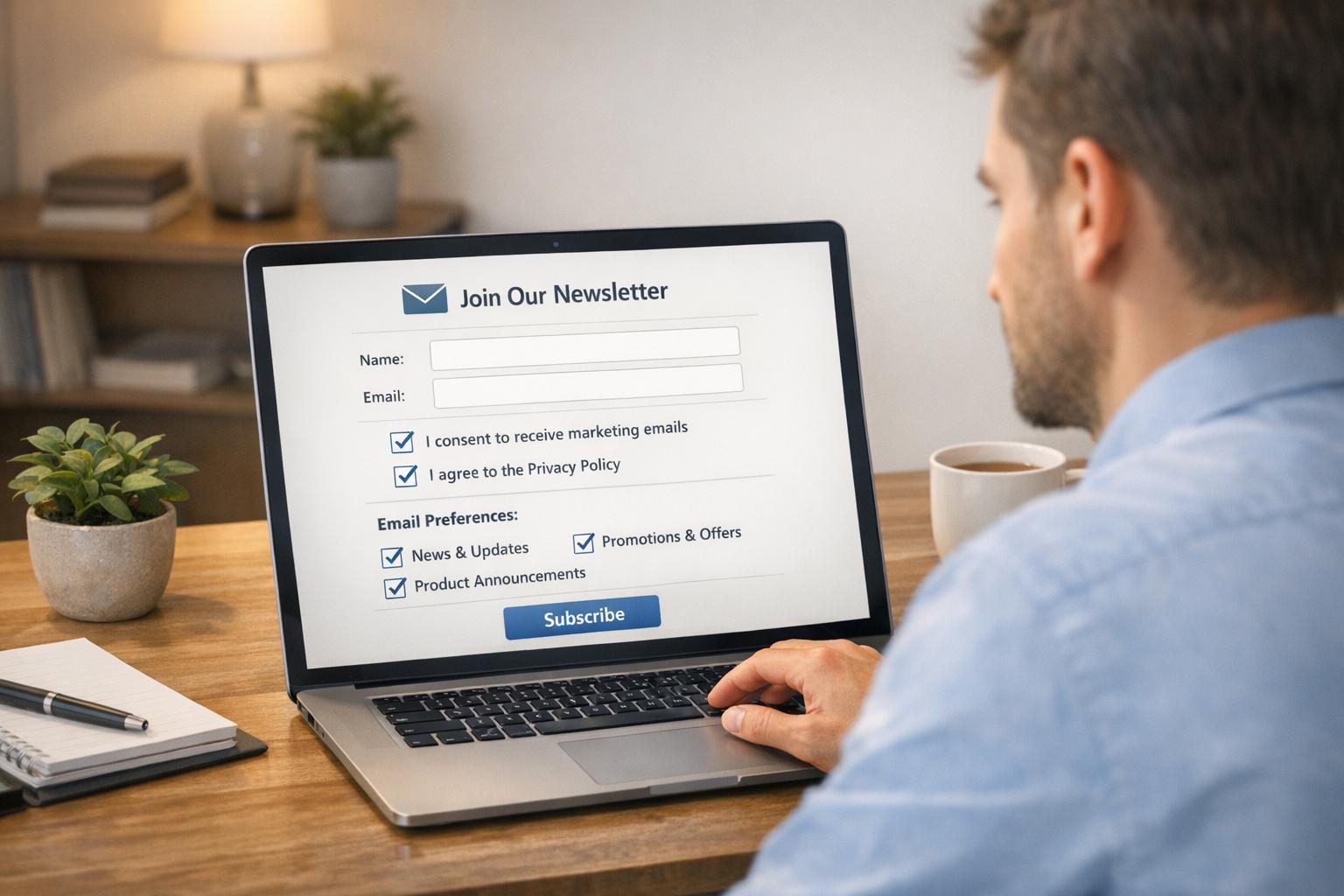

Once you've optimized your send times, the next step to boost engagement and ROI is tweaking your personalization strategy. And no, personalization isn't just about popping a subscriber's name into your email. It spans multiple levels - from basic details like name or company to more advanced approaches like tailoring content based on industry trends, browsing history, AI-driven predictions, or even real-time data insights. Testing these different levels helps you figure out what resonates most with your audience, unlocking better results.

Impact on ROI

The stats are hard to ignore: personalized emails deliver six times higher transaction rates than generic ones. Personalized subject lines can lift open rates by 26%, and personalizing the body text with details like the recipient's company name or location can boost click-through rates by over 14%. Campaign Monitor even experimented with dynamic images that updated based on a subscriber's location (think New York, Sydney, or London), leading to a 29% jump in click-through rates.

Even small tweaks can deliver big wins. In October 2025, Whisker, a pet care brand, aligned its email messaging with its website content. The result? A 107% spike in conversion rates and a 112% increase in revenue per user. Similarly, HubSpot tested sending from a person's name instead of its generic company name. This minor change led to a 0.53% higher open rate and a 0.23% lift in click-through rates, generating 131 additional leads.

Ease of Implementation

If you're new to personalization, start small and build from there. One of the simplest adjustments is changing the "From" name to a real person instead of your brand name. Once that's in place, experiment with subject lines that include the recipient's name, then move on to dynamic content blocks that adjust based on location or purchase history.

To get accurate insights, change only one element at a time. For instance, if you're testing subject lines, just add the recipient's name and keep everything else the same. This way, you can pinpoint what caused the performance shift. Additionally, tailor your tests to specific audience segments - what works for loyal, high-value customers might not click with first-time buyers.
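A minimal sketch of a name-in-subject-line test built with Python's `string.Template`. The subscriber records, subject wording, and fallback word are hypothetical. The design choice worth copying is that the fallback for a missing name reproduces the control subject exactly, so the two variations differ only in the name.

```python
from string import Template

# Hypothetical subscriber records; some have no first name on file.
subscribers = [
    {"email": "ana@example.com", "first_name": "Ana"},
    {"email": "sam@example.com", "first_name": ""},
    {"email": "lee@example.com"},
]

# Variant A (control) and B (personalized) share every other word.
SUBJECT_A = Template("Hi there, your spring picks are here")
SUBJECT_B = Template("Hi $name, your spring picks are here")
FALLBACK_NAME = "there"  # makes B identical to A when no name is available


def render_subject(template, subscriber):
    """Fill in the subscriber's first name, falling back when it is missing or blank."""
    name = (subscriber.get("first_name") or "").strip() or FALLBACK_NAME
    return template.substitute(name=name)


for s in subscribers:
    print(f"{s['email']:16s} A: {render_subject(SUBJECT_A, s)} | B: {render_subject(SUBJECT_B, s)}")
```

If many records lack a name, the personalized group partly receives the control subject, which dilutes the measured effect; check how complete the name field is before reading too much into a small lift.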

Relevance to Email Marketing Goals

Personalization helps emails stand out in crowded inboxes. Whether it's tweaking subject lines, using dynamic images, or offering location-based deals, these strategies directly impact clicks, conversions, and revenue.

"The subscriber's name is the single most impactful word you can add to your subject line, increasing opens by over 14%." – Campaign Monitor

This underscores how even small personal touches can make a big difference.

Potential for Measurable Improvement

To measure the success of your personalization tests, focus on the right metrics. For instance, monitor open rates when testing subject lines or sender names. For changes in body copy, images, or offers, track click-through rates. And don’t stop at engagement metrics - keep an eye on transaction rates and revenue. After all, an email that drives fewer opens but results in more purchases is a clear winner.

| Personalization Variable | Primary Metric | Expected Goal |
|---|---|---|
| Subject Line (Name/Location) | Open Rate | Increase Inbox Visibility |
| Sender Name (Person vs. Brand) | Open Rate | Build Trust |
| Dynamic Images (Location/Interest) | Click-Through Rate | Improve Relevance |
| Product Recommendations | Conversion Rate/Revenue | Drive Purchases |
| Body Copy (Company/Past Behavior) | Click-to-Open Rate | Increase Engagement |

5. Test Image Types to See What Drives Conversions

Once you've tailored your email content through personalization, it's time to focus on visuals. It's often claimed that the human brain processes images 60,000 times faster than text; whatever the exact figure, the right image can make or break your email's performance. Testing different styles of visuals helps you figure out what truly connects with your audience.

Impact on ROI

Images can have a powerful effect on conversion rates, but they don't always work the way you'd expect. In 2023, Curt Nichols, founder of Glade Optics, ran an experiment comparing traditional marketing emails featuring flashy product photos with plain-text emails that mimicked personal messages. Surprisingly, the plain-text emails, stripped of visual distractions, drove higher engagement. This suggests that simplicity can outshine elaborate visuals, especially when images pull attention away from your call-to-action.

Even small improvements in click-through rates can translate into big revenue gains. Considering email marketing typically delivers an ROI of $36 to $42 for every $1 spent, optimizing visuals is well worth the effort. The challenge lies in finding that sweet spot where your visuals enhance your message instead of overshadowing it.

Ease of Implementation

Start simple: test one visual element at a time. For example:

- Compare a clean product photo against a lifestyle image showing the product in action.

- Experiment with an animated GIF versus a static hero image to see if motion grabs more attention.

Keep everything else - like subject lines and copy - consistent during these tests to isolate the impact of the visuals. And don’t forget mobile users! Compress your images using tools like tinypng.com and include alt text to ensure accessibility.
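Alt text is easy to lose when swapping images between variations. A minimal sketch using Python's standard `html.parser` to flag `<img>` tags that have no `alt` attribute before a test goes out. The email HTML is hypothetical, and an empty `alt=""` is deliberately allowed, because that is the accepted way to mark purely decorative images such as spacers.

```python
from html.parser import HTMLParser


class ImageAltChecker(HTMLParser):
    """Collects the src of every <img> tag that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing_alt = []

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "img" and "alt" not in attributes:
            self.missing_alt.append(attributes.get("src") or "<no src>")


def images_missing_alt(html):
    checker = ImageAltChecker()
    checker.feed(html)
    checker.close()
    return checker.missing_alt


# Hypothetical email body containing both hero-image variations.
email_html = """
<table><tr><td>
  <img src="hero-product.jpg" alt="Blue trail backpack on a white background">
  <img src="hero-lifestyle.gif">
  <img src="spacer.gif" alt="">
</td></tr></table>
"""

print(images_missing_alt(email_html))
```

Running a check like this on both variations keeps accessibility from becoming an accidental second variable in the test.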

Relevance to Email Marketing Goals

Different types of images serve unique purposes. A product photo might highlight specific features, while a lifestyle image can evoke emotions and help readers picture themselves using the product. Similarly, testing illustrations versus standard photography could reveal which style resonates better with your audience and aligns with your brand's identity.

"The right image selection can increase engagement with your recipients. Make sure your images reflect your message and your brand." – Twilio SendGrid

Potential for Measurable Improvement

Track the metrics that matter most for your campaign. When testing visuals like hero images or GIFs, monitor click-through rates, click-to-open rates, and, most importantly, conversions and revenue. Sometimes an image that attracts fewer clicks still drives more purchases, making it the real winner. A/B testing can improve key metrics by 10–25%, and letting a test run for at least 24 hours typically gives about 90% accuracy in predicting the revenue winner.

With these insights into visual optimization, you're set to dive into testing copy length and tone in the next section.

6. Test Copy Length and Tone to Keep Readers Engaged

Once you've fine-tuned visuals and CTAs, it's time to turn your attention to email copy. The words you choose can make or break your campaign - either enticing readers to engage or prompting them to hit delete. The key is understanding what resonates with your audience and aligns with your message.

Impact on ROI

A well-crafted tone can do wonders for your conversions. For example, using a positive tone has been shown to boost conversion rates by 22%. Considering that email marketing delivers an impressive $42 return for every $1 spent, even small tweaks to your copy can translate into meaningful revenue gains. By testing different copy variations, you can directly influence how readers respond, making this a practical and highly impactful area to optimize.

Ease of Implementation

Testing copy is quick and simple. Small changes - like swapping out words, adjusting tone, or shortening text - can be done in just minutes. Start with one variable at a time. For instance, compare a concise, punchy email to a more detailed version, or experiment with a conversational first-person tone versus a formal third-person style. Keep everything else consistent - subject line, CTA, and visuals - so you can pinpoint exactly what drives the difference in performance.

Relevance to Email Marketing Goals

Different audiences have different expectations. B2B readers often prefer detailed, text-heavy emails, while B2C audiences typically respond better to visually engaging emails with minimal, snappy copy. Testing allows you to uncover whether your audience wants the full story or just the highlights. With the average recipient spending less than 11 seconds on an email, your copy needs to grab attention instantly.
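A rough screen for copy-length variations: estimate each version's reading time from its word count and compare it with the 11-second figure above. The 200 words-per-minute reading speed is an assumption, not a figure from this article, and reading time is only a proxy; the A/B test itself decides which length wins. Both copy samples are hypothetical.

```python
WORDS_PER_MINUTE = 200  # assumption: a rough average reading speed
ATTENTION_SECONDS = 11  # average time spent on an email, as cited above


def reading_seconds(text, wpm=WORDS_PER_MINUTE):
    """Estimate reading time in seconds from a simple whitespace word count."""
    return len(text.split()) / wpm * 60


short_copy = "Our spring sale ends Sunday. Take 20% off every jacket and boot. Get the deal before it's gone."
long_copy = (
    "Spring is finally here, and our design team has spent months rethinking the "
    "jackets and boots you asked for. Every piece is tested on real trails, backed by "
    "a two-year warranty, and made with recycled materials wherever we could. "
    "Our spring sale ends Sunday, so take 20% off every jacket and boot while sizes last. "
    "Get the deal before it's gone."
)

for label, text in (("Short", short_copy), ("Long", long_copy)):
    seconds = reading_seconds(text)
    verdict = "fits" if seconds <= ATTENTION_SECONDS else "likely skimmed"
    print(f"{label}: {len(text.split())} words, ~{seconds:.1f}s ({verdict})")
```

A long version that overruns the budget is not automatically a loser, especially for B2B readers, but it should put its key message and CTA within the first few lines.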

Potential for Measurable Improvement

Like other A/B tests, tweaking your copy can yield measurable results. On average, A/B testing improves metrics by 10–25%, and even small copy adjustments can lead to a 1–2% increase in sales. Focus on actionable, benefit-driven language to boost engagement. For example, phrases like "Get the formulas" tend to perform better than generic prompts like "Read more". Keep an eye on metrics like click-through rates, conversions, and overall revenue to determine whether shorter, action-driven copy or longer, detailed messaging works best for your goals.


7. Segment Your Audience Before Running Tests

After testing subject lines, CTAs, and copy, it’s time to take things a step further by segmenting your audience. Why? Because testing with a broad, undifferentiated list can lead to skewed results. Different subscriber groups react differently, and segmenting ensures your tests are more precise. This approach doesn’t just sharpen your strategy - it also drives better ROI.

Impact on ROI

Segmented testing has the power to significantly influence your revenue. By tailoring tests to specific groups, you can make sure the winning variant actually works for your most valuable customers. For example, a variant that seems like a winner overall might underperform with your high-spending customers. Segmenting helps you optimize for real revenue growth, not just surface-level metrics like clicks or opens.

Ease of Implementation

The good news? Most email platforms make segmentation simple. You can split your audience into "A" and "B" groups based on factors like behavior, purchase history, or demographics. The key is to find the right balance - your segments should be specific enough to be meaningful, but still large enough to provide reliable data. Aim for groups of 1,000–5,000 subscribers. For even larger lists, try the 25% rule: test on 25% of the segment, then send the winning version to the remaining 75%.
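A minimal sketch of the 25% rule for a single segment, assuming the 25% test portion is split evenly between the two variations (12.5% each) and that assignment is random, so neither group skews toward newer or more active subscribers. The addresses are placeholders, and the fixed seed only makes the split reproducible.

```python
import random


def split_for_test(subscribers, test_fraction=0.25, seed=42):
    """Randomly split a segment into variation A, variation B, and a remainder.

    test_fraction of the segment is divided evenly between A and B; the
    remainder later receives whichever variation wins.
    """
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)
    test_size = int(len(shuffled) * test_fraction)
    half = test_size // 2
    group_a = shuffled[:half]
    group_b = shuffled[half:2 * half]
    remainder = shuffled[2 * half:]
    return group_a, group_b, remainder


segment = [f"user{i}@example.com" for i in range(20_000)]
a, b, rest = split_for_test(segment)
print(len(a), len(b), len(rest))
```

With a 20,000-subscriber segment, each variation gets 2,500 recipients, inside the 1,000–5,000 range suggested above, while 15,000 subscribers wait for the winner.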

Relevance to Email Marketing Goals

Segmentation can directly impact your core marketing objectives.

"Different groups respond differently. What works for new subscribers might fail with loyal customers." - Monday.com

By testing within segments, you can uncover what resonates most with each group. For instance, high-value customers might respond better to exclusive offers, while occasional buyers may prefer bigger discounts. Plus, better engagement from targeted emails can improve your deliverability rates. With 39% of brands skipping segmented email tests altogether, there’s a huge opportunity to stand out.

Potential for Measurable Improvement

Segmented A/B tests deliver actionable insights. For example, using dynamic images tailored to a subscriber’s location increased click-through rates by 29%. Even small tweaks, like including a subscriber’s name in the subject line, can lift open rates by over 14%. Analyzing results by segment - even after a broader test - helps ensure your winning variant truly connects with every group.
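A minimal sketch of breaking a finished test down by segment, using hypothetical aggregated counts. It illustrates the risk described under Impact on ROI: variation B edges ahead overall but loses with loyal customers. Each segment is smaller than the full test, so segment-level differences still need their own significance check.

```python
from collections import defaultdict

# Hypothetical aggregated results: (segment, variant, recipients, clicks)
RESULTS = [
    ("new subscribers", "A", 3000, 90),
    ("new subscribers", "B", 3000, 135),
    ("loyal customers", "A", 2000, 160),
    ("loyal customers", "B", 2000, 120),
]


def overall_click_rates(rows):
    """Click rate per variant across all segments combined."""
    totals = defaultdict(lambda: [0, 0])  # variant -> [recipients, clicks]
    for _segment, variant, recipients, clicks in rows:
        totals[variant][0] += recipients
        totals[variant][1] += clicks
    return {variant: clicks / recipients for variant, (recipients, clicks) in totals.items()}


def click_rates_by_segment(rows):
    """Click rate per variant within each segment."""
    rates = defaultdict(dict)
    for segment, variant, recipients, clicks in rows:
        rates[segment][variant] = clicks / recipients
    return rates


overall = overall_click_rates(RESULTS)
print("Overall:", {v: f"{r:.1%}" for v, r in overall.items()})

by_segment = click_rates_by_segment(RESULTS)
for segment, rates in by_segment.items():
    winner = max(rates, key=rates.get)
    print(f"{segment}: winner {winner}", {v: f"{r:.1%}" for v, r in rates.items()})
```

If your loyal customers drive most of your revenue, rolling out the overall winner here would quietly cost you money, which is exactly why results should be read per segment.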

8. Use Large Enough Sample Sizes for Reliable Results

When it comes to A/B testing, the size of your test group can make or break the accuracy of your results. Small sample sizes often lead to misleading conclusions, with "winners" that may not actually perform well in the real world. These so-called "fake winners" can cost you revenue when implemented across your entire audience because their apparent success is often just random chance.

Impact on ROI

Getting your sample size right is crucial for protecting your revenue. If your test group is too small, you might end up choosing a variant that doesn’t perform well. Email marketing expert Jeanne Jennings emphasizes this point:

"Sample sizes that are way too small to detect a meaningful difference, even when one exists... understanding how to calculate your ideal sample size isn't just nice, it's non-negotiable".

For example, if your email typically converts at a 2% rate, you'll need about 20,000 recipients per variation (40,000 total) to reliably detect a 20% relative improvement (from 2% to 2.4%) at 95% confidence and 80% statistical power. This makes calculating the right sample size a must before rolling out your campaign.

Ease of Implementation

Luckily, figuring out the right sample size doesn’t have to be complicated. Free online tools such as Evan Miller’s sample size calculator or Optimizely’s can do the math for you. For conversion-focused tests with low baseline rates, a useful rule of thumb is the "20,000 Rule": test each variation on at least 20,000 subscribers to uncover meaningful differences. If your email list is too small to meet this threshold, you can pool data from multiple sends. For instance, you might test the same subject line style across several weekly newsletters to gather enough data for reliable insights.

Relevance to Email Marketing Goals

Unlike website tests, where new visitors continuously add to your sample, email campaigns go to a fixed audience. This means careful planning is essential. The industry standard for statistical confidence is 95%: if the variations truly performed the same, a difference as large as the one observed would appear by chance less than 5% of the time. Since about 85% of email interactions happen within the first 24 hours after sending, you’ll quickly know whether you’ve gathered enough data. For mid-sized lists (10,000–50,000 subscribers), splitting your audience 50/50 between variations maximizes the sample for each. If your list is smaller, aggregating results over multiple campaigns is a smart way to reach reliable conclusions.
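A minimal sketch of pooling one recurring test across several sends, assuming the same two subject-line styles were tested in each newsletter with a random 50/50 split. All counts are hypothetical. Pooling assumes the effect is reasonably stable from week to week, so glance at the weekly results for a reversal before trusting the combined numbers, which can then go into the same significance test you would use for a single send.

```python
from dataclasses import dataclass


@dataclass
class SendResult:
    variant: str     # e.g. "A" = short subject style, "B" = long style (hypothetical)
    recipients: int
    opens: int


def pool_by_variant(sends):
    """Sum recipients and opens per variant across several campaigns."""
    pooled = {}
    for s in sends:
        recipients, opens = pooled.get(s.variant, (0, 0))
        pooled[s.variant] = (recipients + s.recipients, opens + s.opens)
    return pooled


# Hypothetical results from three weekly newsletters, each split 50/50.
weekly = [
    SendResult("A", 1500, 330), SendResult("B", 1500, 300),
    SendResult("A", 1480, 310), SendResult("B", 1490, 295),
    SendResult("A", 1510, 340), SendResult("B", 1505, 305),
]

for variant, (recipients, opens) in sorted(pool_by_variant(weekly).items()):
    print(f"{variant}: {opens}/{recipients} opens = {opens / recipients:.1%}")
```

Three sends of roughly 1,500 per variation add up to about 4,500 each, enough to detect effects that no single newsletter could.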

Potential for Measurable Improvement

Using proper sample sizes ensures you don’t waste resources on ineffective strategies. Take Brava Fabrics, for example. In October 2024, they tested three sign-up offers: a 10% discount, a $300 contest entry, and a $1,000 contest entry. Thanks to a large enough test group, they discovered that all three offers performed equally well. This insight allowed them to choose the contest offer over a universal discount, saving their marketing budget while maintaining the same conversion rate. Without an adequate sample size, they might have missed this valuable insight. In essence, statistical significance acts as your truth detector, helping you distinguish real trends from random noise.

9. Track Revenue Metrics, Not Just Opens and Clicks

Impact on ROI

Once you've perfected your subject lines, CTAs, and personalization, the next big step is to measure what truly matters: your return on investment (ROI). While open rates and clicks can tell you how engaged your audience is, they don’t paint the full picture of profitability. Sometimes, the email variant that gets more clicks doesn’t translate into more conversions. Take the example of fashion retailer River Island. In October 2025, they shifted their A/B testing strategy to focus on revenue-based outcomes rather than just engagement metrics. By tweaking their sending schedule and content types with revenue as the key metric, they saw a 30.9% boost in revenue per email and a 30.7% rise in orders per email. This shift from tracking clicks to focusing on revenue metrics turned earlier tests into real business growth.

Ease of Implementation

Linking your email platform to sales data is the first step toward tracking revenue metrics effectively. Start by calculating Revenue Per Email (RPE) - divide your total revenue by the number of emails sent. Use this figure to compare performance across different email variants and pinpoint the one driving the highest revenue. Another crucial metric is Average Order Value (AOV), which you can calculate by dividing total revenue by the number of orders.

"Tracking AOV clearly shows which version is better for your bottom line. Without factoring in AOV, you risk choosing the wrong winner".

Interestingly, the email that gets fewer clicks might actually generate more revenue if it delivers a higher AOV.
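A minimal sketch of the two calculations above, using hypothetical numbers in which variation B earns fewer clicks but more revenue. It assumes your platform or e-commerce integration can attribute orders and revenue to each variation; RPE uses emails sent as the denominator, as defined above.

```python
def summarize(variant, emails_sent, clicks, orders, revenue):
    """Engagement and revenue metrics for one test variation."""
    return {
        "variant": variant,
        "ctr": clicks / emails_sent,   # click-through rate
        "rpe": revenue / emails_sent,  # Revenue Per Email
        "aov": revenue / orders,       # Average Order Value
    }


# Hypothetical results: B gets fewer clicks but larger orders.
a = summarize("A", emails_sent=10_000, clicks=400, orders=40, revenue=2_400.0)
b = summarize("B", emails_sent=10_000, clicks=340, orders=36, revenue=3_240.0)

for s in (a, b):
    print(f"{s['variant']}: CTR {s['ctr']:.1%}  RPE ${s['rpe']:.3f}  AOV ${s['aov']:.2f}")

winner = max((a, b), key=lambda s: s["rpe"])
print("Revenue winner:", winner["variant"])
```

Judged on clicks alone, A would win; judged on revenue per email, B earns about a third more from the same number of sends, driven by its higher AOV.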

Relevance to Email Marketing Goals

The simplicity of implementing revenue metrics leads to a bigger question: why prioritize them over traditional engagement metrics? While 59% of marketing teams still lean on opens and clicks, a growing number are shifting toward revenue-focused strategies. For instance, 41% of B2B marketers now tie their campaigns directly to revenue, while 44% monitor Marketing Qualified Leads (MQLs) and conversion rates as their primary success metrics. Opens and clicks are helpful, but revenue attribution captures the true impact of your campaigns. After all, email marketing delivers an impressive ROI of $36 to $42 for every $1 spent. Ian Donnelly, Senior Content Marketing Manager at Bloomreach, sums it up well:

"A subject line that improves open rates by 2% might be statistically valid, but that doesn't mean it moves the needle on conversions, revenue, or long-term customer engagement".

Potential for Measurable Improvement

Focusing on revenue metrics can uncover opportunities that traditional engagement data often overlooks. In 2025, pet care brand Whisker tested personalized messaging throughout their customer journey, aiming to align email content with their website experience. The result? A 112% increase in revenue per user and a 107% lift in conversion rates. To get a complete view of your business growth, monitor metrics like Total Revenue, AOV, Revenue per Visitor, and Lifetime Value. These insights reveal which strategies truly drive growth - beyond just grabbing attention.

10. Use Real-Time Analytics to Improve Tests Over Time

Impact on ROI

Real-time analytics can be a game-changer for your testing process, especially when your focus is on revenue metrics. By speeding up the testing period, these tools help you quickly identify high-performing variations and phase out weaker ones before your campaign window closes. For instance, Mailchimp’s analysis of 500,000 A/B tests revealed that click data reaches 90% predictive accuracy within just 3 hours, while open rates take over 12 hours to hit the same benchmark. This is crucial because over 50% of emails are opened within the first six hours of being sent. With this kind of speed, you can roll out the winning version to the majority of your audience sooner, ensuring that more people see optimized content rather than splitting traffic evenly between test variants.

Ease of Implementation

Most popular email platforms now come equipped with real-time dashboards that make implementation straightforward. These dashboards track the confidence levels of your test results and can automatically deploy the winning variation once a pre-set confidence threshold - often 95% - is reached. For campaigns that are time-sensitive, focusing on click-through rates as your primary success metric is a smart move since this data stabilizes faster than revenue metrics. If revenue is your key focus, you’ll need to allow at least 12 hours for 80% accuracy or 24 hours for 90% accuracy. Configuring your tools to switch to the best-performing variant after your chosen timeframe ensures that your audience sees the most effective content as quickly as possible. This approach builds on previous optimization strategies, enabling faster and more effective campaign adjustments.
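A minimal sketch of an auto-deploy rule that combines both conditions from this paragraph: a minimum wait for the chosen metric and a confidence threshold. The wait times come from the Mailchimp figures cited in the send-time section; `confidence` is assumed to be whatever confidence value your platform reports for the leading variation, and the function and parameter names are hypothetical.

```python
# Minimum wait (hours) per (metric, target accuracy %), from the Mailchimp figures cited earlier.
MIN_WAIT_HOURS = {
    ("opens", 80): 2, ("opens", 90): 12,
    ("clicks", 80): 1, ("clicks", 90): 3,
    ("revenue", 80): 12, ("revenue", 90): 24,
}


def ready_to_deploy(metric, hours_elapsed, confidence,
                    target_accuracy_pct=90, confidence_threshold=0.95):
    """True once the test has run long enough AND the leader is confident enough."""
    min_wait = MIN_WAIT_HOURS[(metric, target_accuracy_pct)]
    return hours_elapsed >= min_wait and confidence >= confidence_threshold


print(ready_to_deploy("clicks", hours_elapsed=4, confidence=0.97))   # True: both conditions met
print(ready_to_deploy("revenue", hours_elapsed=4, confidence=0.97))  # False: too early for revenue
```

Requiring both conditions guards against the two common failure modes: deploying on a confident-looking result before the slow metric has settled, and waiting the full window for a difference that never becomes significant.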

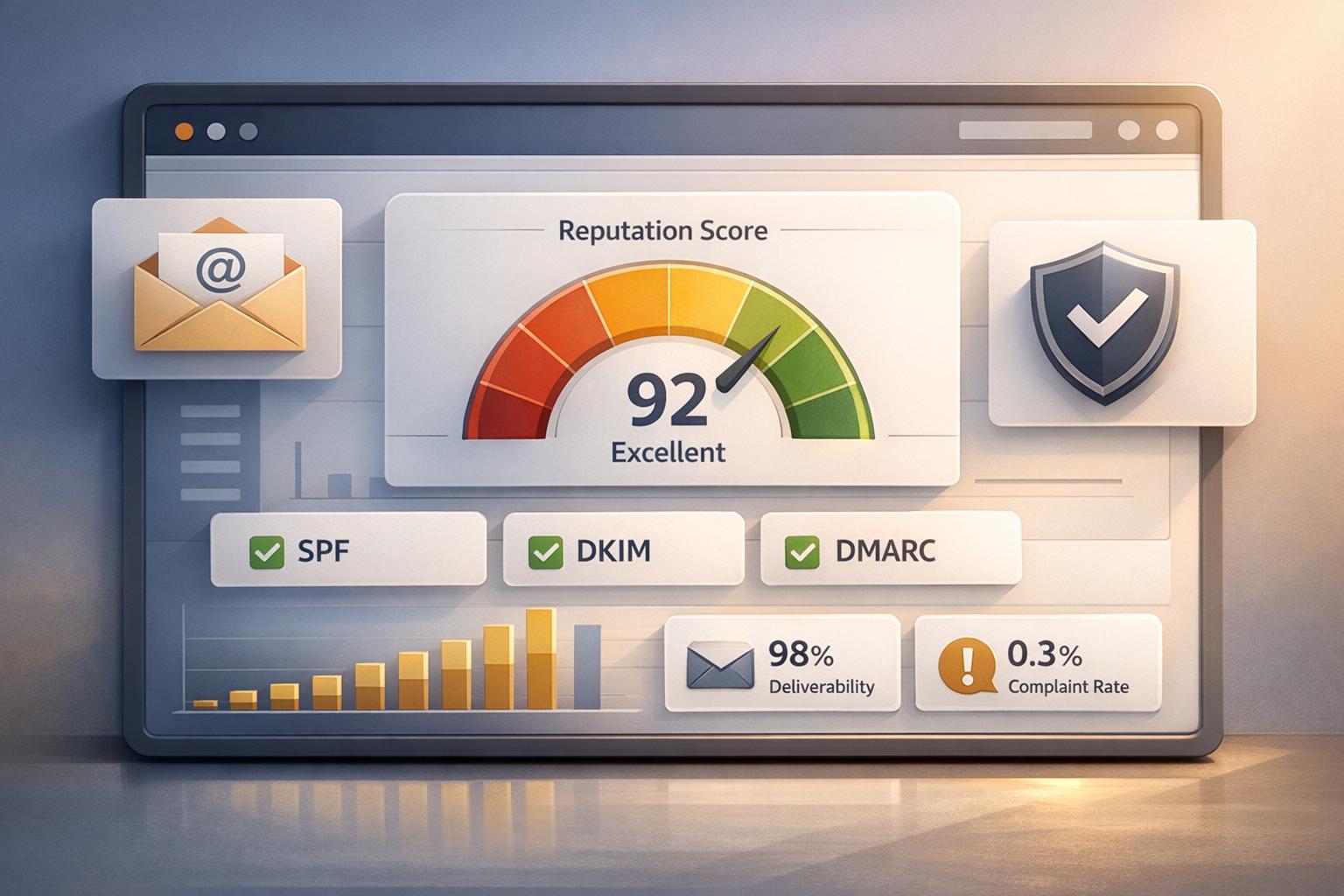

Relevance to Email Marketing Goals

Real-time monitoring doesn’t just improve performance; it also safeguards your sender reputation. By catching issues like broken links or rendering errors early, you can prevent these problems from affecting your entire mailing list. Beyond troubleshooting, real-time data is a powerful ally for revenue-driven campaigns. Many advanced platforms now use AI to analyze individual recipient behavior during tests, allowing them to deliver the best variation for each subscriber rather than relying on a one-size-fits-all approach. This kind of personalization ensures that even the minority of recipients who respond better to the alternate variation (say, 30% of your audience) receive content that resonates with them. Leveraging real-time data in this way supports continuous improvement, which is critical for running high-performing campaigns.

Potential for Measurable Improvement

By combining speed and precision, real-time analytics can meaningfully improve campaign results. You identify winners sooner, so more of your audience receives the better variant. Each test’s results also feed into the next one: a winning subject-line style, for example, becomes the control for your next experiment. Over time, this feedback loop lifts conversion rates across your campaigns.

Sample Size and Confidence Level Requirements

Reliable A/B tests need enough recipients in each variation. With too few subscribers, random noise can look like a winner. Aim for at least 1,000 recipients per variation, although the exact number depends on your test parameters, especially the smallest improvement you want to detect. To calculate the required sample size, you need four inputs: your baseline conversion rate (such as your current open rate), the smallest improvement you want to identify (the Minimum Detectable Effect, or MDE), your desired confidence level (commonly 95%), and statistical power (usually 80%).

A 95% confidence level is the industry standard. It means that if there were truly no difference between your variations, the test would still declare a winner only 5% of the time (a false positive). For example, say your baseline open rate is 40% and you want to detect a 20% relative improvement (from 40% to 48%). You’ll need 592 subscribers per variation (1,184 total). To detect a 10% improvement (from 40% to 44%), that number jumps to 2,315 subscribers per variation.

Here’s a breakdown of the subscriber requirements based on different target improvements:

| Relative Improvement | Sample Size per Variation | Total Subscribers Needed |
|---|---|---|
| 10% | 2,315 | 4,630 |
| 20% | 592 | 1,184 |
| 30% | 268 | 536 |
| 50% | 94 | 188 |

These calculations assume a 40% baseline open rate, a 95% confidence level, and 80% statistical power.
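Figures like those in the table can be reproduced with a standard two-proportion sample-size formula. This Python sketch uses one common variant, in which the baseline rate stands in for both groups under the no-difference hypothesis. That choice is our assumption about how such numbers are typically produced. The 20% row comes out almost exactly, and the other rows land within a few percent, because calculators differ slightly in their variance approximations and rounding.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_variation(baseline, relative_lift, confidence=0.95, power=0.80):
    """Recipients needed in EACH variation to detect `relative_lift` over `baseline`.

    Two-sided test of two proportions; the no-difference spread uses the baseline
    rate for both groups (one common calculator convention, assumed here).
    """
    target = baseline * (1 + relative_lift)  # e.g. 40% * 1.2 = 48%
    delta = target - baseline
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # ~1.96 for 95%
    z_beta = NormalDist().inv_cdf(power)                       # ~0.84 for 80% power
    sd_null = sqrt(2 * baseline * (1 - baseline))
    sd_alt = sqrt(baseline * (1 - baseline) + target * (1 - target))
    return ceil(((z_alpha * sd_null + z_beta * sd_alt) / delta) ** 2)


for lift in (0.10, 0.20, 0.30, 0.50):
    n = sample_size_per_variation(0.40, lift)
    print(f"{lift:.0%} lift: {n:,} per variation, {2 * n:,} total")
```

Raising `power` or `confidence`, or lowering `relative_lift`, increases the required sample, which is why small improvements need large lists.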

Only about 1 in 8 A/B tests produces a statistically significant result, so make sure sample size isn’t the reason yours falls short. An underpowered test can miss a real difference between variations. Sizing each test properly is what turns it into an actionable insight that drives ROI.

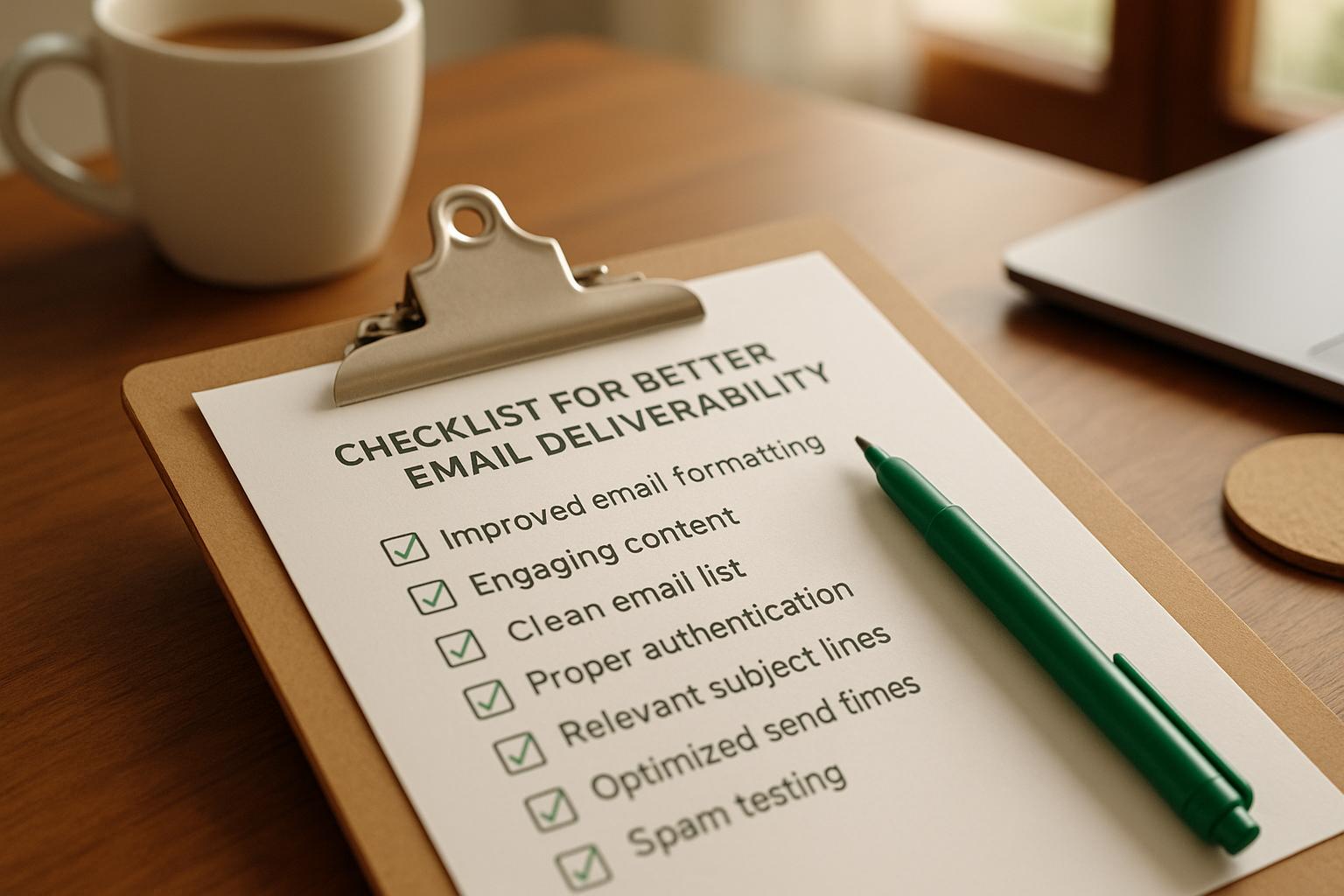

How Breaker Supports A/B Testing

Breaker makes A/B testing simpler and more effective for B2B marketers by offering an all-in-one platform. Its real-time analytics dashboard lets you track metrics like opens, clicks, and click-through rates as they happen, helping you quickly pinpoint which variation is performing better.
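The metrics such a dashboard reports are simple ratios. This small Python sketch assumes the common convention of dividing by delivered emails; some tools divide by sent emails instead. It is illustrative only and not Breaker’s API.

```python
def engagement_metrics(delivered, unique_opens, unique_clicks):
    """Per-variation engagement rates as fractions of delivered emails (and of opens for CTOR)."""
    if delivered <= 0:
        raise ValueError("delivered must be positive")
    return {
        "open_rate": unique_opens / delivered,
        "click_through_rate": unique_clicks / delivered,
        # Click-to-open rate: clicks among openers, which separates the body and CTA
        # from the subject line's influence on opens.
        "click_to_open_rate": unique_clicks / unique_opens if unique_opens else 0.0,
    }


for name, counts in {"A": (1000, 400, 50), "B": (1000, 380, 62)}.items():
    print(name, {k: f"{v:.1%}" for k, v in engagement_metrics(*counts).items()})
```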

Breaker’s audience segmentation tools let you build tests around subscriber data such as purchase history, demographics, interests, or geographic location. For example, you could compare how new subscribers respond versus long-time customers. Segmentation also helps you make sure the groups receiving each variation are balanced in behavior and demographics, which reduces bias and leads to more trustworthy results.
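One general way to keep test groups balanced is stratified randomization: split each segment evenly between the variations, so both groups contain the same mix. This Python sketch illustrates that technique and is not Breaker’s implementation. The record format and `segment_of` function are hypothetical.

```python
import random
from collections import defaultdict


def stratified_split(subscribers, segment_of, seed=7):
    """Split subscribers into groups A and B so every segment is divided about 50/50."""
    by_segment = defaultdict(list)
    for sub in subscribers:
        by_segment[segment_of(sub)].append(sub)

    rng = random.Random(seed)
    group_a, group_b = [], []
    for members in by_segment.values():
        rng.shuffle(members)                     # random order within each segment
        half = len(members) // 2
        if len(members) % 2 and rng.random() < 0.5:
            half += 1                            # odd subscriber goes to A or B at random
        group_a.extend(members[:half])
        group_b.extend(members[half:])
    return group_a, group_b


# Hypothetical records: (segment, subscriber_id)
subs = [("new", i) for i in range(100)] + [("long_time", i) for i in range(300)]
a, b = stratified_split(subs, segment_of=lambda s: s[0])
print(sum(s[0] == "new" for s in a), sum(s[0] == "new" for s in b))  # 50 50
```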

Breaker also ensures your emails reach their audience as intended. Its deliverability tools catch formatting errors and display issues across different email clients and mobile devices. On top of that, the platform automatically calculates statistical significance, so you’ll know when your results are backed by solid data instead of random chance.

When it comes to pricing, Breaker offers two plans:

- Starter Plan: $200/month for up to 50,000 sends

- Custom Plan: $1,750/month for up to 250,000 sends

Both plans include unlimited email validations, CRM integrations, deliverability management, and white-glove support. These features ensure your A/B tests generate actionable insights, helping you get the most out of your email marketing efforts.

Conclusion

Email A/B testing transforms guesswork into actionable insights, helping you make data-driven decisions that fuel consistent growth. Each test sheds light on your audience's preferences, paving the way for measurable improvements.

"A/B testing isn't about running endless experiments - it's about building a system that consistently delivers better results".

With email marketing delivering an average ROI of $36 to $42 for every $1 spent, the potential is undeniable. Regular testing can lift key metrics like open rates, click-throughs, and conversions by 10% to 25%. Yet 39% of brands don’t take advantage of A/B testing, leaving those gains on the table.

To see the biggest impact, prioritize testing high-leverage elements like subject lines and CTAs. These areas often yield the most significant gains in engagement and revenue. Stick to testing one variable at a time, aim for 95% statistical confidence, and document your findings to guide future experiments. Focus on metrics that matter - conversion rates and qualified leads - rather than vanity metrics like open rates.

As audience preferences evolve, your strategy should, too. What resonated six months ago might not be effective today. Regular testing ensures your campaigns stay relevant and aligned with shifting behaviors. Over time, these small, data-informed adjustments build on each other, driving substantial, long-term growth.

FAQs

How do I calculate the right sample size for an email A/B test?

To figure out the right sample size for your email A/B test, start by pinpointing the metric you want to track - whether it’s the open rate, click-through rate, or conversion rate. Then, check your recent campaigns to find the current baseline for that metric. Decide how much improvement you want to detect (for example, a 5% increase) and stick to standard statistical settings like a 95% confidence level and 80% power.

Once you have these details, plug them into a sample size calculator or use tools like Breaker’s A/B testing feature to calculate how many recipients you’ll need for each group. Make sure your email list is big enough to hit the required sample size. If it’s not, you have options: you can extend the test duration, group similar audience segments together, or tweak your target improvement. After calculating, split your audience evenly into test groups and let the test run until you’ve reached the necessary sample size for reliable results.
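If your list may be too small, it helps to run the calculation in reverse: given your list size, what is the smallest improvement you can reliably detect? This Python sketch assumes a standard two-proportion formula, with the baseline rate used for the no-difference case. `smallest_detectable_lift` is a hypothetical helper, not a Breaker feature.

```python
from math import sqrt
from statistics import NormalDist


def required_per_variation(baseline, relative_lift, confidence=0.95, power=0.80):
    """Recipients per variation for a two-sided two-proportion test (baseline-variance variant)."""
    target = baseline * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    z_beta = NormalDist().inv_cdf(power)
    sd_null = sqrt(2 * baseline * (1 - baseline))
    sd_alt = sqrt(baseline * (1 - baseline) + target * (1 - target))
    return ((z_alpha * sd_null + z_beta * sd_alt) / (target - baseline)) ** 2


def smallest_detectable_lift(list_size, baseline, variations=2, **test_settings):
    """Smallest relative lift (in 0.1% steps) that an evenly split list can detect, or None."""
    per_variation = list_size // variations
    for step in range(1, 1001):                  # 0.1% up to 100% relative lift
        lift = step / 1000
        if baseline * (1 + lift) >= 1:
            break                                # target rate would exceed 100%
        if required_per_variation(baseline, lift, **test_settings) <= per_variation:
            return lift
    return None                                  # list too small for any lift in range


print(smallest_detectable_lift(5_000, 0.40))
```

The answer may be a lift far larger than you realistically expect. In that case, combine similar segments or relax your target improvement, as described above.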

What are the best elements to test in email campaigns to boost ROI?

To get the most out of your email campaigns, focus on testing elements that influence opens, clicks, and conversions. The key is to test one variable at a time, so you can pinpoint exactly what works best for your audience.

Here are some areas worth experimenting with:

- Subject line and preheader text: Even small changes - like adding an emoji or tweaking the phrasing - can lead to noticeable improvements in open rates.

- Call-to-action (CTA): Play around with different text, colors, sizes, or placements to see what drives more clicks.

- Send time and day: Try sending emails at various times (like weekday mornings vs. weekend afternoons) to discover when your audience is most responsive.

- Personalization: Adding the recipient’s name or tailoring content to specific audience segments can make your emails feel more relevant and increase engagement.

By systematically testing these factors, you’ll gain insights into what resonates with your audience and fine-tune your approach to achieve better ROI.

How does email personalization improve engagement and drive revenue?

Personalization in email marketing is all about crafting content - like subject lines, messages, offers, and even timing - that aligns with each recipient's preferences, behaviors, and needs. Instead of sending out generic emails, this approach creates meaningful, one-on-one interactions that feel relevant and worthwhile to the reader.

When emails are personalized, the results speak for themselves. Metrics such as open rates, click-through rates, and conversions often see noticeable improvements. By using A/B testing to experiment with personalized elements, marketers can discover what truly connects with their audience, leading to stronger engagement and better outcomes.

For B2B marketers, Breaker makes personalization a breeze. With features like audience segmentation, real-time analytics, and an easy-to-use newsletter builder, creating tailored campaigns becomes simpler. These tools not only enhance engagement but also drive measurable revenue growth.