Ultimate Guide to Email Personalization Testing

Email personalization is a game-changer for B2B marketing, helping you deliver tailored messages that resonate with your audience. But how do you know if your personalization efforts are working? Testing is the key.

Why test email personalization?

- Avoid assumptions that could harm engagement.

- Discover what works best for your audience, like subject lines or dynamic content.

- Boost open rates, click-through rates, and revenue per recipient.

Quick Stats:

- Personalized emails achieve 26%+ open rates (vs. 21% industry average).

- Click-through rates hit 3.5–4% (vs. 2.6% average).

- Personalized CTAs perform 202% better than generic ones.

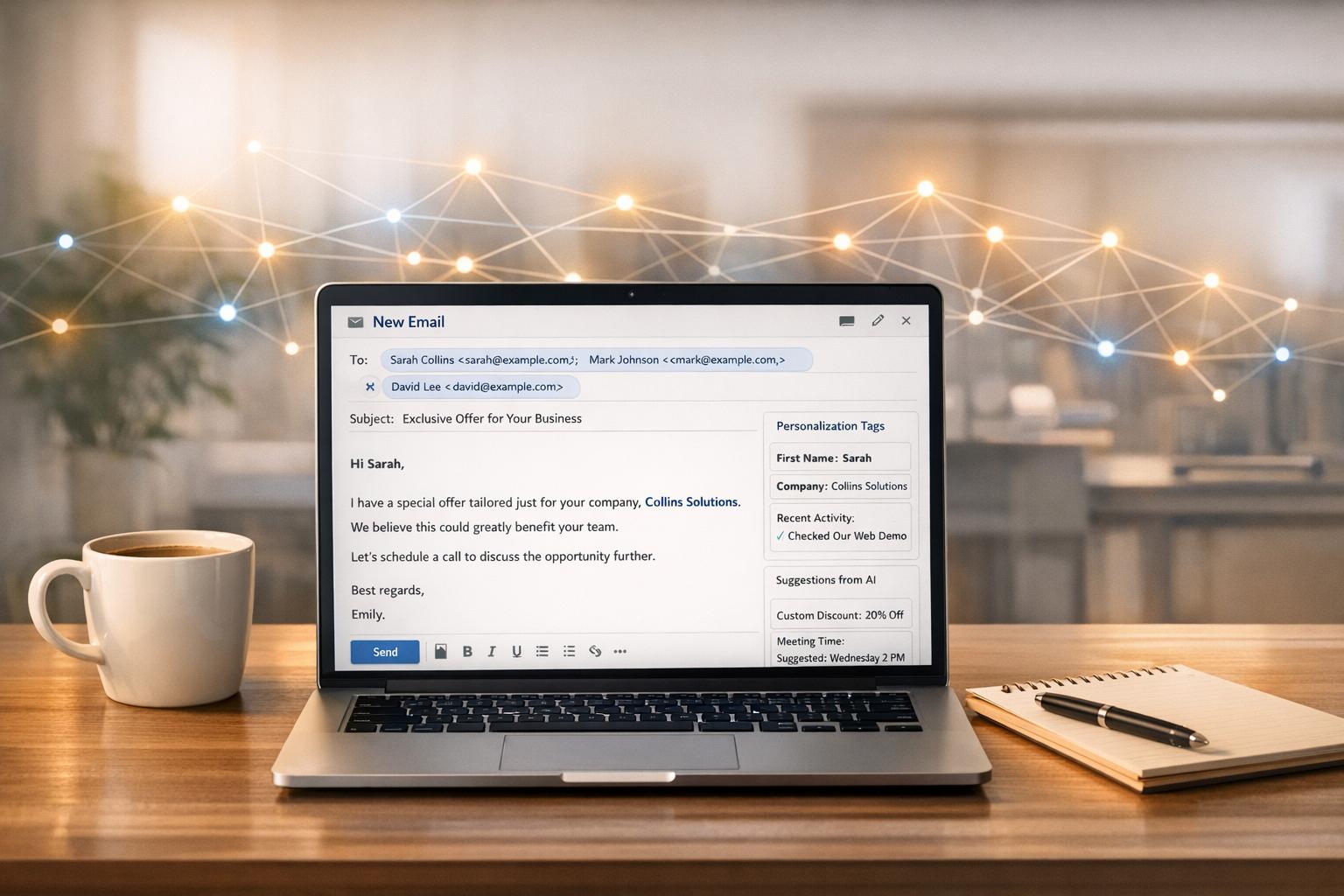

By testing elements like subject lines, dynamic content, and CTAs, you can refine your strategy and drive measurable results. Tools like Breaker simplify this process with automation and real-time analytics, making testing easier and more effective.

The key is to test systematically, isolate one variable at a time, and analyze results to continuously improve your campaigns. Let’s dive into the details.

What to Test in Email Personalization

When it comes to email personalization, testing is the secret sauce for driving better engagement and results. Focus on subject lines, dynamic content, and CTAs to see what resonates most with your audience. Here's a closer look at these elements.

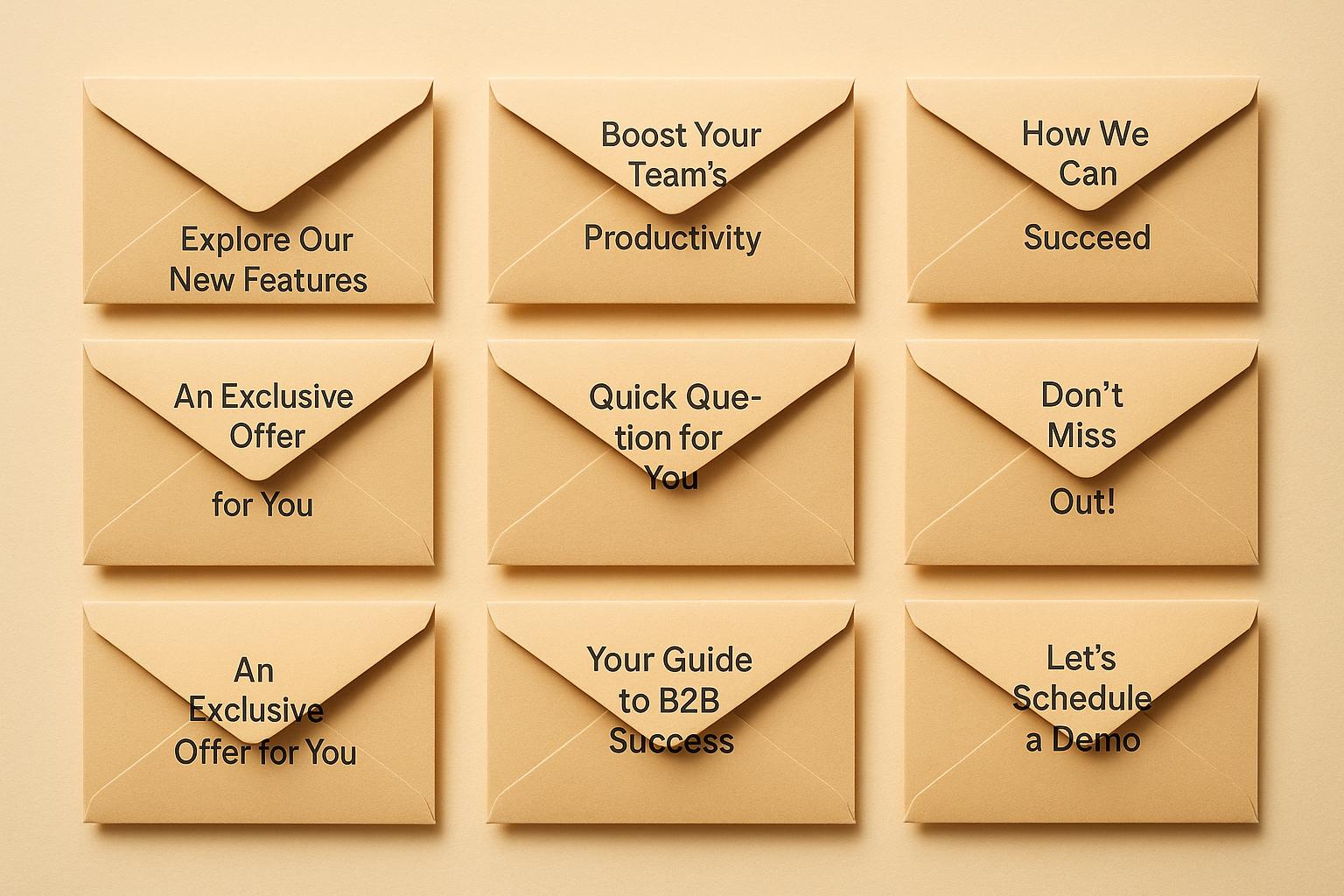

Subject Lines and Preheader Text

The subject line is your email's handshake: it's what gets people to open the door. Testing different approaches here can make a big difference. For instance, research shows that including a recipient's company name in the subject line can boost open rates by 12%. Pair the subject line with preheader text that reinforces it, and test the two together only after you know how each performs on its own. Try experimenting with personalization, like using the recipient's name, industry, or even a specific challenge they face. This helps you figure out what grabs attention and earns the open.

Dynamic Content in Email Body

Dynamic content transforms a generic email into something that feels personal. Test elements like tailored product recommendations, industry-specific messages, or even customized case studies. For example, adding industry-specific case studies has been shown to increase click-through rates by 20%. To get the most out of this, segment your audience by factors like industry, job role, or behavior. Interactive features, such as polls or personalized quizzes, can also boost engagement while giving you valuable data for future campaigns.
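As a rough illustration of how a dynamic content block might be chosen per recipient, here is a minimal Python sketch. The `industry` field, the case-study titles, and the fallback text are all hypothetical; your email platform will have its own way of defining dynamic blocks.

```python
# Hypothetical mapping from industry segment to a case-study block.
CASE_STUDIES = {
    "saas": "How a SaaS team cut onboarding time",
    "manufacturing": "How a plant manager reduced downtime",
}

# Fallback shown when the industry is missing or not mapped.
DEFAULT_BLOCK = "See how teams like yours use our platform"


def pick_case_study(recipient: dict) -> str:
    """Return the content block for a recipient, falling back to a generic one."""
    industry = (recipient.get("industry") or "").strip().lower()
    return CASE_STUDIES.get(industry, DEFAULT_BLOCK)


print(pick_case_study({"email": "ana@example.com", "industry": "SaaS"}))
print(pick_case_study({"email": "li@example.com"}))
```

The fallback matters as much as the mapping: a recipient with missing data should see a sensible generic block rather than an empty space.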

Calls to Action (CTAs)

Personalized CTAs can outperform generic ones by a staggering 202%. Test different aspects like wording, design, and placement to find the sweet spot. For example, instead of a generic "Learn More", try something like "Get Your Customized Toolkit" to make the action feel more relevant. You can also tweak button color, size, and style - just remember to change one variable at a time so you can pinpoint what works best.

| Testing Element | Industry Benchmark | Personalized Performance | Improvement |
|---|---|---|---|
| Open Rate | ~21% | 26%+ | ~5 percentage points (~24% relative) |
| Click-Through Rate | 2.6% | 3.5–4% | 35–54% relative increase |
| CTA Performance | Baseline | 202% better | ~3× baseline |
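When comparing benchmarks like these, it helps to keep percentage points and relative lift apart, since the same result can be reported either way. The short Python sketch below shows the arithmetic using the figures from the table; the `lift` helper is just an illustrative name.

```python
def lift(baseline: float, variant: float) -> tuple[float, float]:
    """Return (percentage-point difference, relative lift in percent)."""
    points = variant - baseline
    relative = (variant - baseline) / baseline * 100
    return points, relative


# Open rate: 21% benchmark vs. 26% personalized.
open_points, open_relative = lift(21.0, 26.0)

# Click-through rate: 2.6% benchmark vs. the 3.5-4% personalized range.
ctr_low = lift(2.6, 3.5)[1]
ctr_high = lift(2.6, 4.0)[1]

print(f"Open rate: +{open_points:.1f} points, {open_relative:.1f}% relative")
print(f"CTR: {ctr_low:.1f}% to {ctr_high:.1f}% relative")
```

Stating which of the two you mean when you set a goal ("a 5-point increase" versus "a 5% increase") avoids confusion when you later judge the test.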

Personalized emails don’t just perform better - they generate three to five times more revenue per recipient compared to generic campaigns. To make your tests count, set clear goals upfront. For instance, aim for a 5% bump in open rates or a 10% climb in click-through rates. Tools like Breaker can help streamline the process by automating lead generation, targeting the right audience, and providing real-time analytics, making it easier to turn test results into actionable insights.

Setting Up Personalization Tests

Turning data into actionable insights through personalization testing requires clear goals, thoughtful strategies, and efficient tools.

Planning Your Test

Start by setting specific, measurable goals. Instead of aiming for something vague like "boost engagement", focus on concrete outcomes - such as increasing open rates by 10% or doubling click-through rates. These benchmarks make it easier to evaluate your success.

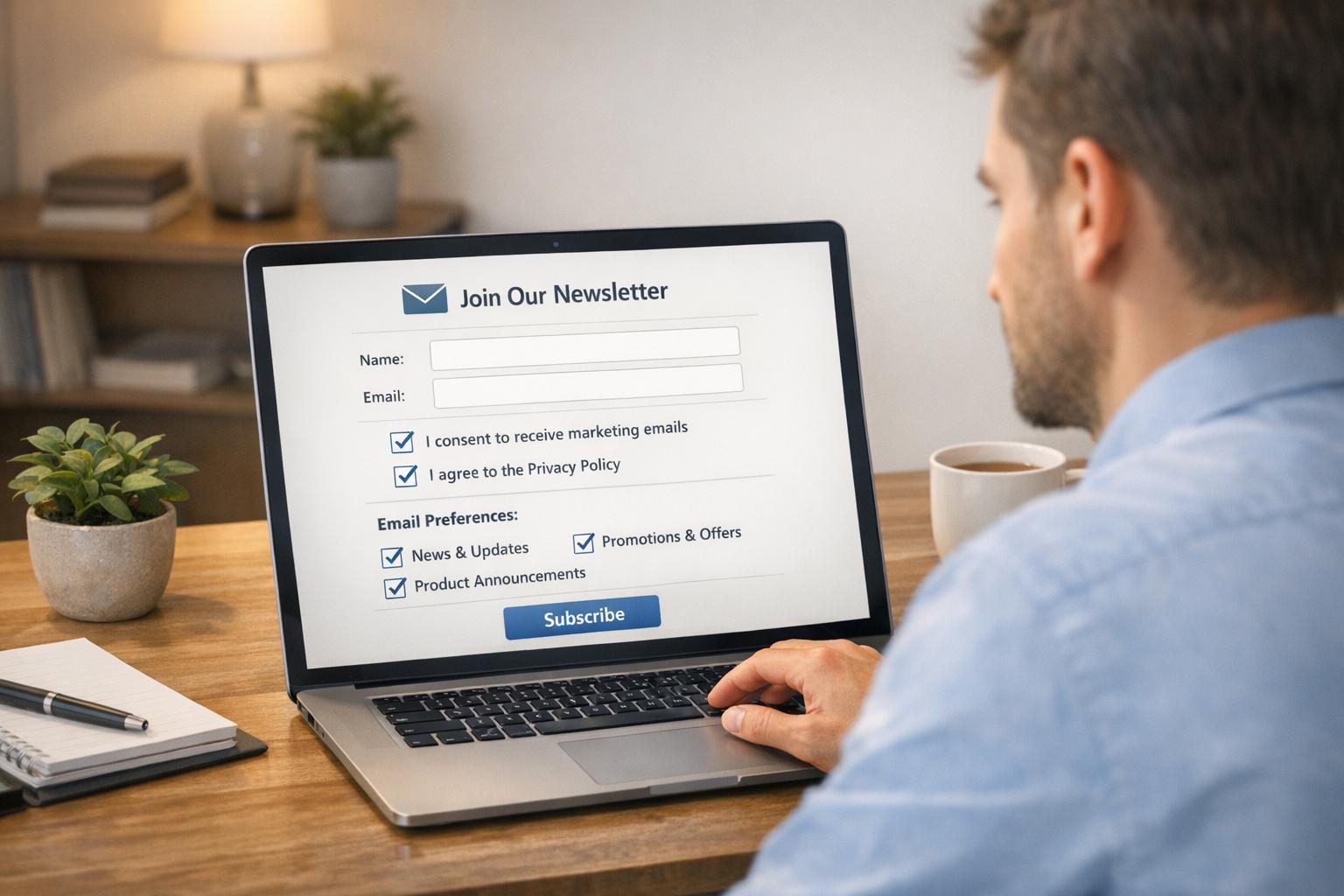

Accurate audience segmentation is another key step. Divide your audience into meaningful groups based on factors like behavior, role, company size, or engagement history. For instance, you could separate IT managers from marketing directors, or compare startups with large enterprises. You might even look at engagement patterns, such as frequent openers versus occasional readers. The more tailored your segments, the more useful your test results will be.
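If your contact data lives in a spreadsheet or CRM export, segmentation can be as simple as grouping records by the attributes you care about. The Python sketch below assumes each contact is a dictionary with hypothetical `role` and `company_size` fields.

```python
from collections import defaultdict


def segment(contacts, keys=("role", "company_size")):
    """Group contacts by the given attributes; missing values become 'unknown'."""
    groups = defaultdict(list)
    for contact in contacts:
        segment_key = tuple(contact.get(key) or "unknown" for key in keys)
        groups[segment_key].append(contact)
    return dict(groups)


contacts = [
    {"email": "a@example.com", "role": "IT manager", "company_size": "startup"},
    {"email": "b@example.com", "role": "Marketing director", "company_size": "enterprise"},
    {"email": "c@example.com", "role": "IT manager", "company_size": "startup"},
]

for segment_key, members in segment(contacts).items():
    print(segment_key, len(members))
```

Printing the size of each segment is a quick check on whether a group is large enough to test on its own or should be merged with a similar one.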

When testing, prioritize high-impact elements like subject lines, call-to-action buttons (CTAs), and dynamic content. To keep your results clear and actionable, test one variable at a time using A/B tests.

Once your goals and audience segments are set, it’s time to decide on the right testing method.

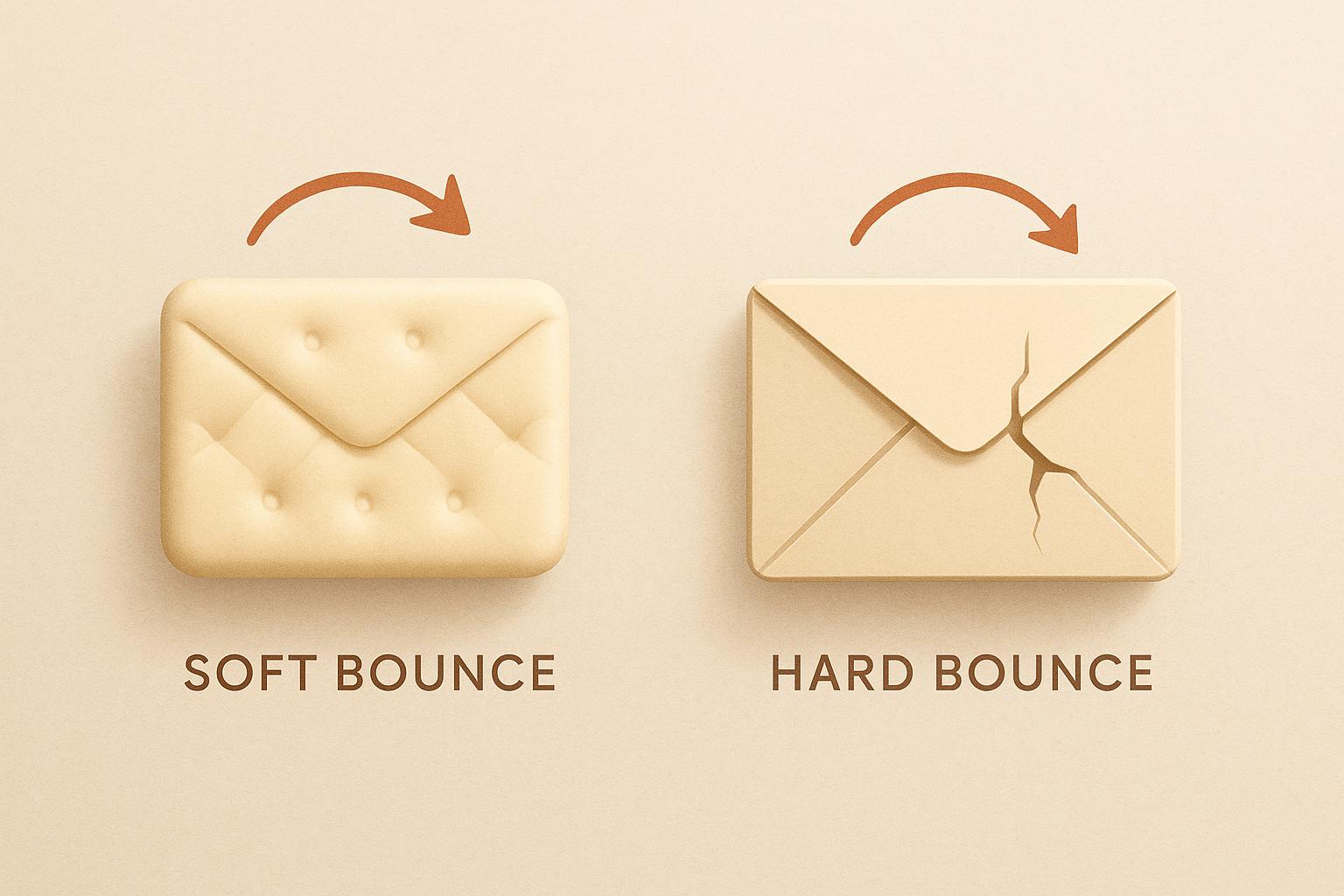

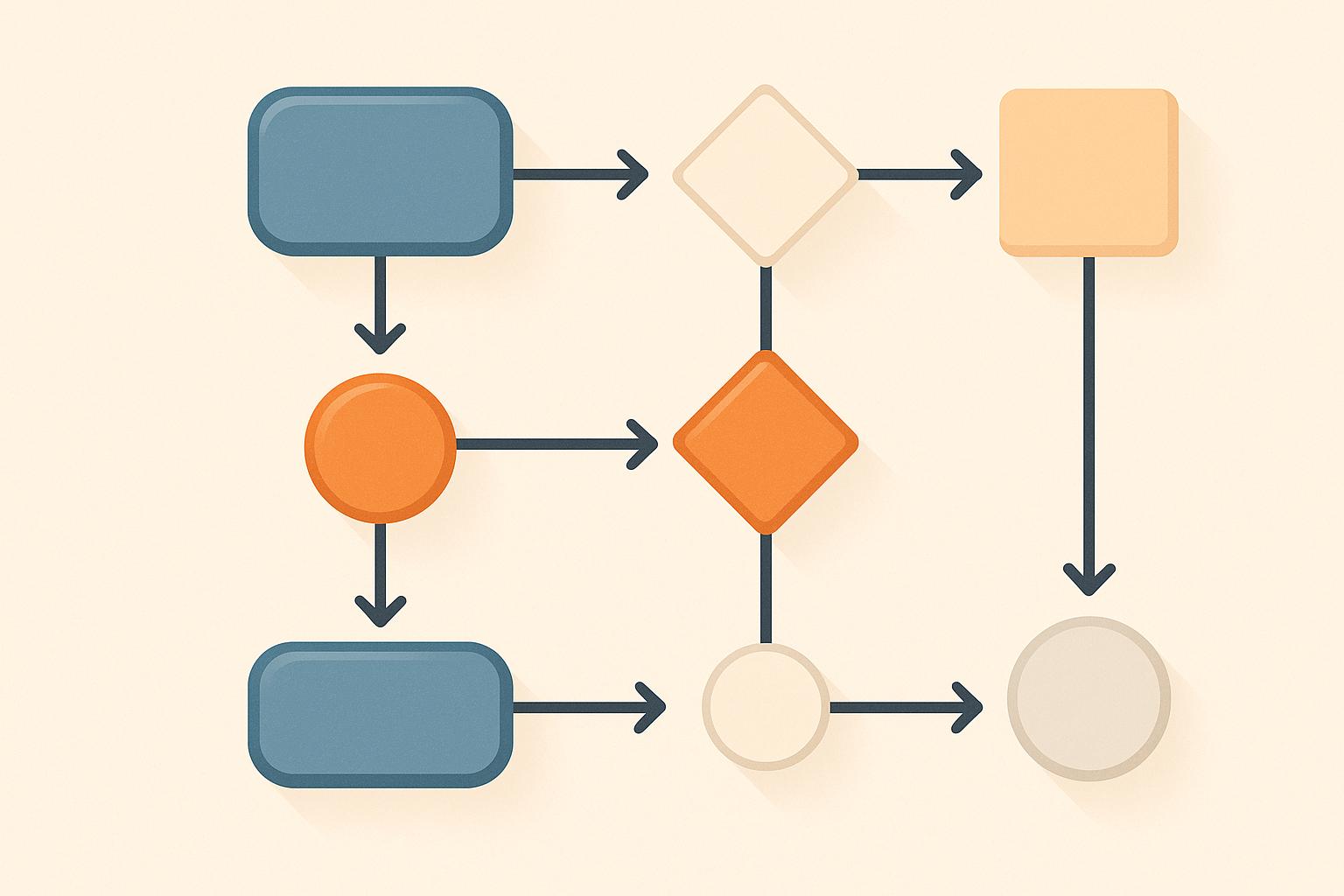

Running A/B and Multivariate Tests

A/B testing is a staple for email personalization. It involves creating two versions of your email that differ by just one element - like a personalized subject line versus a generic one. Recipients are randomly assigned to each group, which helps eliminate bias. Make sure each group is large enough to ensure statistical significance.
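Most email platforms handle the random split for you. If you ever need to do it by hand, a minimal Python sketch looks like this; the function name and the fixed seed are illustrative choices, with the seed there only so the split can be reproduced later.

```python
import random


def assign_variants(recipients, variants=("A", "B"), seed=42):
    """Shuffle recipients, then deal them out evenly across the variants."""
    rng = random.Random(seed)
    shuffled = list(recipients)
    rng.shuffle(shuffled)
    groups = {variant: [] for variant in variants}
    for index, recipient in enumerate(shuffled):
        groups[variants[index % len(variants)]].append(recipient)
    return groups


recipients = [f"user{i}@example.com" for i in range(2001)]
groups = assign_variants(recipients)
print({variant: len(members) for variant, members in groups.items()})
```

Shuffling before splitting is the important step: taking the first half of a list sorted by signup date or company name would quietly bias one group.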

For a deeper dive, multivariate testing allows you to test multiple elements at once, such as combining different subject lines with various CTAs. This method provides insights into how these elements interact, but it does require larger sample sizes and more complex analysis.
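To see why multivariate tests need larger lists, it helps to count the combinations. The Python sketch below uses made-up subject lines and CTAs, and the 1,000-per-combination figure is an illustrative assumption rather than a fixed rule.

```python
from itertools import product

# Hypothetical variants for two elements.
subject_lines = ["Generic", "Company name", "Industry challenge"]
ctas = ["Learn More", "Get Your Customized Toolkit"]

# Every subject line paired with every CTA.
cells = list(product(subject_lines, ctas))


def required_total(cell_count: int, per_cell: int = 1000) -> int:
    """Total recipients needed if each combination gets `per_cell` recipients."""
    return cell_count * per_cell


print(len(cells), "combinations")
print(required_total(len(cells)), "recipients in total")
```

Adding a third element with two options would double the number of combinations again, which is why multivariate tests are usually reserved for larger lists.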

To ensure reliable results, send all email variants at the same time. Consistent scheduling across test groups minimizes the impact of external factors.

| Testing Method | Best For | Sample Size Needed | Complexity |
|---|---|---|---|
| A/B Testing | Single variable changes | 1,000+ per variant | Low |
| Multivariate Testing | Multiple element combinations | 5,000+ total | High |
| AI-Powered Testing | Real-time optimization | Varies | Medium |

Using Tools like Breaker

After choosing your testing method, automation tools can simplify and speed up the process. Breaker, for example, offers a suite of features to streamline testing, including automated audience targeting, a user-friendly newsletter builder, and real-time analytics to track open and click-through rates. Its algorithm matches B2B subscribers to your ideal customer profile, ensuring your test groups are both relevant and engaged.

The platform’s newsletter builder makes it easy to design test variants, incorporating personalized links, CTAs, and dynamic content in just a few clicks. This efficiency helps you test more quickly and effectively.

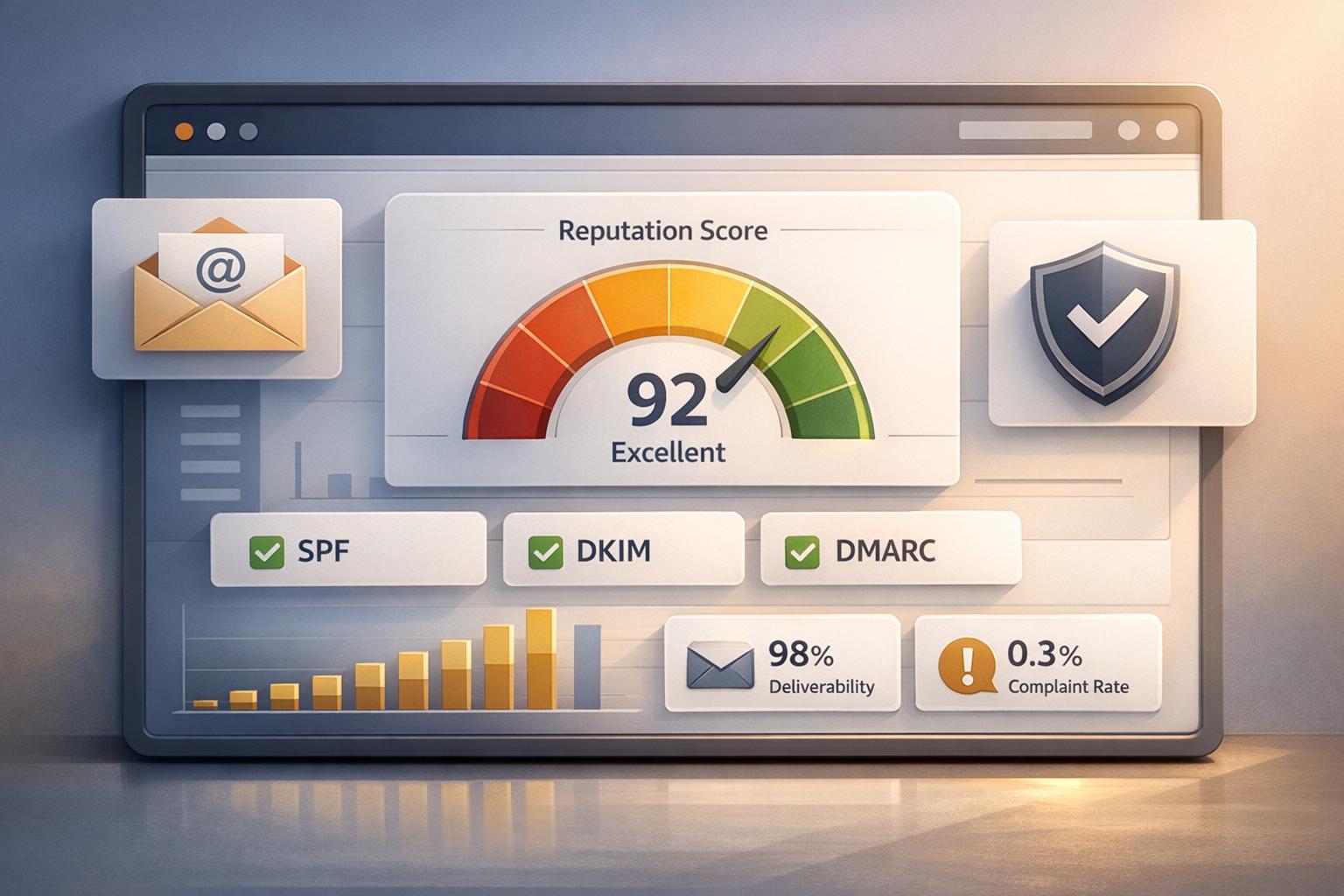

Breaker also handles deliverability by automating tasks like managing mail streams, maintaining clean email lists, and monitoring sender reputation. This ensures your test emails reliably reach their intended inboxes, removing a major variable from your results.

With an average open rate of 40% and a click-through rate of 8%, Breaker provides a strong foundation for testing. Its 4.8/5 satisfaction rating among B2B marketers highlights its ability to combine technical reliability with an intuitive user experience.

"We tripled our sponsor revenue and doubled our community memberships with Breaker. Well over a 10X ROI." - Peter Lohmann, CEO, RL Property Management

Analyzing Test Results

Once your tests are complete, it’s time to dig into the data and use it to fine-tune your future campaigns.

Metrics to Track

Open rates are a good indicator of how well your personalization efforts are working. On average, the industry sees open rates around 21%, but personalized campaigns often push this number to 26% or more. Breaker users, however, report even better results, with an impressive average open rate of 67% across active campaigns.

Click-through rates (CTR) reveal how engaging your content is once recipients open your email. While the industry average sits at about 2.6%, personalized campaigns generally achieve between 3.5% and 4%. Breaker users, leveraging targeted messaging, see an extraordinary 44% average CTR.

Conversion rates show the ultimate success of your campaign by tracking how many recipients take the desired action - whether it’s downloading a resource, signing up for a demo, or making a purchase. This metric ties your personalization efforts directly to measurable business outcomes.

Reply rates are especially critical for B2B campaigns. A higher reply rate reflects deeper engagement and interest, showcasing the effectiveness of your personalized outreach.

| Metric | Industry Average | Personalized Campaigns | Breaker Users |
|---|---|---|---|
| Open Rate | 21% | 26%+ | 67% |
| Click-Through Rate | 2.6% | 3.5–4% | 44% |
| Conversion Rate | Varies by industry | 3–5× higher revenue per recipient | - |

By analyzing these metrics, you can make targeted adjustments to your campaigns, ensuring that each test builds on the last for even better results. Before acting on a difference between variants, though, confirm that it is statistically meaningful.

Understanding Statistical Significance

Statistical significance helps determine if the differences in your test results are real or just random noise. For example, if a personalized subject line boosts open rates compared to a generic one, a significant result tells you that a difference this large would be unlikely to appear by chance alone. Most email platforms use a 95% confidence threshold to declare results significant.

To achieve reliable results, you need a large enough sample size. Testing with only 100 recipients per variant rarely provides trustworthy insights, but 1,000+ recipients per variant usually does. Running tests over a full business cycle (typically a week) also helps capture daily fluctuations in email behavior.
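If your platform does not report significance, one common way to check it yourself is a two-proportion z-test. The Python sketch below is a minimal version using only the standard library; the function name and the example counts are illustrative, with the counts chosen to mirror a 21% versus 26% open rate.

```python
import math


def two_proportion_z_test(successes_a, n_a, successes_b, n_b):
    """Return (z, two-sided p-value) for the difference between two rates."""
    rate_a = successes_a / n_a
    rate_b = successes_b / n_b
    # Pooled rate under the assumption that both variants perform the same.
    pooled = (successes_a + successes_b) / (n_a + n_b)
    standard_error = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (rate_b - rate_a) / standard_error
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# 1,000 recipients per variant: 210 opens (generic) vs. 260 opens (personalized).
z_large, p_large = two_proportion_z_test(210, 1000, 260, 1000)

# The same rates with only 100 recipients per variant.
z_small, p_small = two_proportion_z_test(21, 100, 26, 100)

print(f"1,000 per variant: z = {z_large:.2f}, significant at 95%: {p_large < 0.05}")
print(f"100 per variant:   z = {z_small:.2f}, significant at 95%: {p_small < 0.05}")
```

The same five-point gap clears the 95% threshold with 1,000 recipients per variant but not with 100, which is the practical reason behind the sample size guidance above.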

Keep in mind that external factors like holidays or major industry events can skew your results. If you can’t avoid testing during these periods, be sure to account for these anomalies when analyzing your data.

Breaker’s real-time analytics take the guesswork out of statistical significance by automatically calculating it for you. This ensures your tests are solid and your conclusions are reliable. Once validated, these insights can be used to sharpen your campaign strategies.

Applying Insights to Future Campaigns

The real value of testing lies in applying what you’ve learned to improve future campaigns.

Start by identifying recurring patterns. If personalized subject lines consistently outperform generic ones, make personalization a standard part of your strategy. Similarly, if dynamic content blocks generate higher click-through rates across various audience segments, incorporate them more broadly.

Segment-specific insights can be particularly powerful. For example, you might discover that C-level executives respond better to industry-focused messaging, while mid-level managers prefer role-specific content. These details enable you to fine-tune your campaigns for each audience.

When certain elements underperform, address them systematically. For instance, if personalized calls to action aren’t converting despite strong click-through rates, take a closer look at your landing page or the offer itself. Keeping a detailed testing log - tracking what worked, what didn’t, and key factors like audience segments and sample sizes - can help you spot trends over time.
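A testing log does not need special software; a spreadsheet or CSV file is enough. As one possible structure, the Python sketch below defines a record with the fields mentioned above and writes it out as CSV. The field names and the sample entry are hypothetical.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ExperimentRecord:
    name: str
    variable: str
    segment: str
    sample_size_per_variant: int
    duration_days: int
    winner: str
    notes: str = ""


def to_csv(records) -> str:
    """Serialize experiment records to CSV text with a header row."""
    buffer = io.StringIO()
    columns = [field.name for field in fields(ExperimentRecord)]
    writer = csv.DictWriter(buffer, fieldnames=columns)
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


log = [
    ExperimentRecord(
        name="Subject line: company name",
        variable="subject line",
        segment="IT managers",
        sample_size_per_variant=1000,
        duration_days=7,
        winner="personalized",
        notes="Sent during a normal business week",
    )
]
print(to_csv(log))
```

Recording the segment, sample size, and context alongside the winner is what lets you compare tests months later instead of relying on memory.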

"Breaker is our #1 source of booked calls." - Brennan Haelig, CEO, Jumpstart ROI

Breaker’s intuitive dashboard simplifies the process of applying these insights. With clear data visualizations and precise targeting tools, you can confidently scale successful personalization tactics across similar audience segments.

Best Practices and Common Mistakes

When running email personalization tests, precision is key. Focus on isolating one variable, segmenting your audience thoughtfully, and establishing measurable goals to guide your efforts.

Proven Testing Strategies

Isolate one variable per test to ensure the results are easy to interpret. Changing multiple elements at once makes it impossible to pinpoint what drove the performance shift. For example, in a test of a generic subject line against an industry-specific one, the industry-specific version led to a 22% higher open rate and a 15% increase in click-through rate.

Segment your audience wisely based on factors like industry, company size, job roles, or engagement history. This approach yields more actionable insights than testing on a broad, undifferentiated list. For instance, testing personalized calls-to-action with executives versus mid-level managers can highlight distinct preferences.

Establish clear, measurable goals for each test. Instead of vaguely aiming for "better results", set specific targets - like a 15% increase in open rates or a 0.5 percentage point boost in click-through rates. Defined objectives help you assess outcomes and make confident decisions.

Use adequate sample sizes to ensure your results are reliable. Testing with fewer than 100 recipients per variant often leads to unreliable conclusions, while 1,000+ recipients per variant typically provides dependable data. Running tests over a full business cycle, such as a week, also accounts for daily fluctuations in email engagement.

Mistakes to Avoid

Even with a solid strategy, certain missteps can derail your efforts.

Testing multiple variables at once leads to ambiguous results. If you adjust several elements simultaneously, you won't know which change influenced performance, leaving you with unclear next steps.

Overlooking statistical significance can result in wasted resources. A slight improvement - like a 5% higher open rate - might not be meaningful without proper validation. Aim for a 95% confidence level to ensure your results are dependable.

Overdoing personalization can backfire. While personalization often boosts engagement (e.g., personalized campaigns can drive 3–5× higher revenue per recipient compared to generic ones), excessive details like stacking multiple personal elements in a subject line can feel intrusive, leading to "personalization fatigue."

Ignoring external factors can skew your results. Events like holidays, industry conferences, or economic announcements can influence email performance. If testing during such periods, document the context and consider retesting under more typical conditions.

Rushing decisions undermines the process. Checking results too soon - after just a few hours or a day - doesn’t allow enough time to gather meaningful data. Let tests run their full duration for reliable insights.

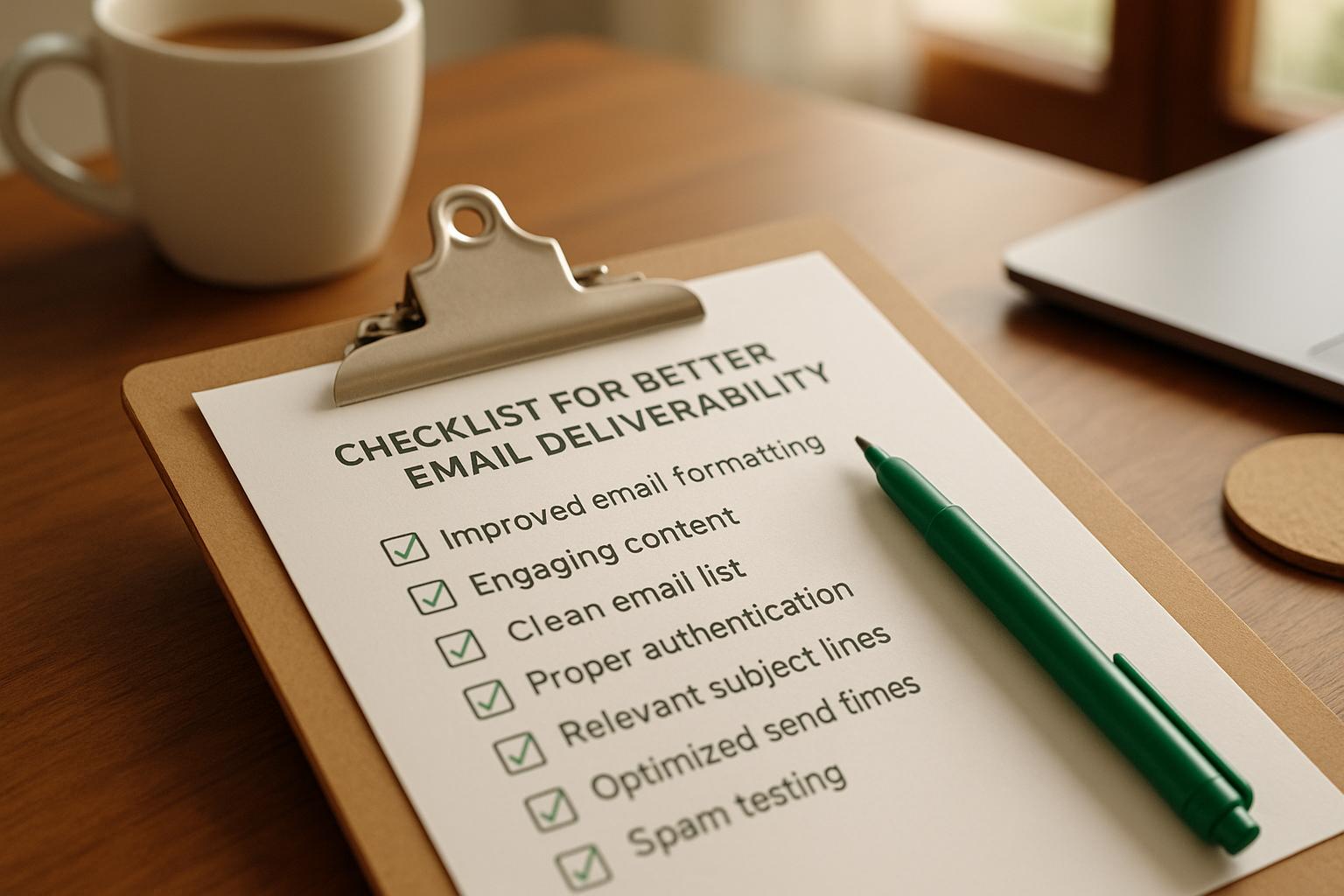

Personalization Testing Checklist

Use this checklist to streamline your testing process and optimize outcomes:

- Define measurable objectives that clearly outline what you aim to achieve and how success will be evaluated.

- Test one variable at a time to ensure you can attribute results to specific changes.

- Segment your audience thoughtfully, using criteria like industry, job role, or engagement history. Ensure each segment has enough recipients (ideally 1,000+ per variant) for statistically significant results.

- Develop distinct test variations that are likely to influence behavior. Avoid making changes so minor they’re unlikely to yield noticeable differences.

- Double-check personalization fields to ensure dynamic content displays correctly across all email clients.

- Set up robust tracking for key metrics like open rates, click-through rates, conversion rates, and unsubscribes.

- Document your test setup, including audience segments, sample sizes, duration, and any external factors that could impact results.

- Monitor for statistical significance, but avoid making decisions prematurely. Allow the test to run its full planned duration.

- Analyze results across all metrics, not just your primary goal, as secondary insights can be equally valuable.

- Apply findings to future campaigns by scaling successful tactics and identifying patterns. Tools like Breaker's analytics platform can simplify this process.
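One checklist item that is easy to automate is the personalization field check. The Python sketch below scans a template for merge tags and reports which ones a recipient record cannot fill. The `{{field}}` tag syntax is an assumption for illustration; merge tag formats differ between email platforms.

```python
import re

# Assumed merge-tag syntax: {{field_name}}, with optional inner spaces.
MERGE_TAG = re.compile(r"\{\{\s*(\w+)\s*\}\}")


def missing_fields(template: str, recipient: dict) -> list[str]:
    """Return the merge fields in the template that are empty for this recipient."""
    required = set(MERGE_TAG.findall(template))
    return sorted(
        field for field in required
        if not str(recipient.get(field) or "").strip()
    )


template = "Hi {{first_name}}, ideas for {{ company }} this quarter"

print(missing_fields(template, {"first_name": "Ana", "company": "Acme"}))
print(missing_fields(template, {"first_name": "Ana", "company": ""}))
```

Running a check like this across the whole list before sending catches the "Hi ," style of error, though it does not replace previewing the email in real clients.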

Conclusion

Testing email personalization is a key strategy for B2B marketers aiming to improve engagement and drive better results. Personalized campaigns consistently outperform generic ones, delivering higher engagement rates and boosting revenue.

Key Takeaways

The main takeaway here is that ongoing testing fosters a data-driven approach to email marketing. By experimenting with personalization elements like subject lines, dynamic content, and calls-to-action, you can make measurable improvements in performance. These efforts lead to more qualified leads and shorter sales cycles.

Tools like Breaker simplify the testing process by automating audience segmentation, offering real-time analytics, and enabling precise targeting. This allows marketers to focus on interpreting the results and applying the insights to future campaigns. Designed specifically for B2B marketers, Breaker includes features like automated lead generation and high deliverability rates, making personalization testing both efficient and reliable.

Remember, the process of testing is just as important as the outcomes. Getting results you can trust means isolating one variable per test, using large enough sample sizes, and running tests for the right duration. However, balance is crucial - overdoing personalization can lead to audience fatigue.

Use these insights to refine your approach and achieve consistent improvements in your email campaigns.

Next Steps for Marketers

Take these insights and put them into action to sharpen your email strategy. Start by reviewing your current campaigns to identify areas ripe for personalization testing. Begin with simpler elements like subject lines or sender names before moving on to more complex features like dynamic content. These initial tests often yield valuable insights and help build confidence in your process.

Leverage unified customer data to create highly relevant campaigns. Clearly defining your Ideal Customer Profile (ICP) will help you segment your audience effectively, ensuring your messages resonate with each group.

Schedule regular tests to keep pace with changing customer expectations and industry trends. Make strategic choices about what to test next, focusing on quick wins that can immediately enhance performance.

The lessons from email personalization testing don’t stop at email campaigns. Use the preferences and insights you uncover to inform other areas of your marketing strategy, from content creation to social media and even sales conversations. This approach multiplies the impact of your efforts.

For inspiration, consider companies like NA-KD, which saw a 72× return on investment and a 25% increase in customer lifetime value by adopting a systematic testing process and refining their personalization strategies. Your journey toward better email personalization can start with the very next email you send.

FAQs

How do I calculate the right sample size for testing email personalization?

Determining the right sample size for email personalization testing is all about finding a balance between accuracy and practicality. Start by clarifying your goals - whether that's boosting open rates, click-through rates, or another metric. Then, establish a confidence level (typically 95%) and a margin of error (like ±5%) to guide your calculations.

To figure out the sample size, you can use an online calculator or a statistical formula. These tools take into account your audience size, the expected response rate, and the number of test variations. If you're working with a smaller email list, aim to test with at least 10-20% of your total audience. This approach ensures you gather meaningful insights without using up the majority of your list before identifying the best-performing version.

Remember, testing with too small a sample can lead to unreliable results, while larger samples yield more precise data but may not always be practical. Adjust your approach based on the unique needs of your campaign and the size of your audience.
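For readers who prefer a formula to an online calculator, the Python sketch below uses a standard approximation for comparing two proportions. It assumes a 95% confidence level and 80% statistical power; the power setting is a common convention chosen for this example, not a figure from this guide, and the function name is illustrative.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline, target, alpha=0.05, power=0.80):
    """Approximate recipients needed per variant to detect baseline -> target."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided confidence level
    z_beta = NormalDist().inv_cdf(power)           # assumed statistical power
    variance = baseline * (1 - baseline) + target * (1 - target)
    n = (z_alpha + z_beta) ** 2 * variance / (target - baseline) ** 2
    return math.ceil(n)


# Detecting a lift in open rate from 21% to 26%.
n = sample_size_per_variant(0.21, 0.26)
print(n, "recipients per variant")
```

For this example the result lands a little above 1,100 per variant, in line with the 1,000+ rule of thumb. Smaller expected lifts push the number up quickly, because the required sample grows with the inverse square of the difference you want to detect.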

What mistakes should I avoid when running A/B tests for email personalization?

To run successful A/B tests for email personalization, steer clear of these common mistakes:

- Testing too many variables simultaneously: Stick to one element at a time - like subject lines, CTAs, or personalization tags - so you can pinpoint what’s driving the results.

- Using too small of a sample size: Make sure your test reaches enough recipients to yield statistically reliable outcomes.

- Stopping tests prematurely: Give your test ample time to collect meaningful data. Depending on your audience size, this usually means running it for at least a few days.

- Overlooking audience segmentation: Personalization works best when it’s tailored to the specific audience segment you’re targeting.

Avoiding these pitfalls will help you gather clearer insights and make your email personalization strategies more effective.

How can I use insights from email personalization tests to enhance my overall marketing strategy?

Email personalization tests offer more than just insights for your inbox - they can shape your entire marketing strategy. For example, if a specific subject line sparks high engagement, consider using a similar tone or phrasing in your social media posts or website content. Similarly, the types of content that resonate in emails can help you decide what topics or formats to focus on for blogs, ads, or other platforms.

By diving into these test results, you'll gain a clearer picture of what your audience connects with, allowing you to craft messaging that's more targeted and effective across the board. Using these insights keeps your marketing consistent and helps more of your efforts hit the mark.