A/B Testing Email Campaigns for Higher Engagement and ROI

A/B testing email campaigns helps you improve engagement by testing what works—subject lines, content, CTAs, and more. Instead of guessing, you use real data to optimize performance and drive conversions.

If you’re focused on growing your B2B newsletter or improving lead quality, A/B testing is a must-have tactic. Breaker simplifies this process by automating list building and deliverability, so you can focus on running smarter campaigns.

Explore this guide to learn how to set up effective A/B tests, avoid common mistakes, and apply your results to future sends.

What Is A/B Testing Email Campaigns?

A/B testing lets you compare different versions of your email to see which one works better. By testing small changes, you can improve essential metrics like open rates, clicks, and conversions. It helps you make smarter decisions instead of guessing what your audience prefers.

Definition and Core Concepts

A/B testing in email marketing means sending two versions of the same email to separate groups of your subscribers. You change one element at a time, like the subject line or call to action. This method shows you which version gets better results.

You randomly split your audience so that both groups are similar. This makes your data more reliable. After the test, you measure performance with key metrics such as open rate, click-through rate, or conversion rate. The better-performing version becomes your primary email.

Benefits of A/B Testing for Email Marketing

A/B testing helps you avoid costly mistakes by finding what works best before sending to your full list. It gives you clear data to back your decisions and boosts your email engagement over time.

You learn what your audience cares about, like which headlines or images catch their attention. This can increase your newsletter growth and lead generation.

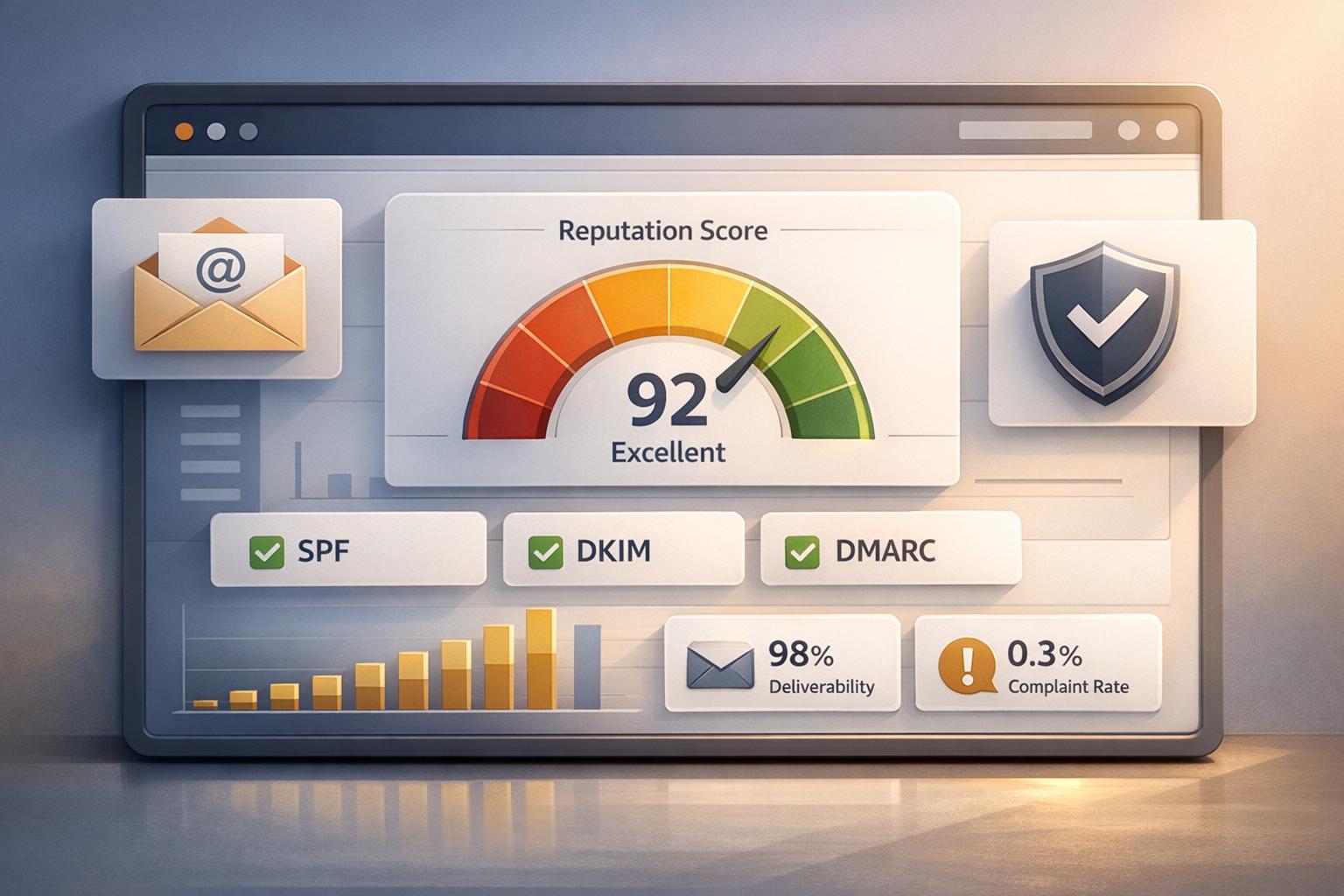

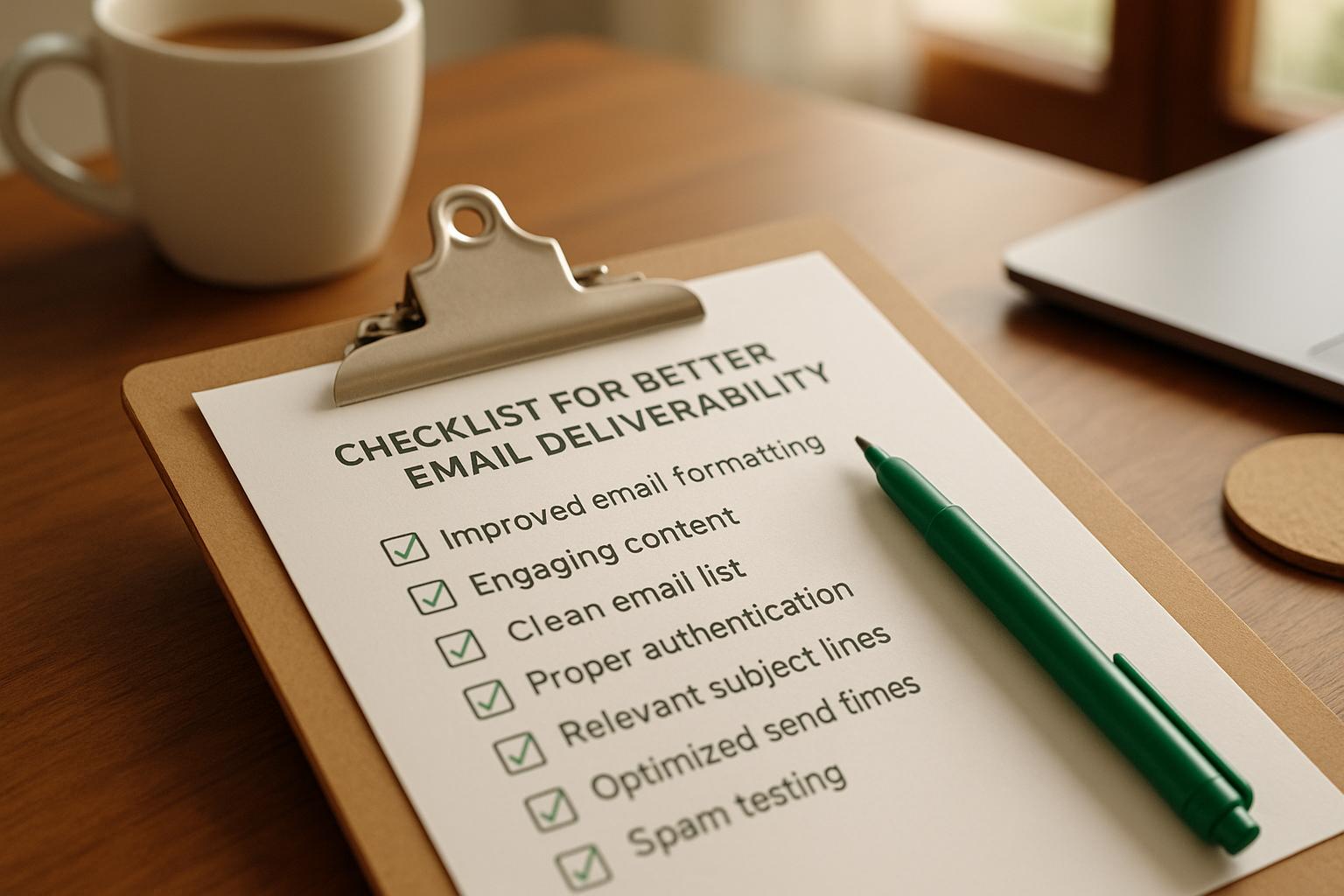

Testing also helps improve your email deliverability and overall ROI by showing you which elements trigger spam filters or draw little engagement, so you can drop them.

Common Elements to Test

Focus on elements that affect engagement and conversion, such as:

- Subject lines: Different words or lengths

- Email content: Short vs. long copy, tone changes

- Call-to-action (CTA): Button colors, text, and placement

- Send time: Morning vs. afternoon or different weekdays

- Sender name: Brand name vs. individual’s name

Start with one change per test to know exactly what impacts your results. Track the data carefully and use those insights to keep improving your campaigns. Automation can help run these tests while growing your list with the right subscribers.

Setting Up A/B Tests for Email Campaigns

To get the most from your A/B tests, pick the right elements to change, divide your audience in smart ways, and decide how to measure success. These steps help you learn what works and improve your email results step by step.

Choosing the Right Variables to Test

Decide which part of your email might affect performance most. Common choices include subject lines, sender names, call-to-action buttons, or images. For example, testing two different subject lines can show which grabs attention better.

Change one element at a time to pinpoint what actually impacts your open or click rates. If you test subject lines and images together, you won’t know which made the difference.

Testing the subject line’s tone or the placement of your CTA can reveal small tweaks that drive more engagement.

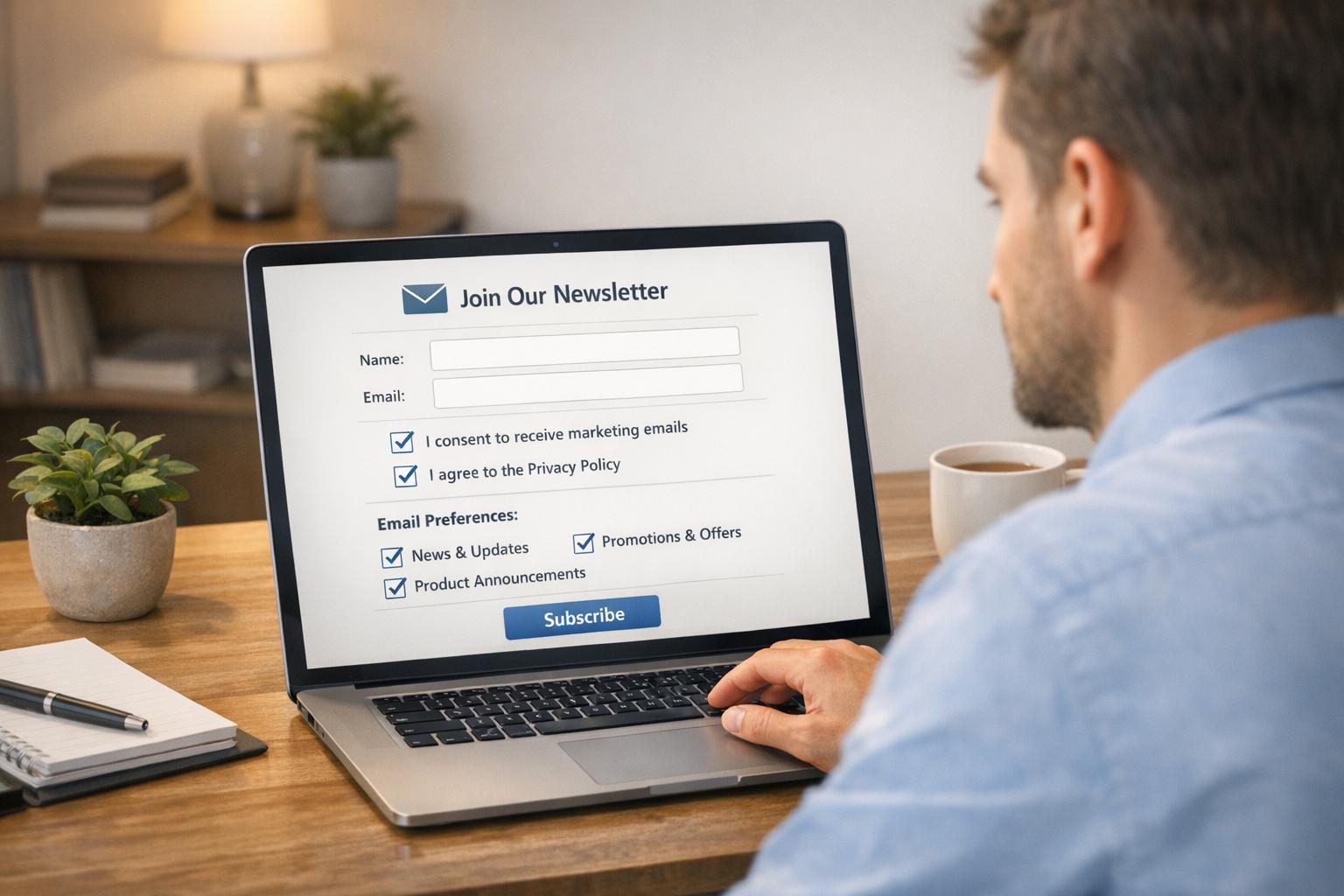

Segmenting Your Audience Effectively

Divide your email list into equal groups that represent your whole audience. Make sure the segments are random to avoid bias. This keeps your test results reliable.

If you have 10,000 subscribers, split them into two groups of 5,000, one for each version of your email. You can also segment by industry or job role to see if different messages work better for specific groups.

Avoid overlap between segments. Each subscriber should only receive one test version.
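As a minimal sketch of a random, non-overlapping split, the Python below shuffles a copy of the list and cuts it in half. The `split_audience` function, the fixed seed, and the example addresses are illustrative assumptions, not part of any particular email platform.

```python
import random

def split_audience(subscribers, seed=42):
    """Shuffle a copy of the list and cut it into two non-overlapping halves."""
    shuffled = list(subscribers)           # copy so the original order is untouched
    random.Random(seed).shuffle(shuffled)  # seeded so the split can be reproduced
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]

# Hypothetical list of 10,000 subscriber addresses
subscribers = [f"user{i}@example.com" for i in range(10_000)]
group_a, group_b = split_audience(subscribers)

print(len(group_a), len(group_b))          # 5000 5000
print(set(group_a).isdisjoint(group_b))    # True: nobody receives both versions
```

Because each subscriber appears in exactly one slice of the shuffled list, the two groups cannot overlap.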

Selecting Success Metrics

Pick clear goals to judge your test’s performance. Open rate, click-through rate, and conversion rate are popular choices to measure interest and action.

Define your priority ahead of time. If your goal is more newsletter sign-ups, track click-throughs or form completions. If you want to know which subject line boosts opens, focus on the open rate.

Watch secondary metrics like unsubscribe rate or spam complaints to make sure your changes don’t hurt your list's health. Trusted tools make it easy to monitor these numbers and adjust quickly.
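One way to combine a primary metric with a secondary "guardrail" metric is sketched below. The `VariantStats` class, the `pick_winner` function, the 0.5% unsubscribe ceiling, and the sample counts are all hypothetical values chosen for illustration. The sketch also ignores statistical significance, which a real decision should check.

```python
from dataclasses import dataclass

@dataclass
class VariantStats:
    name: str
    delivered: int
    clicks: int
    unsubscribes: int

    @property
    def click_rate(self):
        return self.clicks / self.delivered

    @property
    def unsubscribe_rate(self):
        return self.unsubscribes / self.delivered

def pick_winner(a, b, max_unsubscribe_rate=0.005):
    """Return the variant with the higher click rate among those that stay
    under the unsubscribe ceiling, or None if both exceed it."""
    eligible = [v for v in (a, b) if v.unsubscribe_rate <= max_unsubscribe_rate]
    if not eligible:
        return None
    return max(eligible, key=lambda v: v.click_rate)

# Made-up numbers: B gets more clicks but also drives more unsubscribes.
version_a = VariantStats("A", delivered=5000, clicks=250, unsubscribes=10)
version_b = VariantStats("B", delivered=5000, clicks=300, unsubscribes=40)

winner = pick_winner(version_a, version_b)
print(winner.name)  # A
```

Here version B is excluded because its unsubscribe rate (0.8%) is above the assumed ceiling, even though its click rate is higher.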

Executing Successful A/B Tests

To get the most from your A/B tests, set clear goals, prepare your test carefully, and focus on the data. Test smart elements, keep control groups steady, and avoid mistakes that can skew results.

Best Practices for Testing

Pick one variable to test at a time, like subject lines, send times, or call-to-action buttons. Testing multiple variables at once makes it hard to know what caused changes.

Set a clear goal before you start, such as increasing open rates or clicks. This helps you measure success.

Use a large enough sample size to get valid results. Too small a group can lead to random swings instead of real insights. Aim for at least a few hundred contacts per group when possible, and more when the difference you expect between versions is small.
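To get a rough feel for how large "large enough" is, the sketch below applies the standard normal-approximation formula for comparing two proportions. The 5% significance level, the 80% power, and the 18% versus 20% open rates are assumptions picked for illustration, and `sample_size_per_group` is a hypothetical helper rather than a function from any email tool.

```python
import math
from statistics import NormalDist

def sample_size_per_group(p_baseline, p_variant, alpha=0.05, power=0.80):
    """Approximate contacts needed per group to detect the gap between
    two rates with a two-sided test (normal approximation)."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    variance = p_baseline * (1 - p_baseline) + p_variant * (1 - p_variant)
    effect = p_variant - p_baseline
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)

# Hypothetical goal: detect a lift from an 18% to a 20% open rate
needed = sample_size_per_group(0.18, 0.20)
print(needed)  # about 6,000 per group under these assumptions
```

The smaller the gap you want to detect, the more contacts each group needs, so a few hundred contacts per group is only enough to pick up large differences.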

Track your results over a set period—usually 24 to 72 hours after send. This is when most engagement happens.

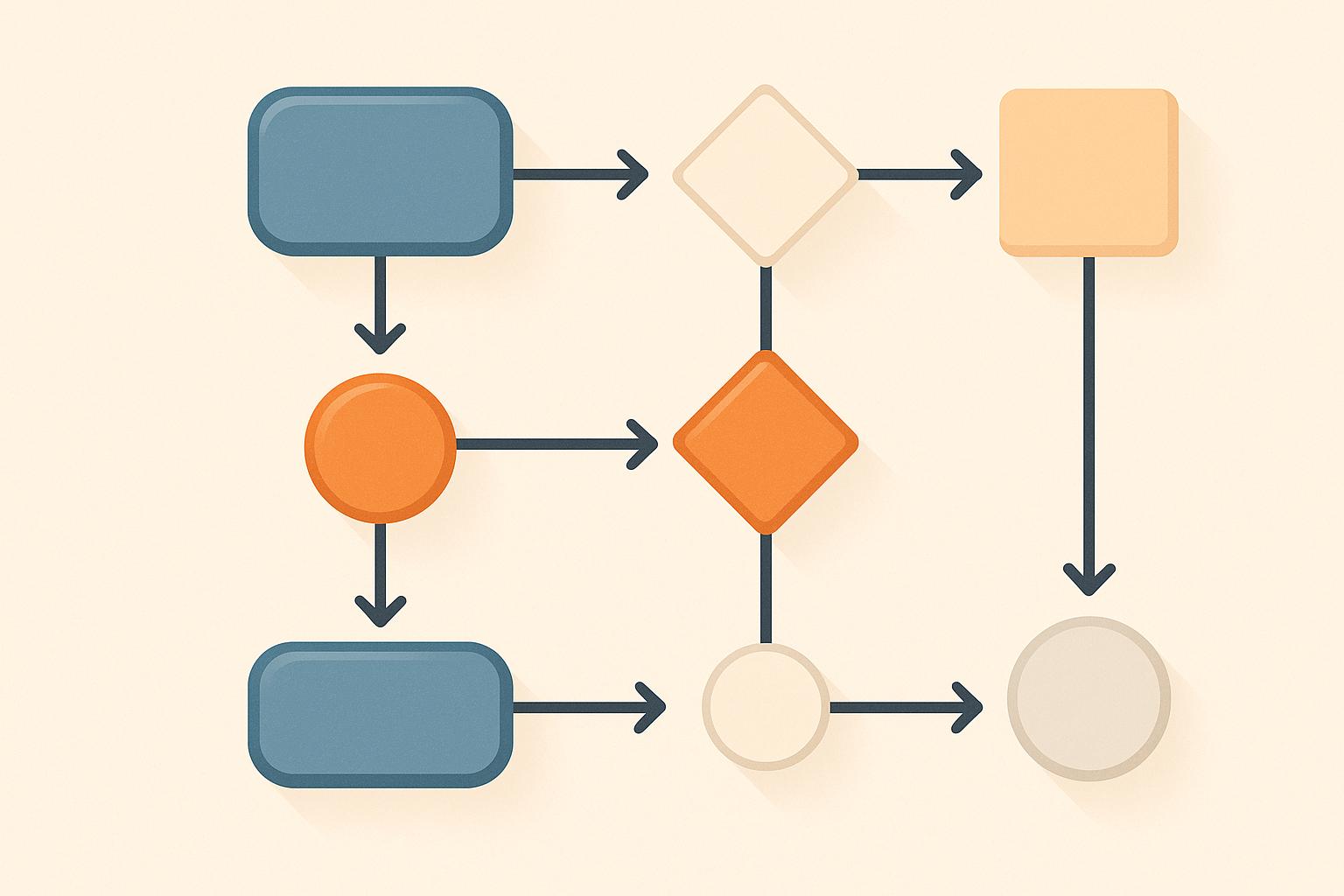

Running Control and Variation Emails

Your control email is the original version and serves as the baseline. Send this to half your test group. The variation email includes the change you want to test and goes to the other half.

Make sure the only difference between the two is the variable you’re testing. If subject lines differ, keep everything else the same—design, content, send time.

Send the control and variation emails at the same time. This avoids timing differences affecting your results. If you run tests regularly, create a repeatable process to keep things consistent.

Avoiding Common Pitfalls

Avoid testing too many things at once. It causes confusion and unreliable data. Stick to one test per campaign.

Let your test run long enough to collect solid data. Check your sample size and engagement before deciding to stop.

Make sure your groups are similar in size and type for a fair test.

Watch for external events that might influence results, like holidays or major news. These can skew your data.

Analyzing and Interpreting Results

To get value from your A/B tests, you need to understand what the numbers mean. Check if your results are reliable, track the right metrics, and decide your next move based on the data.

Understanding Statistical Significance

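Statistical significance tells you whether a result is likely real rather than the product of random chance. In email A/B testing, a common benchmark is a 95% confidence level, meaning a gap this large would show up less than 5% of the time if the two versions actually performed the same. If your test meets this, you can be fairly sure the difference between versions is genuine.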

For example, if one email version has a 20% open rate and the other 18%, significance testing helps you see whether that 2-percentage-point gap matters or falls within normal variation. Use tools or software to calculate this easily.
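For readers who want to see the calculation, here is a minimal sketch of a two-sided, two-proportion z-test using only the Python standard library. The function name and the group sizes (5,000 and 500 per version) are assumptions for illustration. Dedicated calculators or statistics libraries may use slightly different methods.

```python
import math
from statistics import NormalDist

def two_proportion_p_value(successes_a, total_a, successes_b, total_b):
    """Two-sided p-value for the difference between two rates,
    using a pooled standard error (normal approximation)."""
    rate_a = successes_a / total_a
    rate_b = successes_b / total_b
    pooled = (successes_a + successes_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    z = (rate_a - rate_b) / std_err
    return 2 * (1 - NormalDist().cdf(abs(z)))

# 20% vs. 18% open rate with 5,000 recipients per version
p_large = two_proportion_p_value(1000, 5000, 900, 5000)
# The same rates with only 500 recipients per version
p_small = two_proportion_p_value(100, 500, 90, 500)

print(p_large < 0.05)  # True: significant at the 95% level
print(p_small < 0.05)  # False: the same gap could easily be chance
```

The same 2-point gap passes the 95% benchmark with 5,000 recipients per version but not with 500, which is why sample size matters as much as the size of the gap.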

Measuring Key Performance Indicators

Focus on metrics that align with your campaign goals. Typical KPIs for email tests include:

- Open rate: The percentage of recipients who opened your email.

- Click-through rate (CTR): The percentage of recipients who clicked a link in your email.

- Conversion rate: Actions like signups or purchases.

- Unsubscribe rate: If your message turns people away.

Compare these numbers between your email versions to see which one performs better. Consider how the metrics work together. For example, a higher open rate with a low CTR might mean your subject line is strong, but the content needs work.
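The sketch below shows one way to compute these KPIs from raw counts. It assumes every rate is measured against delivered emails, which is a common convention but not a universal one. The `campaign_kpis` function and the sample counts are hypothetical. It also adds a click-to-open rate, an extra metric not listed above, to show how strong opens can hide weak content.

```python
def campaign_kpis(delivered, opens, clicks, conversions, unsubscribes):
    """Compute per-version rates, assuming each is measured against delivered emails."""
    return {
        "open_rate": opens / delivered,
        "click_through_rate": clicks / delivered,
        "conversion_rate": conversions / delivered,
        "unsubscribe_rate": unsubscribes / delivered,
        # Share of openers who clicked; 0.0 if nobody opened
        "click_to_open_rate": clicks / opens if opens else 0.0,
    }

# Made-up counts for one email version
kpis = campaign_kpis(delivered=5000, opens=1000, clicks=150,
                     conversions=30, unsubscribes=5)

for name, value in kpis.items():
    print(f"{name}: {value:.1%}")
```

Running the same function on each version's counts gives you a side-by-side view of how the versions compare on every metric.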

Actionable Next Steps After Testing

Once you know which version wins, use the better-performing elements in future emails. If results aren’t significant, try retesting with a larger sample or tweaking your approach.

You can segment your list to test in specific groups, like industry or role, for deeper insights. Use the data to refine your messaging, design, or send time. Automation tools help you apply winning tests efficiently, growing your B2B subscribers without extra manual work.

Optimizing Future Email Campaigns with A/B Testing

To keep improving your email campaigns, use the data from your A/B tests to make smart changes. Adjust your approach based on what your audience responds to, and apply those lessons in a repeatable way.

Continuous Improvement Strategies

When you run A/B tests, look at specific metrics like open rates, click-through rates, and conversion rates. Use these numbers to figure out what works best. For example, test different subject lines or send times, and keep what drives better engagement.

Test one element at a time for cleaner results. If your emails get more clicks when the call-to-action is clearer, use that insight in future campaigns. Over time, small improvements add up to better performance.

Make A/B testing part of your regular workflow. Even after finding winning tactics, audience preferences can change. Continuous testing helps you stay relevant and keeps your email list growing with quality subscribers.

Incorporating Learnings into Strategy

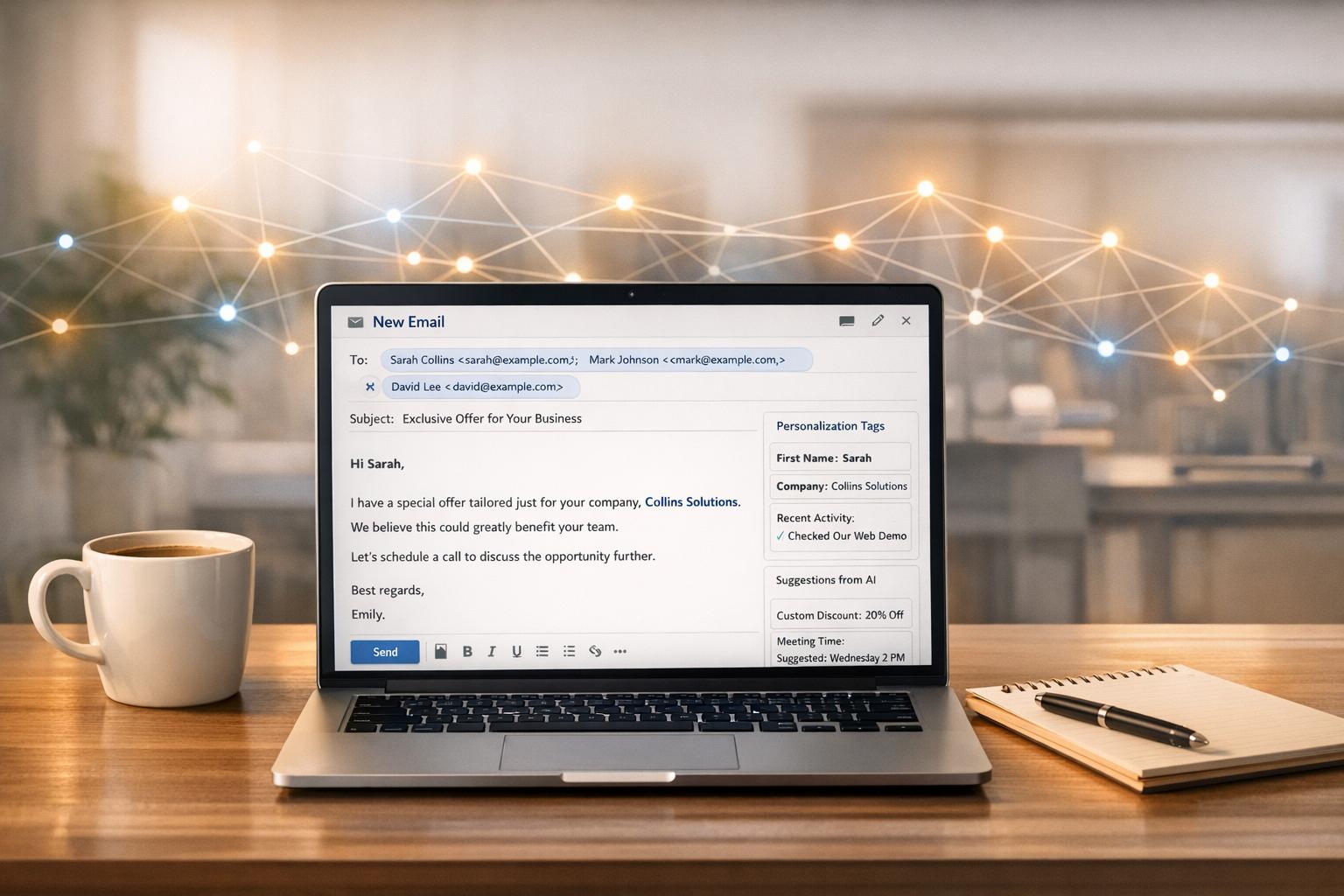

After gathering results, update your email templates and content plans to reflect the winning elements. For instance, if personalized greetings boost engagement, make that a standard part of your emails.

Use the insights to refine your audience targeting. If you see higher engagement from certain industries or job roles, focus your list-building efforts there. Some platforms can automate some of this by helping you reach the right B2B subscribers at scale.

Document your findings and create a playbook for your team. This keeps everyone aligned and speeds up future campaign building. By turning testing lessons into clear actions, you make your marketing more efficient and avoid repeating past mistakes.

Closing the Loop on Smarter Email Campaigns

A/B testing isn’t just a growth hack—it’s a strategic habit. By testing small, high-leverage elements like subject lines, CTAs, and send times, you reduce guesswork and increase every email’s impact. The key is consistency: test, measure, learn, and apply.

For B2B teams focused on scaling list quality and engagement, tools like Breaker help automate the hard parts, so you can focus on what drives results.

Start growing your list today with smarter leads.

Frequently Asked Questions

Testing different parts of your email can improve open rates, clicks, and subscriber growth. Knowing what to test and how to measure results helps you make better decisions and get more value from your campaigns.

How can I effectively A/B test my email campaign's subject lines?

Try two subject lines that differ in one clear way, like length or tone. Keep everything else the same so you know the subject line caused the difference.

Use a segment of your list for the test, then send the winning subject line to the rest. Make your sample size large enough to get reliable results.
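A rough sketch of this test-then-send approach: carve a random slice off the list, split that slice between the two subject lines, and hold back everyone else for the winner. The 20% test fraction, the seed, and the `split_test_and_remainder` helper are illustrative assumptions.

```python
import random

def split_test_and_remainder(subscribers, test_fraction=0.2, seed=7):
    """Return two equal test groups plus the remaining subscribers,
    who receive the winning version later."""
    shuffled = list(subscribers)
    random.Random(seed).shuffle(shuffled)
    half = int(len(shuffled) * test_fraction) // 2
    group_a = shuffled[:half]
    group_b = shuffled[half:half * 2]
    remainder = shuffled[half * 2:]
    return group_a, group_b, remainder

# Hypothetical list of 10,000 subscriber addresses
all_subscribers = [f"user{i}@example.com" for i in range(10_000)]
test_a, test_b, rest = split_test_and_remainder(all_subscribers)

print(len(test_a), len(test_b), len(rest))  # 1000 1000 8000
```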

What are some successful A/B testing examples in email marketing?

Testing questions vs. statements in subject lines often shows which gets more opens. Another example is testing personalization, like including a first name versus a generic greeting.

Breaker users have seen higher engagement after testing the time of day and the sender's name. Small changes here have helped them grow their B2B newsletter lists faster.

What are the best practices for conducting A/B tests within email campaigns?

Test one variable at a time to know what makes the difference. Use clear hypotheses like "Will a shorter subject line improve open rates?"

Run tests with enough recipients to be confident in the results. Avoid testing during unusual events that might skew data.

Is it better to send A/B test emails simultaneously or at different times?

Send test emails at the same time to reduce timing biases. Differences in send time can affect results because audience behavior changes throughout the day.

If you can’t send simultaneously, choose similar send windows to keep data consistent.

Can you provide insights on using Mailchimp for A/B testing in email campaigns?

Mailchimp lets you test subject lines, content, and send times with split campaigns. It automatically picks the winner based on the metric you choose, like open rate or click rate.

Define your winning metric before you start so the test stays focused on a single goal.

What metrics should I focus on when evaluating the results of an A/B email test?

Look at the open rate for subject line tests and click-through rate for content tests. Track conversion rate if you want people to take specific actions, like sign-ups or sales.

Engagement and unsubscribe rates show if your message connects with your audience or causes them to leave.