Ultimate Guide to CTA Testing in Email Marketing

CTA testing in email marketing is about finding out which call-to-action (CTA) variations work best to drive engagement and conversions. A CTA prompts readers to take specific actions, such as clicking a button, subscribing, or making a purchase. Testing helps identify the most effective design, wording, and placement to improve results.

Here’s the process in a nutshell:

- Set Goals: Define measurable objectives like boosting click-through rates or increasing sign-ups.

- Test Elements: Experiment with button text, colors, placement, and personalization.

- Use A/B or Multivariate Testing: Compare one or multiple variables to see what performs better.

- Analyze Metrics: Focus on click-through rates, conversions, revenue per email, and unsubscribe rates.

- Ensure Reliable Results: Use proper sample sizes, test durations, and statistical significance.

For B2B email marketing, CTA testing is especially critical because decisions often involve multiple stakeholders and longer sales cycles. Tools like Breaker can streamline testing by providing real-time analytics, ensuring high deliverability, and targeting the right audience.

Key takeaway: Testing and refining CTAs is essential for turning email readers into engaged customers. Use data, not guesswork, to optimize your campaigns and achieve better outcomes.


How to Plan Your CTA Test

To get the most out of your CTA tests and elevate your B2B email campaigns, you need a well-thought-out plan. Start by defining specific, measurable goals that guide your testing process.

Set Clear Goals for CTA Testing

The foundation of any successful CTA test lies in having clear, measurable objectives. Without them, it's hard to design effective tests or make sense of the results.

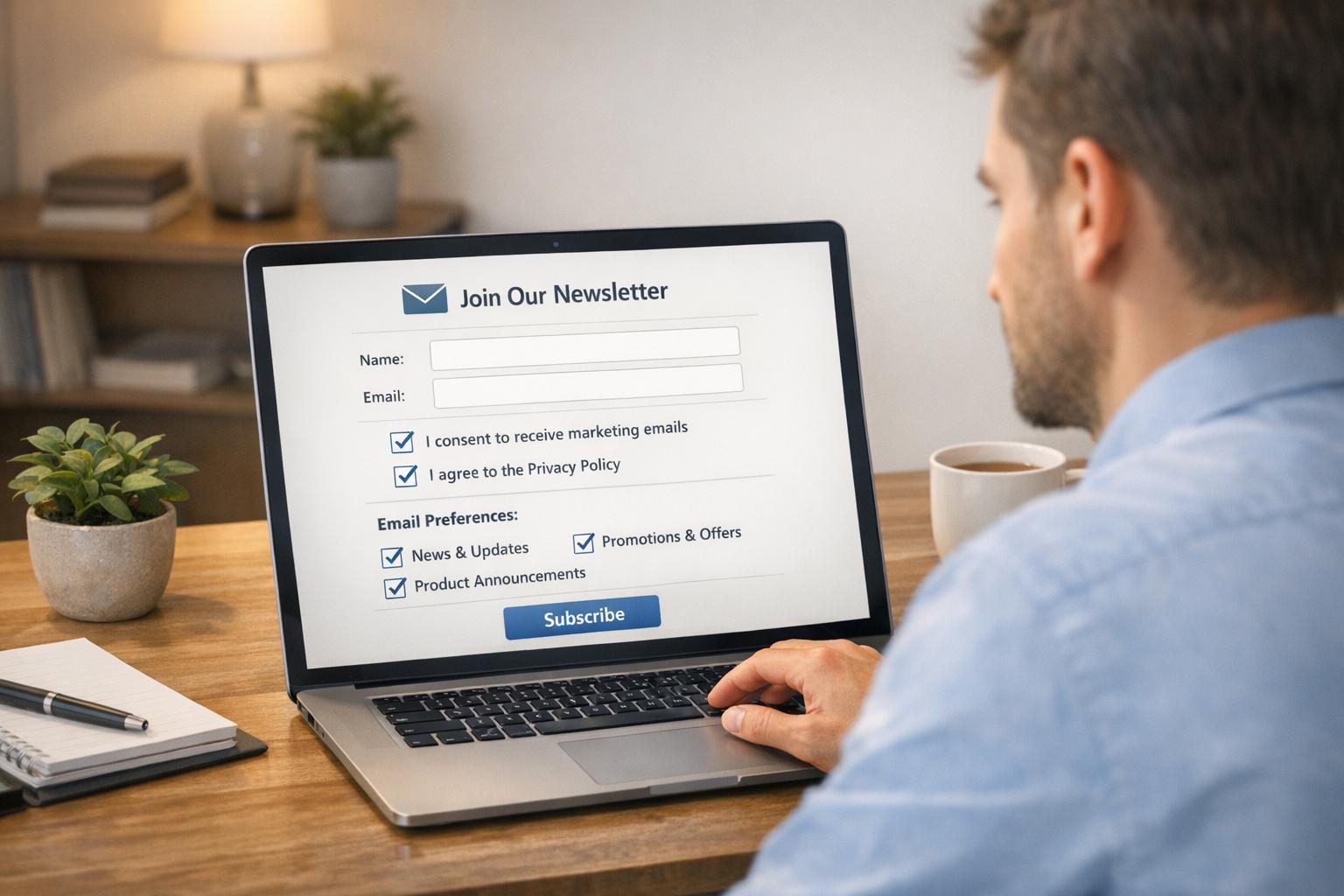

Choose one specific goal, such as boosting click-through rates or increasing webinar sign-ups, and tie it directly to a metric that affects revenue or growth. For instance, if your click-through rate is lagging, set a concrete improvement target (a specific percentage-point increase) and a deadline for reaching it. Make sure the metric matches the purpose of the email: a newsletter might focus on engagement metrics like clicks, while a product announcement should prioritize conversions such as sign-ups or purchases. In many B2B campaigns, the end goal is a booked meeting or a proposal request.

Once your goals are set, identify the CTA elements most likely to influence those metrics.

Choose Which CTA Elements to Test

CTAs are made up of several components, each of which can impact performance. Knowing what to test helps you focus on changes that matter.

- Button Text: Swap out generic phrases for text that highlights value. For example, test "Download Free Guide" against "Get Your Marketing Playbook" or "Schedule Demo" versus "See How It Works."

- Visual Design: Try different button colors, especially those that contrast with your email's background. Also, ensure the button size is user-friendly - large enough for mobile users but not so big that it looks clunky.

- Placement: Where you place the CTA in your email matters. Test positions like immediately after your main value proposition, at the end of the email, or even in multiple locations for longer emails. Placing CTAs in both the upper and lower sections can capture attention at different points of engagement.

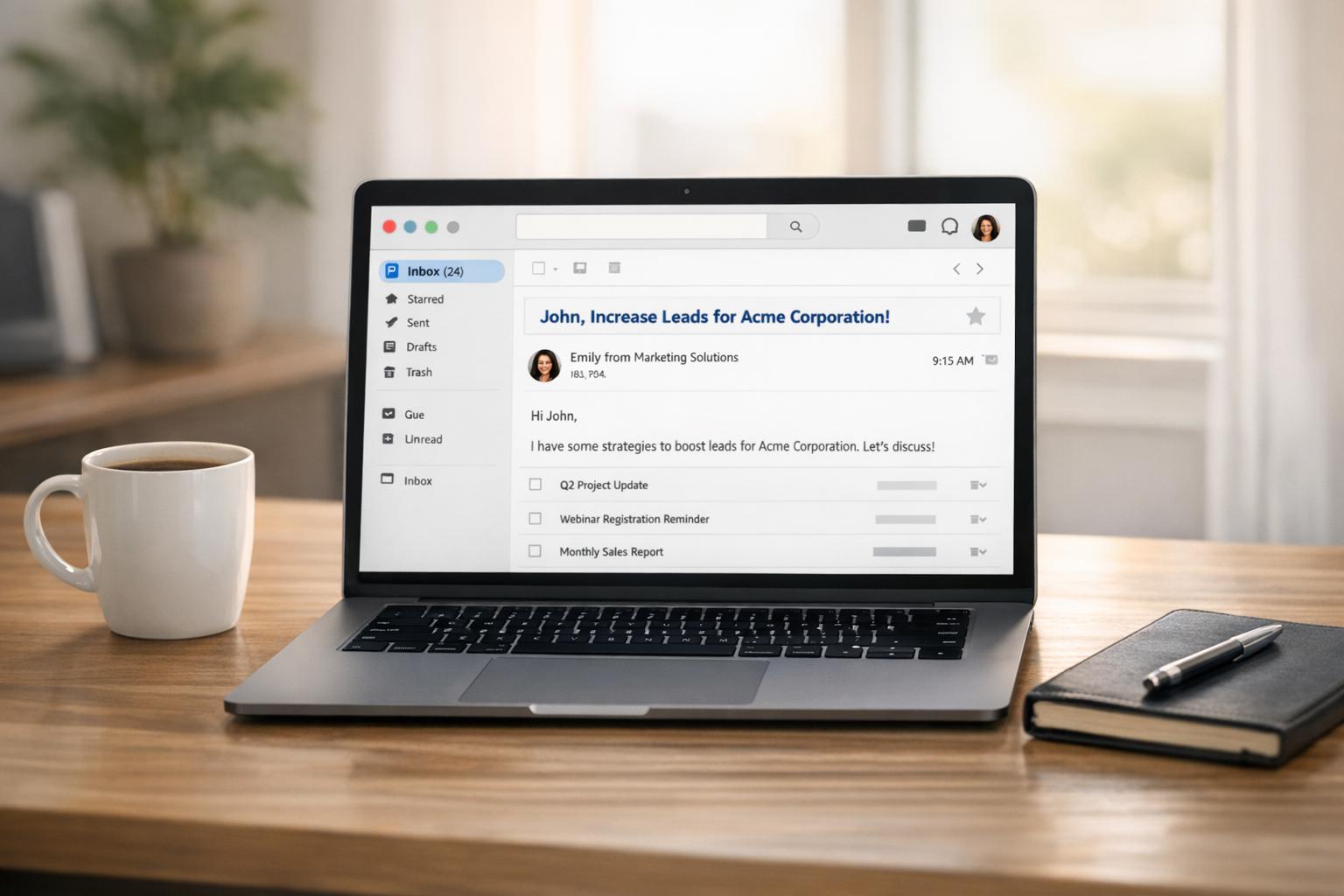

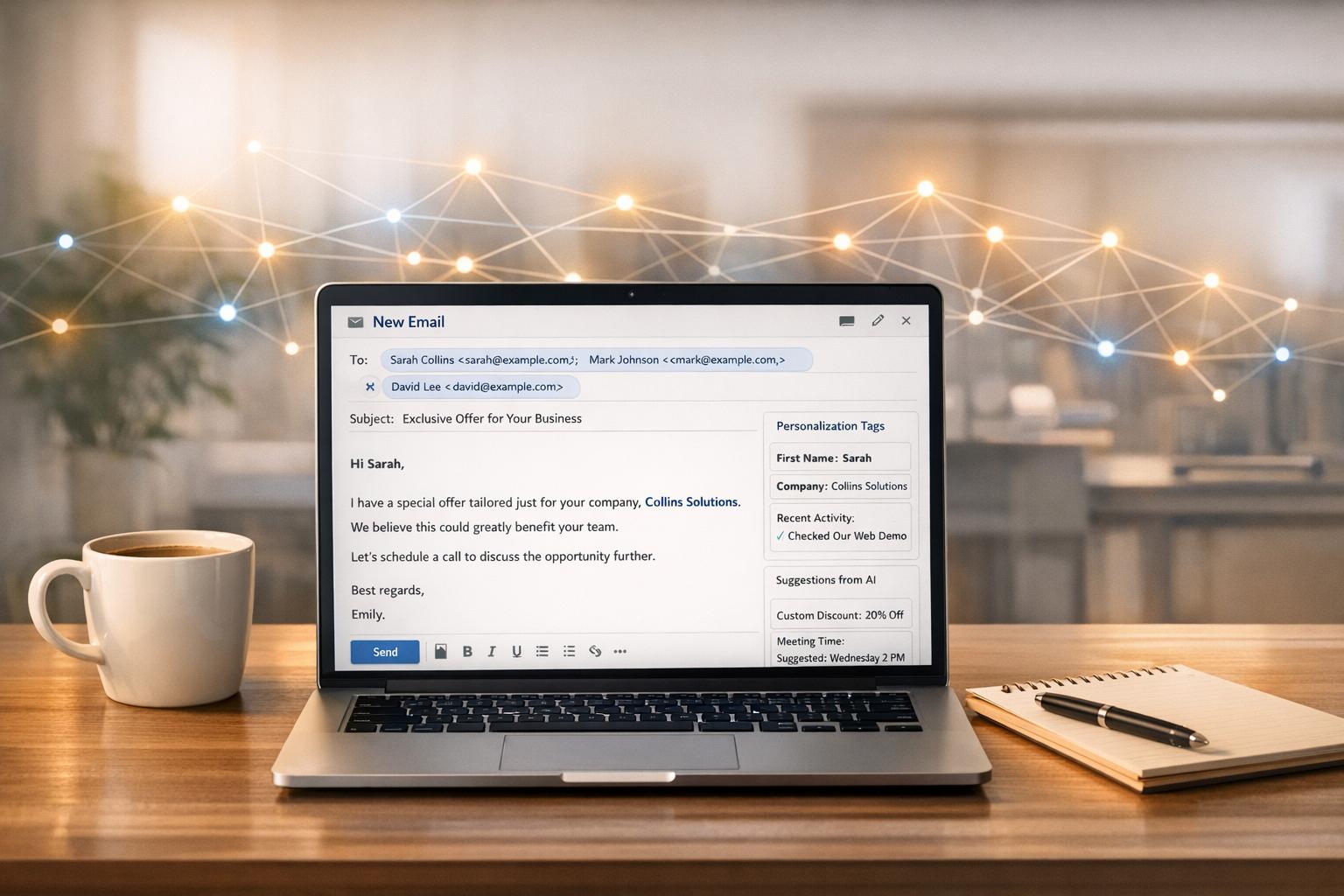

- Personalization: Tailor CTAs to the recipient's context. For instance, a generic "Request Demo" might not perform as well as "Request a Demo for [Recipient's Industry]." Including details like the recipient’s company name or role can make the CTA feel more relevant.
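Personalized CTAs need a fallback for recipients whose data is missing. Without one, a template can render a broken phrase like "Request a Demo for ". The sketch below shows that fallback logic in Python. The `personalized_cta` helper and the recipient dictionaries are hypothetical, and your email platform will use its own merge-tag syntax rather than Python code.

```python
# Hypothetical helper: illustrates fallback logic only. Real email platforms
# use their own merge-tag syntax for personalization.

def personalized_cta(base_text: str, recipient: dict, field: str = "industry") -> str:
    """Return a CTA tailored to the recipient, or the generic text if data is missing."""
    value = (recipient.get(field) or "").strip()
    if not value:
        # Fall back to the generic CTA instead of rendering "Request a Demo for ".
        return base_text
    return f"{base_text} for {value}"


recipients = [
    {"email": "ana@example.com", "industry": "Logistics"},
    {"email": "ben@example.com", "industry": ""},   # empty field
    {"email": "cy@example.com"},                     # field missing entirely
]

for r in recipients:
    print(r["email"], "->", personalized_cta("Request a Demo", r))
```

Testing the personalized version against the generic one is still a single-variable test. Only the wording changes; the fallback just keeps incomplete records from producing awkward copy.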

Create a Control Version and Test One Variable at a Time

Your control version acts as the benchmark for comparison. If you’ve never tested before, start with your current CTA or the best-performing one you’ve used.

Document every detail of your control CTA - text, color (hex code), font size, placement - so you can track what changes drive results. This record-keeping ensures you can replicate what works and identify what doesn’t.

Focus on testing one element at a time. For instance, if you change both the button text and its color in the same test, you won’t know which adjustment made the biggest difference. Start with impactful elements like button text or color before moving on to subtler tweaks like font size or border styles.
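You can enforce two habits at once: documenting the control, and changing only one element. Store each CTA version as a structured record and compare it with the control before sending. This is a minimal Python sketch. The `CtaSpec` fields and example values are hypothetical, chosen to mirror the details listed above (text, hex color, font size, placement).

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CtaSpec:
    """Everything recorded about one CTA version (hypothetical field set)."""
    text: str
    color_hex: str
    font_size_px: int
    placement: str


def changed_fields(control: CtaSpec, variant: CtaSpec) -> list[str]:
    """List the attributes where the variant differs from the control."""
    return [f.name for f in fields(CtaSpec)
            if getattr(control, f.name) != getattr(variant, f.name)]


def check_single_variable(control: CtaSpec, variant: CtaSpec) -> str:
    """Return the one changed attribute, or raise if zero or several changed."""
    diff = changed_fields(control, variant)
    if len(diff) != 1:
        raise ValueError(f"Expected exactly one change, found {diff}")
    return diff[0]


control = CtaSpec("Schedule Demo", "#1A73E8", 16, "after value proposition")
variant_ok = CtaSpec("See How It Works", "#1A73E8", 16, "after value proposition")
variant_bad = CtaSpec("See How It Works", "#E8711A", 16, "after value proposition")

print(check_single_variable(control, variant_ok))  # prints "text"

try:
    check_single_variable(control, variant_bad)
except ValueError as err:
    print(err)  # both text and color changed, so the result would be ambiguous
```

Keeping these records also gives you the test log described below for free. Each saved `CtaSpec` pair documents exactly what was compared.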

Make sure each test group is large enough to produce reliable results. A sample that is too small rarely reaches statistical significance, even when one version really is better. Timing matters too. Email engagement varies across the week, so running your test over a full business week gives a clearer picture. Avoid periods of unusual activity, like holidays or major industry events, which could skew results.

Finally, keep detailed records of each test. Document your hypothesis, the variations you tested, and the conditions during the test period. These records will not only help you analyze the results but will also serve as a valuable resource for refining future CTA strategies.

How to Run CTA Tests: Methods and Best Practices

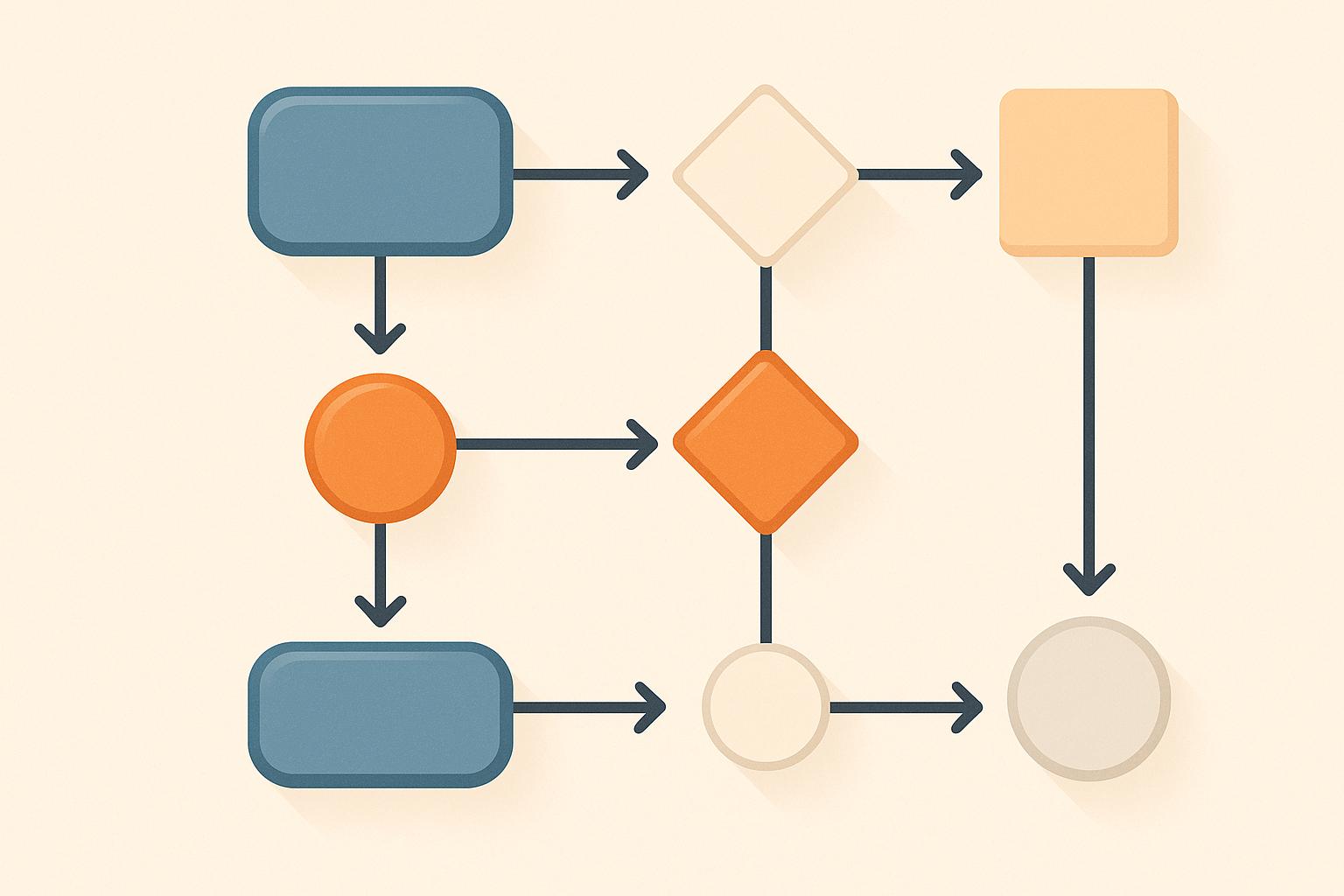

When it comes to running a CTA test, the way you design and execute it can make or break the quality of your insights. Let’s break down the differences between A/B and multivariate testing and go over some practical tips to ensure your tests are set up for success.

A/B Testing vs. Multivariate Testing

Once you’ve mapped out your test plan, the next step is choosing the right testing method for your goals.

A/B testing is the simpler option. You split your email list into two groups, and each group receives a different version of your CTA. This method works best when you’re testing a single variable, like the button text or its color. For example, you might compare “Book Your Consultation” with “Schedule a Meeting” to see which resonates more with your audience. A/B tests are relatively easy to analyze and need a smaller list than multivariate tests. The exact sample size depends on your baseline rates and on how small a difference you want to detect.

Multivariate testing, on the other hand, lets you test several elements at once by creating multiple combinations of variations. This can help you uncover how different elements interact with one another. For instance, you might test three button texts, two colors, and two placement options, resulting in 12 combinations. While this approach provides deeper insights, it demands a larger email list and more advanced analysis to draw accurate conclusions.
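Code makes it easy to check the combination count, and to see why multivariate tests need bigger lists. This Python sketch uses the example from the paragraph above (three button texts, two colors, two placements). The specific colors, the placement labels, and the 24,000-recipient list size are hypothetical.

```python
from itertools import product

button_texts = ["Book Your Consultation", "Schedule a Meeting", "See How It Works"]
colors = ["#1A73E8", "#E8711A"]   # hypothetical hex codes
placements = ["top", "bottom"]    # hypothetical placement labels

# Every combination of the three factors is one email version to send.
combinations = list(product(button_texts, colors, placements))
print(len(combinations))  # 12

# Splitting the same list evenly across every version shrinks each group.
list_size = 24_000
per_cell = list_size // len(combinations)
print(per_cell)  # 2000 recipients per version

# For comparison, a two-version A/B test on the same list gets 12,000 per version.
print(list_size // 2)  # 12000
```

Each added level multiplies the number of versions. That is why per-version groups shrink quickly and multivariate tests demand larger lists.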

Best Practices for Test Design

Now that you know the methods, here’s how to design a test that produces reliable results.

- Calculate your sample size: Before starting, figure out how many people you need to include in your test to ensure the results are statistically reliable. This helps avoid running a test that’s either too small to detect meaningful differences or unnecessarily large and resource-intensive.

- Randomize your groups: Randomly assign recipients to each test group so the groups are comparable in demographics and engagement levels. Uneven groups can distort your results.

- Choose the right test duration: Run your test long enough to capture a variety of engagement patterns. For most campaigns, a full business week is ideal, but this can vary depending on your email volume and audience behavior.

- Send emails at the same time: Timing matters. If you send one version in the morning and another in the afternoon, you might end up measuring the effects of timing rather than the CTA itself.

- Avoid testing during disruptions: Skip running tests during holidays, major industry events, or company announcements. These factors can influence engagement and muddy your results.

- Stick to your plan: It’s tempting to call a winner early, especially if one version seems to be pulling ahead. But resist the urge until your test hits the planned sample size and duration. Early performance differences can be misleading.
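The first two practices can be sketched in Python using only the standard library. `required_sample_size` uses the common normal-approximation formula for comparing two proportions. The inputs (a 5% baseline CTR, a 6% target, 95% confidence, and 80% power) are illustrative assumptions, not recommendations. `random_split` is a hypothetical helper that shuffles the list with a fixed seed so the assignment is reproducible.

```python
import math
import random
from statistics import NormalDist


def required_sample_size(p_control: float, p_variant: float,
                         confidence: float = 0.95, power: float = 0.80) -> int:
    """Recipients needed per group to detect p_control vs p_variant (two-sided test)."""
    z_alpha = NormalDist().inv_cdf(1 - (1 - confidence) / 2)  # about 1.96 for 95%
    z_beta = NormalDist().inv_cdf(power)                       # about 0.84 for 80% power
    variance = p_control * (1 - p_control) + p_variant * (1 - p_variant)
    effect = p_variant - p_control
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def random_split(recipients, n_groups=2, seed=2024):
    """Shuffle a copy of the list, then deal recipients round-robin into groups."""
    shuffled = list(recipients)
    random.Random(seed).shuffle(shuffled)
    return [shuffled[i::n_groups] for i in range(n_groups)]


n_per_group = required_sample_size(0.05, 0.06)
print(n_per_group)  # a little over 8,000 with these inputs

recipients = [f"user{i}@example.com" for i in range(10)]
control_group, test_group = random_split(recipients)
print(len(control_group), len(test_group))  # 5 5
```

Try a smaller target lift, such as 5% vs. 5.5%. The required size grows sharply, because the squared effect sits in the denominator.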

Finally, keep everything consistent except for the CTA. Use the same email template, subject line, and content for all variations. And if something goes wrong - like a broken link - fix it across all versions to maintain the integrity of your test. Monitoring your test is important, but making mid-test changes can compromise your results.


How to Analyze CTA Test Results

Once your test wraps up and the data is in, it's time to analyze the numbers. This is where raw data about your call-to-action (CTA) becomes insight you can use to sharpen your next campaign. The key is knowing which metrics to focus on and interpreting them correctly, so you avoid missteps and make informed choices.

Key Metrics to Track for CTA Tests

The metrics you track should tie directly to the goals you set at the start. Here are the most important ones to keep an eye on:

- Click-through rate (CTR): This is often the go-to metric for CTA tests. It measures the share of recipients who clicked your CTA (commonly unique clicks divided by delivered emails), giving you a quick snapshot of engagement.

- Conversion rate: This goes beyond clicks, showing how many people completed the intended action after clicking. Whether it’s filling out a form, downloading a resource, or making a purchase, this metric is especially valuable for B2B marketers since it connects directly to lead generation and revenue.

- Revenue per email: If your CTA is tied to sales or high-value actions, this metric is a must. Calculate it by dividing the total revenue generated by the number of emails sent for each test variation. It’s a direct way to measure the financial impact of your changes.

- Unsubscribe rates: A spike here could signal that your CTA isn’t resonating or is causing frustration. Quality clicks shouldn’t come at the expense of losing subscribers.

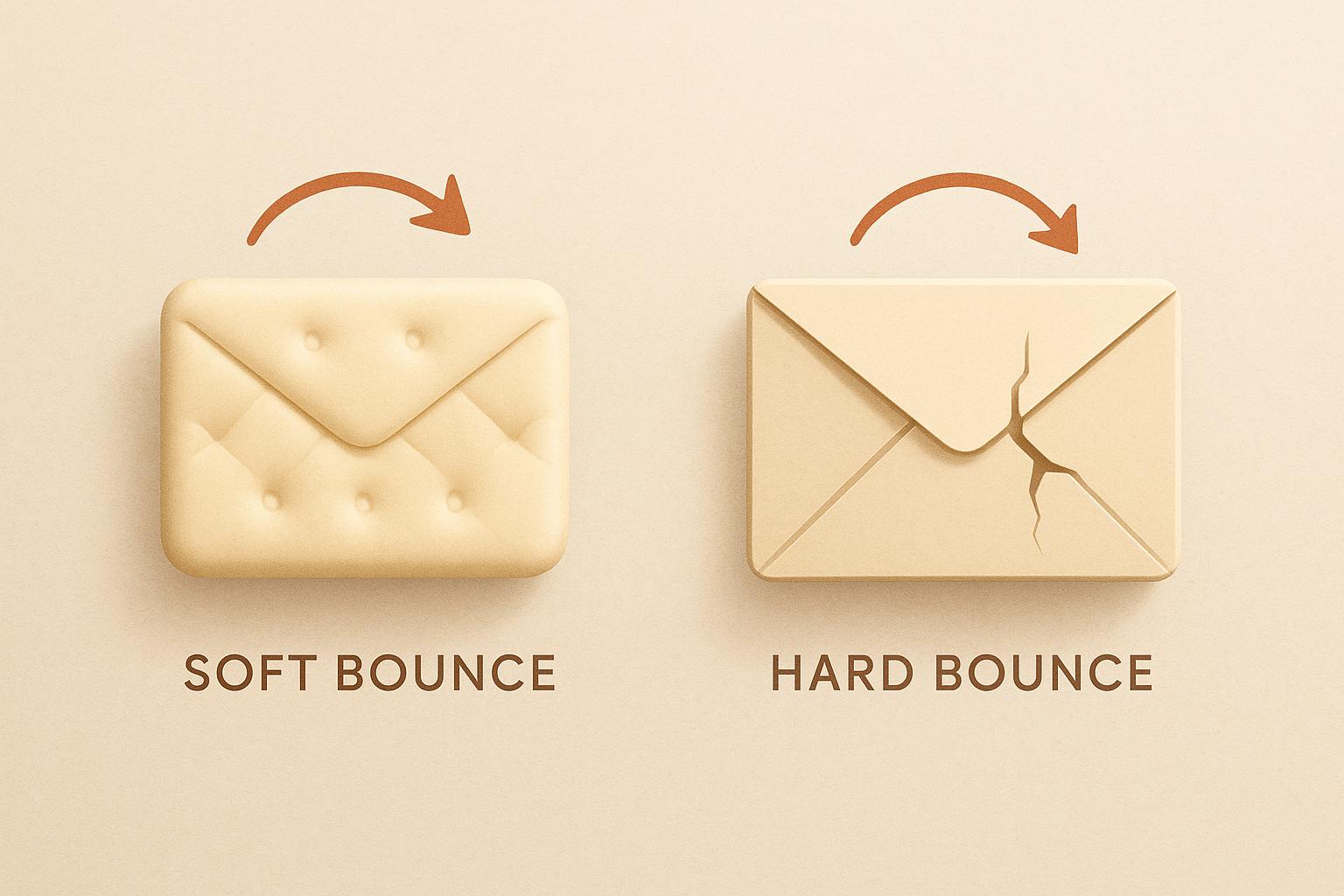

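- Landing-page bounce rate: If people land on the page your CTA links to and leave quickly, the CTA may be setting expectations the page doesn't meet. This is different from an email bounce, where the message isn't delivered at all.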

- Time to conversion: This metric shows how quickly people act after engaging with your CTA. Faster conversions might indicate clear messaging or urgency, while slower ones could point to gaps in your follow-up strategy.
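The core metrics above are simple ratios. Still, pinning down their denominators in code helps measure every test the same way. This Python sketch makes three assumptions:

- CTR is unique clickers divided by delivered emails.
- Conversion rate is conversions divided by clickers.
- Revenue per email is revenue divided by emails sent, as described above.

Platforms differ on these definitions, so match whatever your reporting uses. The `VariationResults` class and its numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class VariationResults:
    """Raw counts for one CTA variation (hypothetical structure)."""
    sent: int
    delivered: int
    unique_clicks: int
    conversions: int
    revenue: float
    unsubscribes: int

    @property
    def ctr(self) -> float:
        return self.unique_clicks / self.delivered

    @property
    def conversion_rate(self) -> float:
        # Guard against a variation that received no clicks at all.
        return self.conversions / self.unique_clicks if self.unique_clicks else 0.0

    @property
    def revenue_per_email(self) -> float:
        return self.revenue / self.sent

    @property
    def unsubscribe_rate(self) -> float:
        return self.unsubscribes / self.delivered


control = VariationResults(sent=10_000, delivered=9_800, unique_clicks=490,
                           conversions=60, revenue=2_300.0, unsubscribes=80)
print(f"CTR {control.ctr:.1%}, conversion {control.conversion_rate:.1%}, "
      f"revenue/email ${control.revenue_per_email:.2f}, "
      f"unsubscribes {control.unsubscribe_rate:.2%}")
```

Computing every variation through the same class keeps the comparison fair. A change in definition would otherwise look like a change in performance.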

These metrics help you measure success and set the groundwork for deeper analysis.

Statistical Significance and Confidence Intervals

Before calling a winner, you need to confirm your results are reliable - not just a fluke. That’s where statistical significance comes in. It tells you whether the differences between your test variations are meaningful or just random noise.

- Aim for a 95% confidence level, which corresponds to a p-value of 0.05 or lower. In practical terms, a difference at least this large would show up less than 5% of the time if the two CTAs actually performed the same. Plenty of online tools can calculate this, but patience is key: you need enough data to draw reliable conclusions.

- Sample size is critical. The smaller the difference between your test variations, the larger your sample needs to be. For instance, if your control CTA has a 5% CTR and the test variation hits 6%, confirming that 1-percentage-point gain takes roughly 8,000 recipients per variation. That figure assumes 95% confidence and 80% power, meaning an 80% chance of detecting a real difference of that size.

- Confidence intervals add another layer of understanding. Instead of saying, “Version B had a 6% CTR,” you could say, “Version B’s CTR is between 5.2% and 6.8% with 95% confidence.” It’s a more precise way to interpret your findings.
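Most online significance calculators for CTR tests run a two-proportion z-test. The Python sketch below shows that calculation plus 95% confidence intervals, using only the standard library. It relies on the normal approximation, which is reasonable for email-sized samples but not for very small groups. The click counts in the example are hypothetical: 5% vs. 6% CTR on 8,000 recipients each.

```python
from math import sqrt
from statistics import NormalDist


def proportion_ci(clicks: int, n: int, confidence: float = 0.95):
    """Normal-approximation (Wald) interval for a single CTR."""
    p = clicks / n
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    margin = z * sqrt(p * (1 - p) / n)
    return p - margin, p + margin


def compare_ctr(clicks_a: int, n_a: int, clicks_b: int, n_b: int,
                confidence: float = 0.95) -> dict:
    """Two-sided two-proportion z-test plus an interval for the difference (B - A)."""
    p_a, p_b = clicks_a / n_a, clicks_b / n_b
    diff = p_b - p_a
    # Pooled standard error assumes no real difference; used for the p-value.
    pooled = (clicks_a + clicks_b) / (n_a + n_b)
    se_pooled = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z_stat = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z_stat)))
    # Unpooled standard error; used for the interval around the difference.
    se_diff = sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return {"diff": diff, "p_value": p_value,
            "ci": (diff - z_crit * se_diff, diff + z_crit * se_diff)}


result = compare_ctr(400, 8_000, 480, 8_000)
low, high = result["ci"]
print(f"Difference {result['diff']:.2%}, p = {result['p_value']:.4f}, "
      f"95% CI {low:.2%} to {high:.2%}")

b_low, b_high = proportion_ci(480, 8_000)
print(f"Version B CTR: {b_low:.2%} to {b_high:.2%}")
```

With 8,000 recipients per group, the p-value falls below 0.05 and the interval for the difference excludes zero. With the same rates on only 1,000 recipients per group (`compare_ctr(50, 1_000, 60, 1_000)`), the p-value rises well above 0.05. That is the sample-size effect described above.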

Resist the urge to jump to conclusions too early. A variation that looks like a winner after 100 clicks might not hold up after 1,000 clicks.
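An A/A simulation shows why early winners are unreliable. Both versions have the same true CTR, so any "winner" is a false positive. This hypothetical Python sketch runs a two-proportion z-test after every batch of 200 recipients per version. It compares how often the test ever crosses p < 0.05 with how often it does so at the planned final sample. The 5% CTR, batch size, number of looks, and seed are arbitrary assumptions.

```python
import random
from math import sqrt
from statistics import NormalDist


def two_sided_p(c_a: int, n_a: int, c_b: int, n_b: int) -> float:
    """Two-proportion z-test p-value with a pooled standard error."""
    pooled = (c_a + c_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:  # no clicks (or only clicks) so far: no evidence either way
        return 1.0
    z = (c_b / n_b - c_a / n_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_aa(sims=400, true_ctr=0.05, batch=200, looks=10, alpha=0.05, seed=7):
    """Return (false-positive rate if you stop at any significant look,
    false-positive rate if you only check at the planned end)."""
    rng = random.Random(seed)
    stopped_early = checked_at_end = 0
    for _ in range(sims):
        clicks_a = clicks_b = n = 0
        ever_significant = False
        for _ in range(looks):
            # Both versions share the same true CTR: this is an A/A test.
            clicks_a += sum(rng.random() < true_ctr for _ in range(batch))
            clicks_b += sum(rng.random() < true_ctr for _ in range(batch))
            n += batch
            if two_sided_p(clicks_a, n, clicks_b, n) < alpha:
                ever_significant = True  # a "peeker" would have stopped here
        stopped_early += ever_significant
        checked_at_end += (two_sided_p(clicks_a, n, clicks_b, n) < alpha)
    return stopped_early / sims, checked_at_end / sims


peek_rate, final_rate = simulate_aa()
print(f"Stop at first significant look: {peek_rate:.0%}")
print(f"Check only at the planned end:  {final_rate:.0%}")
```

The peeking rule also counts the final look, so its false-positive rate can never be lower than the fixed-horizon rate. With repeated looks like these, it typically lands well above the nominal 5%.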

Present Test Results with Charts and Tables

Clear presentation of your results is just as important as the analysis itself. Use visuals to make your findings easy to understand and actionable. Here’s an example of how to structure your data:

| Metric | Control CTA | Test CTA | Difference (pp = percentage points) |
|---|---|---|---|
| Click-through Rate | 4.2% | 5.8% | +1.6 pp |
| Conversion Rate | 12.3% | 16.7% | +4.4 pp |
| Revenue per Email | $0.23 | $0.31 | +$0.08 |
| Unsubscribe Rate | 0.8% | 0.9% | +0.1 pp |
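The rates in this table are percentages, so a difference of +1.6 is easiest to read as percentage points. Relative lift (the difference divided by the control value) is a second common way to report the same change. This Python sketch generates table rows with both, using the example figures above. The `format_row` helper and its `kind` parameter are hypothetical.

```python
def format_row(name: str, control: float, test: float, kind: str = "rate") -> str:
    """Build one markdown table row with absolute difference and relative lift.

    kind="rate" treats values as percentages; kind="money" treats them as dollars.
    """
    diff = test - control
    lift = diff / control * 100  # relative change versus the control
    sign = "+" if diff >= 0 else "-"
    if kind == "rate":
        cells = [f"{control:.1f}%", f"{test:.1f}%", f"{sign}{abs(diff):.1f} pp"]
    else:
        cells = [f"${control:.2f}", f"${test:.2f}", f"{sign}${abs(diff):.2f}"]
    return f"| {name} | " + " | ".join(cells) + f" | {lift:+.1f}% |"


rows = [
    ("Click-through Rate", 4.2, 5.8, "rate"),
    ("Conversion Rate", 12.3, 16.7, "rate"),
    ("Revenue per Email", 0.23, 0.31, "money"),
    ("Unsubscribe Rate", 0.8, 0.9, "rate"),
]

print("| Metric | Control CTA | Test CTA | Absolute difference | Relative lift |")
print("|---|---|---|---|---|")
for row in rows:
    print(format_row(*row))
```

Reporting both numbers avoids a common misreading. A 1.6-point CTR gain on a 4.2% baseline is a relative lift of about 38%, not 1.6%.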

- Bar charts are great for quickly comparing performance across variations. They make it easy to spot which version came out ahead.

- Time-series charts can show how performance trends evolved during the test. For example, you might notice one CTA performed better on weekdays while another excelled on weekends, offering insights for future timing.

When sharing results, always provide context. Were there external factors, like holidays or industry events, that might have influenced outcomes? Include details like sample sizes, test durations, and confidence levels so others can assess the reliability of your conclusions.

Finally, don’t forget to segment your analysis. What works for one audience segment might not work for another. For example, a CTA that resonates with new subscribers might fall flat with long-time customers. These insights can guide more targeted strategies moving forward.
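Segment-level results come from grouping the same test data by audience segment before computing each rate. This Python sketch assumes one hypothetical record per recipient, stored as a (segment, variation, clicked) tuple. The made-up counts show the test CTA winning with new subscribers but not with long-time customers. Each segment is smaller than the full list, so its difference needs its own significance check before you act on it.

```python
from collections import defaultdict


def make_records(segment, variation, clicks, recipients):
    """Hypothetical helper that builds per-recipient rows for the example."""
    return ([(segment, variation, True)] * clicks
            + [(segment, variation, False)] * (recipients - clicks))


records = (make_records("new", "control", 40, 1_000)
           + make_records("new", "test", 70, 1_000)
           + make_records("long-time", "control", 60, 1_000)
           + make_records("long-time", "test", 55, 1_000))


def ctr_by_segment(rows):
    """Return {(segment, variation): CTR} from per-recipient rows."""
    totals = defaultdict(lambda: [0, 0])  # (segment, variation) -> [clicks, recipients]
    for segment, variation, clicked in rows:
        bucket = totals[(segment, variation)]
        bucket[0] += int(clicked)
        bucket[1] += 1
    return {key: clicks / n for key, (clicks, n) in totals.items()}


for (segment, variation), ctr in sorted(ctr_by_segment(records).items()):
    print(f"{segment:<10} {variation:<8} {ctr:.1%}")
```

In this made-up data, the overall result would hide the fact that long-time customers slightly preferred the control.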

Using Breaker for Better CTA Testing

When it comes to improving your call-to-action (CTA) testing, having the right tools can make all the difference. Breaker streamlines the process, offering real-time insights and reliable email deliverability - essentials for B2B marketers aiming to boost conversions.

Track CTA Performance with Breaker's Real-Time Analytics

Breaker’s real-time analytics give you instant access to data that can transform your CTA testing. Instead of waiting days or weeks for results, the platform’s dashboard delivers immediate insights into key metrics like click-through rates, conversion rates, and overall engagement.

This early visibility helps you spot trends sooner. You can see how different audience segments respond to each CTA, pinpoint the best times for engagement, and monitor the deliverability metrics that influence your outcomes. Breaker doesn’t stop at surface-level metrics like open rates; it digs deeper into subscriber behavior, helping you understand why certain CTAs perform better.

This is especially useful for time-sensitive campaigns or seasonal promotions. You can catch broken links or delivery problems quickly. Once a test reaches its planned sample size, you can roll out the winning CTA without waiting on a manual report. Use the live numbers to monitor a test, not to call a winner early: stopping or changing a test mid-run can compromise its results.

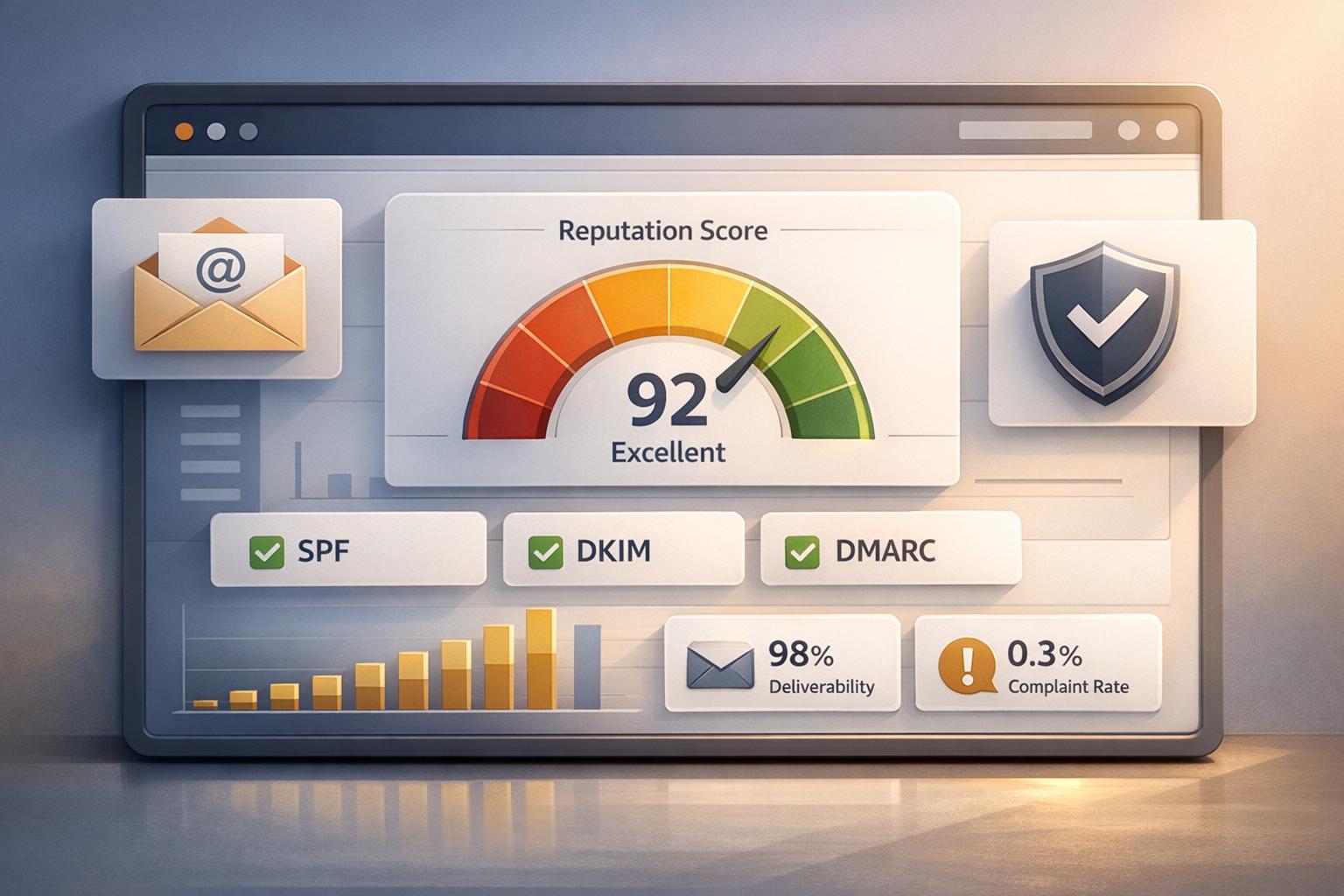

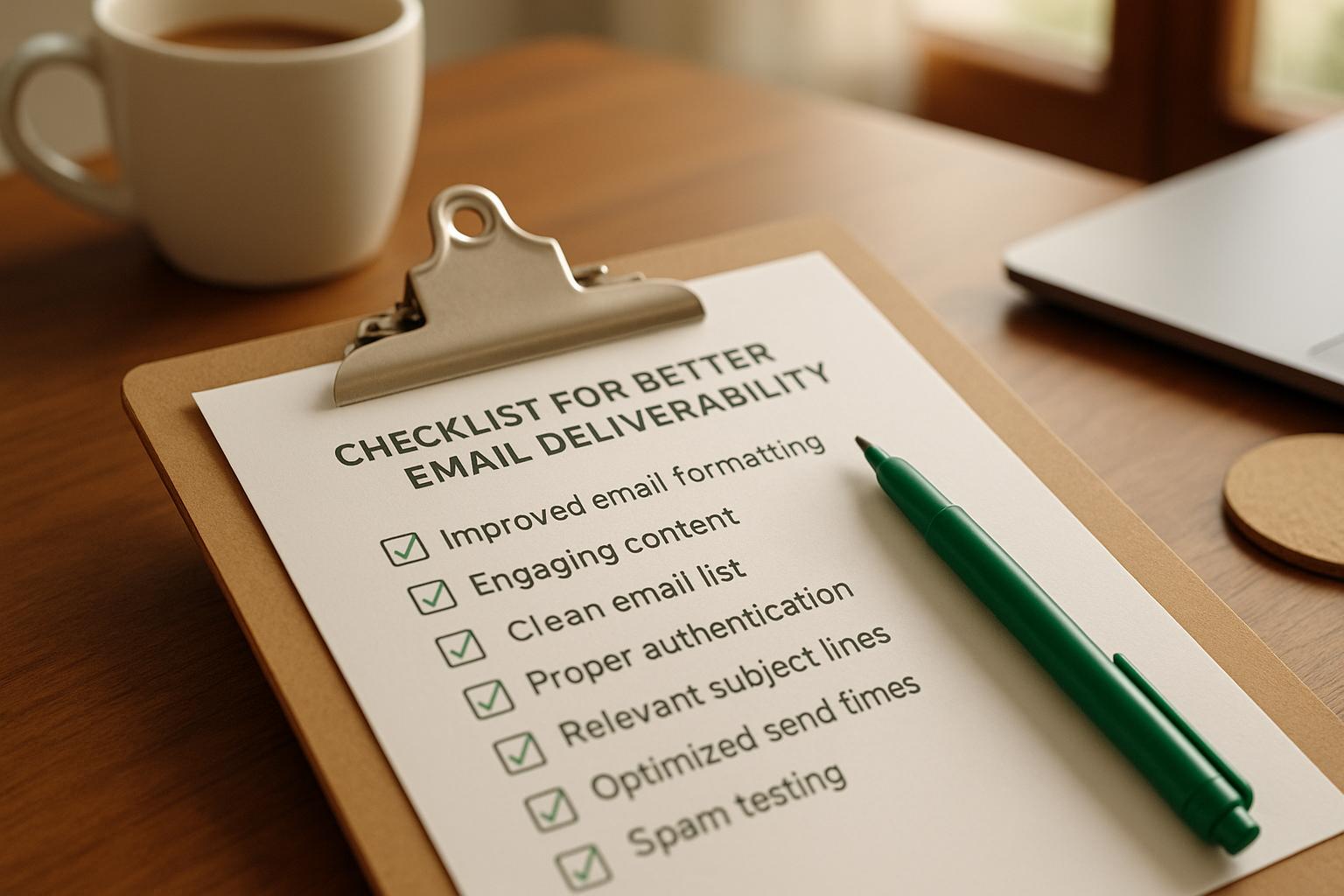

Improve Deliverability and Targeting with Breaker

Even the most well-crafted CTA test can fail if emails don’t reach their intended audience. Breaker tackles this challenge with its robust email marketing infrastructure, ensuring both high deliverability and precise targeting.

The platform’s deliverability is managed by a team of experts who handle the technical details for you:

"Our team of experts manage all mail streams on the back end for you. With a unique combination of sending logic, list hygiene, and reputation monitoring, we ensure your emails land in the inbox".

This means your emails are far less likely to end up in spam folders, giving you accurate test data that reflects genuine subscriber preferences.

On the targeting side, Breaker employs an algorithm designed to attract subscribers who match your ideal customer profile (ICP). It automatically delivers highly engaged B2B subscribers who fit your ICP, so your CTAs reach people who are genuinely interested in your offerings. Test results then better reflect the buyers you care about, which can also lift conversion rates.

Connect Breaker with Your Current Workflow

Breaker’s ability to integrate seamlessly with your existing tools makes it an even more powerful asset for CTA testing. Its user-friendly newsletter builder allows you to easily deploy test variations, while CRM integrations help you sync subscriber data, track conversions, and maintain consistent records across your marketing stack.

This compatibility means you can continue using your favorite analytics tools and platforms while taking advantage of Breaker’s specialized email marketing features. By combining newsletter creation, automated audience growth, and managed deliverability, Breaker frees you to focus on crafting effective CTAs and analyzing results without worrying about technical hurdles.

Conclusion: Get the Most from CTA Testing

CTA testing is a cornerstone of email marketing success, going far beyond surface-level tweaks to truly boost engagement. As discussed, achieving meaningful results requires careful planning, precise execution, and thorough analysis. By setting clear goals, following a structured testing process, and turning insights into actionable strategies, you can transform your data into tangible growth.

To execute effectively, focus on the essentials: proper sample sizes, sufficient test durations, and a working understanding of statistical significance. Two mistakes can undermine the reliability of your results: calling a winner too soon, and changing several elements at once in a simple A/B test. Metrics like click-through rates, conversion rates, and revenue per email show whether a change succeeded. Statistical significance confirms your decisions rest on real trends, not random noise.

Tools like Breaker's real-time analytics and targeting capabilities take your CTA testing to the next level. By eliminating delays and ensuring tests reach highly engaged prospects aligned with your ideal customer profile, you can make faster, more informed decisions. Incorporating these insights into your strategy helps drive consistent, long-term growth.

FAQs

What are the key CTA elements to test for boosting email engagement in B2B marketing?

To boost email engagement in B2B marketing, it's worth experimenting with key elements of your call-to-action (CTA). Here's what to focus on:

- Use clear, action-oriented language: Keep your wording short and impactful with verbs that prompt immediate action, like "Download Now" or "Start Your Free Trial."

- Place your CTA strategically: Make it easy to spot by positioning it prominently, such as at the top of the email or following key sections of your content.

- Make it visually appealing: Play around with button size, colors, fonts, and spacing to ensure the CTA grabs attention without clashing with the overall design.

- Align it with your content: The CTA should naturally fit the tone and purpose of the email, so it feels like a logical next step for the reader.

By testing different approaches to these elements, you can uncover what connects best with your audience and drives more clicks and conversions.

How do I make sure my CTA test results are accurate and meaningful?

To get reliable and meaningful results from your CTA tests, start by making sure your sample size is large enough to truly reflect your audience. Aim for a 95% confidence level (90% at the very least), which limits the risk of declaring a winner that isn't really better. Also, let the test run long enough to capture natural fluctuations in engagement. Cutting it off too soon, even if the early data looks promising, can lead to misleading outcomes.

After completing the test, check that the results are statistically significant before declaring a winner. This step is crucial to ensure your conclusions are solid and can guide your future campaigns effectively.

Why is email deliverability important for effective CTA testing, and how does Breaker help improve it?

Email deliverability plays a key role in effective CTA testing. If your emails don’t make it to your audience’s inbox, even the most compelling CTAs won’t get the chance to perform. Reliable deliverability ensures your messages reach the right people, boosting engagement and response rates.

Breaker supports deliverability with an expert team that manages mail streams on the back end, using sending logic, list hygiene, and reputation monitoring. Its ICP-based audience targeting puts your CTAs in front of relevant subscribers, and real-time performance tracking lets you spot delivery problems quickly. Together, these help ensure your emails are not only seen but also acted upon.